Thanks for your reply.

However, I have run the tutorial (https://tvm.apache.org/docs/tutorials/frontend/deploy_model_on_rasp.html) step by step.

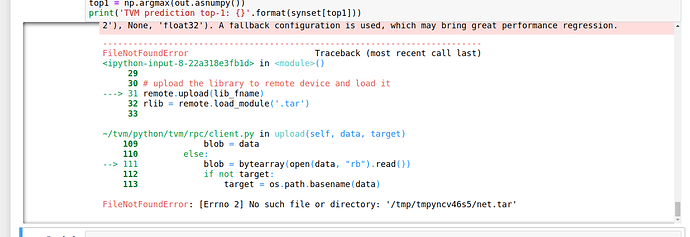

I changed the versions of TVM and LLVM, but the error below still occurs:

RPCError: Traceback (most recent call last):

[bt] (8) /home/ljs/tvm/build/libtvm.so(std::_Function_handler<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*), tvm::runtime::RPCModuleNode::WrapRemoteFunc(void*)::{lambda(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)#1}>::_M_invoke(std::_Any_data const&, tvm::runtime::TVMArgs&&, tvm::runtime::TVMRetValue*&&)+0x33) [0x7ff0e9e88b43]

[bt] (7) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0x3c5) [0x7ff0e9e885a5]

[bt] (6) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x57) [0x7ff0e9e7c397]

[bt] (5) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x215) [0x7ff0e9e73dd5]

[bt] (4) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::HandleUntilReturnEvent(bool, std::function<void (tvm::runtime::TVMArgs)>)+0x1ab) [0x7ff0e9e72d8b]

[bt] (3) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::EventHandler::HandleNextEvent(bool, bool, std::function<void (tvm::runtime::TVMArgs)>)+0xd7) [0x7ff0e9e7c0a7]

[bt] (2) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::EventHandler::HandleProcessPacket(std::function<void (tvm::runtime::TVMArgs)>)+0x126) [0x7ff0e9e7be86]

[bt] (1) /home/ljs/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::EventHandler::HandleReturn(tvm::runtime::RPCCode, std::function<void (tvm::runtime::TVMArgs)>)+0x13f) [0x7ff0e9e7b3af]

[bt] (0) /home/ljs/tvm/build/libtvm.so(+0x17ab042) [0x7ff0e9e71042]

[bt] (8) /home/ubuntu/tvm/build/libtvm.so(+0x103b100) [0xffff9d1bc100]

[bt] (7) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCServerLoop(int)+0xac) [0xffff9d1bb7b4]

[bt] (6) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::ServerLoop()+0xe8) [0xffff9d19f0c8]

[bt] (5) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::HandleUntilReturnEvent(bool, std::function<void (tvm::runtime::TVMArgs)>)+0x258) [0xffff9d19eb40]

[bt] (4) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::EventHandler::HandleNextEvent(bool, bool, std::function<void (tvm::runtime::TVMArgs)>)+0x1e4) [0xffff9d1a7184]

[bt] (3) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCEndpoint::EventHandler::HandleProcessPacket(std::function<void (tvm::runtime::TVMArgs)>)+0x168) [0xffff9d1a6ea0]

[bt] (2) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::RPCSession::AsyncCallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::RPCCode, tvm::runtime::TVMArgs)>)+0x58) [0xffff9d1ba368]

[bt] (1) /home/ubuntu/tvm/build/libtvm.so(tvm::runtime::LocalSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x74) [0xffff9d1ad9c4]

[bt] (0) /home/ubuntu/tvm/build/libtvm.so(+0xfbf140) [0xffff9d140140]

File "/home/ubuntu/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 81, in cfun

rv = local_pyfunc(*pyargs)

File "/home/ubuntu/tvm/python/tvm/rpc/server.py", line 69, in load_module

m = _load_module(path)

File "/home/ubuntu/tvm/python/tvm/runtime/module.py", line 411, in load_module

_cc.create_shared(path + ".so", files)

File "/home/ubuntu/tvm/python/tvm/contrib/cc.py", line 43, in create_shared

_linux_compile(output, objects, options, cc, compile_shared=True)

File "/home/ubuntu/tvm/python/tvm/contrib/cc.py", line 205, in _linux_compile

raise RuntimeError(msg)

File "/home/ljs/tvm/src/runtime/rpc/rpc_endpoint.cc", line 370

RPCError: Error caught from RPC call:

RuntimeError: Compilation error:

/tmp/tmpvh_4_v0b/net/lib0.o: error adding symbols: File in wrong format

collect2: error: ld returned 1 exit status

Command line: g++ -shared -fPIC -o /tmp/tmpvh_4_v0b/net.tar.so /tmp/tmpvh_4_v0b/net/lib0.o /tmp/tmpvh_4_v0b/net/devc.o
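
The linker message `File in wrong format` usually means that an object file was built for a different architecture than the one the linker targets. Here that would mean `lib0.o` does not match what `g++` on the board expects (for example, a 32-bit ARM target triple used against a 64-bit OS), though the log alone does not confirm this. A minimal sketch for checking it, assuming `lib0.o` has been extracted from the exported `net.tar`: the helper names `elf_machine` and `elf_machine_of_file` are made up for illustration and are not part of TVM; they only read the `e_machine` field of the ELF header.

```python
import struct

# e_machine values defined by the ELF specification (subset).
ELF_MACHINES = {3: "x86", 40: "arm", 62: "x86_64", 183: "aarch64"}


def elf_machine(header: bytes) -> str:
    """Return the architecture named in the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit field at byte offset 18.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown ({machine})")


def elf_machine_of_file(path: str) -> str:
    """Hypothetical convenience wrapper: read the header from a file on disk."""
    with open(path, "rb") as f:
        return elf_machine(f.read(20))
```

Calling `elf_machine_of_file("lib0.o")` and comparing the result with `platform.machine()` on the board would show whether the two agree. If they differ, the target string passed to the compiler on the host is the first thing to look at.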