Hi friends, I am working on a project that needs to combine TensorRT and Ansor tuning to boost a model's inference performance. I first tuned the model with Ansor and saved all of the tuning logs in a JSON file called best_ansor_tune.json. Then I used the following code to combine TensorRT with the Ansor tuning results, hoping to get better performance:

    mod, config = partition_for_tensorrt(mod, params, remove_no_mac_subgraphs=True)
    config['remove_no_mac_subgraphs'] = True
    with tvm.transform.PassContext(opt_level=3, config={"relay.ext.tensorrt.options": config}):
        mod = tensorrt.prune_tensorrt_subgraphs(mod)
    # exit(1)
    from tvm.relay.backend import compile_engine
    compile_engine.get().clear()
    with auto_scheduler.ApplyHistoryBest(log_file):
        with tvm.transform.PassContext(opt_level=3, config={'relay.ext.tensorrt.options': config, "relay.backend.use_auto_scheduler": True}):
            if debug_mode == 1:
                json, lib, param = relay.build(mod, target=target, params=params)
            else:
                lib = relay.build(mod, target=target, params=params)
    lib.export_library('deploy_trt_ansor_640_640_new.so')

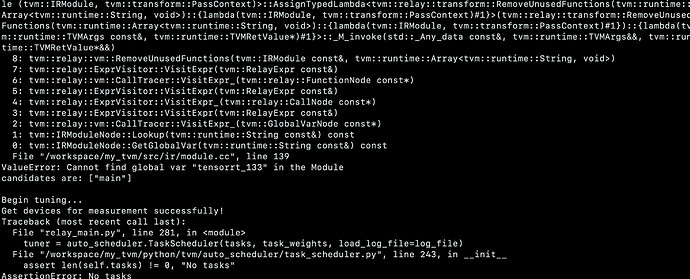

However, I got messages saying that some workloads cannot be found, which confuses me. I used the same method on another model, and it did not show any such warning. Does anyone know what is wrong here? Or do you know how to combine Ansor tuning and BYOC TensorRT correctly?
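To narrow this down, one thing that could be checked is which workload keys the tuning log actually contains. Below is a minimal sketch using only the Python standard library. It assumes each line of the log is one JSON record and that the workload key sits at `record["i"][0][0]`; that is my understanding of the auto_scheduler record layout and may not hold for every TVM version. The helper names `count_logged_workloads` and `load_workload_counts` are hypothetical and not part of TVM.

```python
import json
from collections import Counter


def count_logged_workloads(lines):
    """Count tuning records per workload key from an iterable of log lines."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        # Assumption: record["i"][0] describes the search task and its
        # first field is the workload key string.
        workload_key = record["i"][0][0]
        counts[workload_key] += 1
    return counts


def load_workload_counts(path):
    """Read a log file (e.g. best_ansor_tune.json) and count its workload keys."""
    with open(path) as f:
        return count_logged_workloads(f)
```

Calling `load_workload_counts("best_ansor_tune.json")` and comparing the returned keys with the ones named in the warning would show whether the missing workloads were ever tuned.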