Let’s discuss how UMA could be integrated into TVMC. I made a first brute-force attempt to identify the challenges.

CLI

A simple approach would be to give TVMC an additional argument, e.g. --uma-backends, that adds directories to scan for UMA backends. One issue is that this argument would have to be handled in two phases: the backends have to be loaded before the main TVMC argparser is built, so that the parser recognizes their target and target attribute options.
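To illustrate the two-phase handling, here is a minimal sketch using only the standard argparse module. It is not TVMC's actual parser: the function names, the `tvmc-sketch` program name and the `--target-<name>-option` flag are hypothetical, and only `--uma-backends` comes from the proposal above.

```python
import argparse


def parse_uma_dirs(argv):
    """Phase 1: pick out --uma-backends and pass everything else through."""
    pre = argparse.ArgumentParser(add_help=False, allow_abbrev=False)
    pre.add_argument("--uma-backends", action="append", default=[])
    known, remaining = pre.parse_known_args(argv)
    return known.uma_backends, remaining


def build_main_parser(backend_target_names):
    """Phase 2: build the full parser once the backend targets are known."""
    parser = argparse.ArgumentParser(prog="tvmc-sketch", allow_abbrev=False)
    parser.add_argument("--target", required=True)
    # Hypothetical: one attribute option per discovered backend target.
    for name in backend_target_names:
        parser.add_argument(f"--target-{name}-option", default=None)
    return parser


argv = [
    "--uma-backends", "./backends",
    "--target", "vanilla_accelerator,c",
    "--target-vanilla_accelerator-option", "x",
]
dirs, remaining = parse_uma_dirs(argv)
# A real implementation would scan `dirs` here; the name is hard-coded for the sketch.
args = build_main_parser(["vanilla_accelerator"]).parse_args(remaining)
```

The first parser ignores anything it does not know, so the second parser can still reject genuinely unknown options after the backends have contributed theirs.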

Every UMA backend found would be loaded as a Python module; its class would then be instantiated and registered with backend.register().
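As a rough sketch of such a loading step (not the uma_loader code credited in this post), the following scans a directory for Python files, imports each one, and instantiates and registers every class derived from a given base class. The function name `load_backends` and the `uma_ext_` module prefix are made up for illustration; in a real setup the base class would presumably be UMA's `UMABackend`, which is an assumption here and is passed in as a parameter so the sketch does not depend on TVM.

```python
import importlib.util
import inspect
from pathlib import Path


def load_backends(directory, base_class):
    """Import every *.py file in `directory`, then instantiate and register
    each class defined there that derives from `base_class`."""
    backends = []
    for path in sorted(Path(directory).glob("*.py")):
        spec = importlib.util.spec_from_file_location(f"uma_ext_{path.stem}", path)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)
        for _, cls in inspect.getmembers(module, inspect.isclass):
            # Skip the base class itself and classes merely imported into the module.
            if (
                issubclass(cls, base_class)
                and cls is not base_class
                and cls.__module__ == module.__name__
            ):
                backend = cls()  # assumes a no-argument constructor
                backend.register()
                backends.append(backend)
    return backends
```

Returning the instantiated backends lets the caller later decide which of them to use for partitioning.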

Example implementation (thanks to @cgerum for the uma_loader code):

Multiple targets

TVMC currently does not support multiple targets. Here we would need to discuss what the syntax for multiple targets should look like. For example, a partitioned UMA mod requires the following:

This syntax would then have to be implemented in the tvmc/target.py:parse_target function. As far as I understand, a string such as vanilla_accelerator,c would currently create tvm.target.Target("vanilla_accelerator", host=tvm.target.Target("c")). One suggestion would be ["c","vanilla_accelerator,c"]. Alternatively, we could make --target an action="append" argument so that it can be given multiple times.
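The action="append" alternative could look roughly like the sketch below. It uses plain argparse and does not touch TVMC's real parse_target. The helper `split_target` is hypothetical, and treating the part after the comma as the host follows my understanding described above, which may not match what TVMC actually does.

```python
import argparse

parser = argparse.ArgumentParser(prog="tvmc-sketch")
# Each occurrence of --target adds one entry to the list.
parser.add_argument("--target", action="append", default=[])


def split_target(spec):
    """Split 'device,host' into (device, host); host is None if absent."""
    device, _, host = spec.partition(",")
    return device, host or None


args = parser.parse_args(["--target", "c", "--target", "vanilla_accelerator,c"])
pairs = [split_target(t) for t in args.target]
```

Each pair could then be turned into a target object with an optional host, which keeps the existing single-target syntax valid when --target is passed only once.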

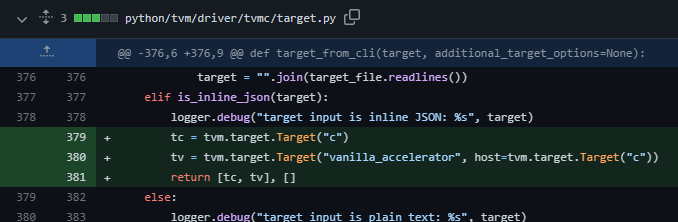

Instead, I implemented a simple hack for testing: if given the target string {}, I construct the multi-target manually. (Side note: the JSON string parsing does not work because target_host is undefined on that branch in target_from_cli.)

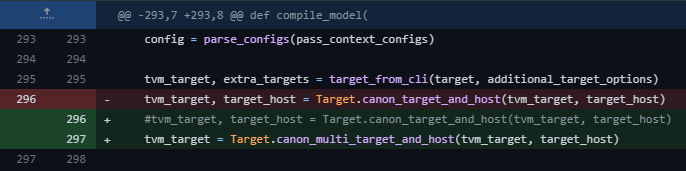

Additionally, compile_model needs to be able to handle this multi target:

Partitioning

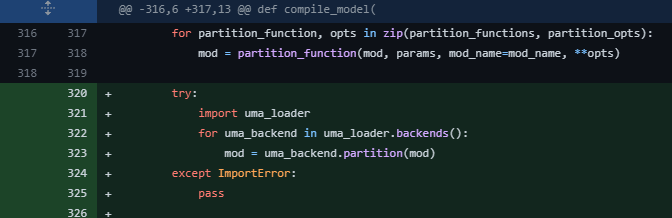

Finally, we need to invoke the mod = backend.partition(mod) interface of the UMA backends. For testing, this code just invokes them for all the backends, but of course we would only do this for the selected backends, which we could query from the selected targets.
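Restricting partitioning to the selected backends could be sketched as follows. The function `partition_for_selected` is hypothetical, and it assumes that each backend object exposes a `target_name` attribute alongside the `partition(mod)` method mentioned above.

```python
def partition_for_selected(mod, backends, selected_target_names):
    """Apply backend.partition(mod) only for backends whose target was selected."""
    selected = set(selected_target_names)
    for backend in backends:
        # Assumption: every backend reports the name of the target it registers.
        if backend.target_name in selected:
            mod = backend.partition(mod)
    return mod
```

The selected names would come from the parsed --target values, so backends that were merely discovered on disk leave the module untouched.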

I didn’t quite understand the extra_targets here. They seem to be treated similarly, by calling a partition_function, but as far as I understand, these targets are not properly registered in TVMC.

I tested this in our MLonMCU flow and everything seems to work fine. A given model is correctly partitioned and rewritten, and the generated C code uses the accelerator function.

https://github.com/tum-ei-eda/mlonmcu/compare/main...patch_uma

Please let me know your comments and suggestions.