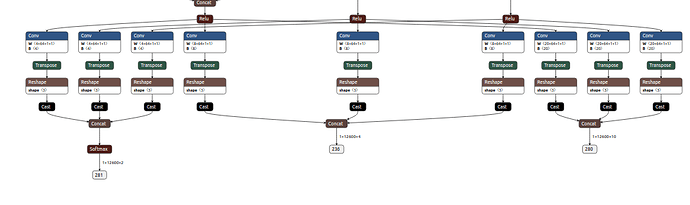

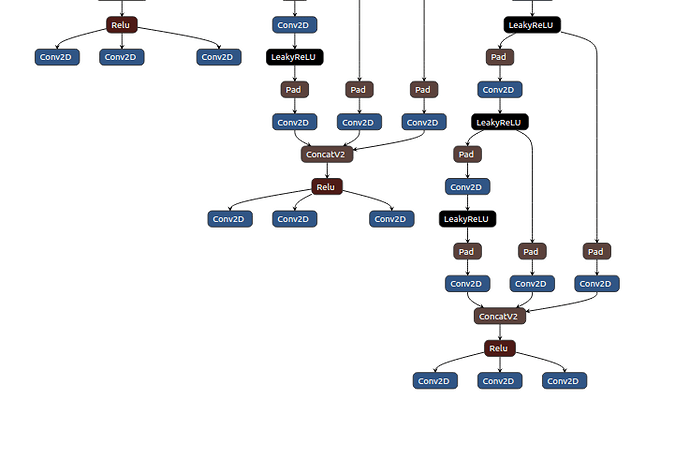

I am trying to port a face detector (RetinaFace) to the ZCU104 platform, and I am seeing an exception at the Vitis AI compilation stage. Please have a look at the error message below. From the error log, it looks like the Vitis AI compiler is not able to find the network output names. I debugged further and noticed that TVM graph generation is fine: the module created with `module = graph_runtime.GraphModule(lib["default"](ctx))` shows three outputs with the expected sizes. I have also included the TVM graph JSON below as a reference.
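
A quick way to sanity-check those output sizes, as a minimal sketch: it assumes (my reading of the fused `transpose_reshape_cast_..._conca...` function names and the usual RetinaFace head layout, not something confirmed by TVM or Vitis AI) that each of the nine NCHW head tensors coming out of `vitis_ai_0` has `A * k` channels, is transposed to NHWC, reshaped to `[N, H*W*A, k]`, and concatenated along axis 1. The helper `anchor_rows` is hypothetical.

```python
def anchor_rows(head_shapes, values_per_anchor):
    """Rows produced by flattening and concatenating RetinaFace-style heads.

    Assumption (not taken from TVM or Vitis AI): each head has NCHW shape
    [N, A * k, H, W] with k = values_per_anchor; it is transposed to NHWC,
    reshaped to [N, H * W * A, k], and all heads are concatenated on axis 1.
    """
    rows = 0
    for _n, channels, height, width in head_shapes:
        if channels % values_per_anchor != 0:
            raise ValueError(
                f"{channels} channels is not a multiple of {values_per_anchor}"
            )
        anchors = channels // values_per_anchor  # anchors per spatial location
        rows += height * width * anchors
    return rows


if __name__ == "__main__":
    # Shapes of the nine vitis_ai_0 outputs from the graph JSON in this post;
    # the bbox/scores/landmarks roles are assumed from the channel counts.
    bbox = [[1, 8, 60, 80], [1, 8, 30, 40], [1, 8, 15, 20]]
    scores = [[1, 4, 60, 80], [1, 4, 30, 40], [1, 4, 15, 20]]
    landmarks = [[1, 20, 60, 80], [1, 20, 30, 40], [1, 20, 15, 20]]
    print(anchor_rows(bbox, 4))        # 12600
    print(anchor_rows(scores, 2))      # 12600
    print(anchor_rows(landmarks, 10))  # 12600
```

With two anchors per location, each group gives 2 × (60·80 + 30·40 + 15·20) = 12600 rows, which matches the `[1, 12600, 4]`, `[1, 12600, 2]` and `[1, 12600, 10]` shapes in the graph.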

Error log:

    2020-12-04 05:49:12.550036: W tensorflow/contrib/decent_q/utils/quantize_utils.cc:848] [DECENT_WARNING] Cannot find quantize info file: /tmp/tmpb23g97c2//temp/Pad_18_aquant. Use default quantize info.
    2020-12-04 05:49:12.550064: W tensorflow/contrib/decent_q/utils/quantize_utils.cc:848] [DECENT_WARNING] Cannot find quantize info file: /tmp/tmpb23g97c2//temp/Pad_16_aquant. Use default quantize info.
    INFO: Generating Deploy Model...
    INFO: Deploy Model Generated.
    ********************* Quantization Summary *********************
    INFO: Output:
    quantize_eval_model: /tmp/tmpb23g97c2/quantize_eval_model.pb
    deploy_model: /tmp/tmpb23g97c2/deploy_model.pb
    /workspace/.local/lib/python3.6/site-packages/pyxir-0.1.3-py3.6-linux-x86_64.egg/pyxir/contrib/target/components/DPUCZDX8G/vai_c.py:61: UserWarning: This compilation only works with one network configuration at the moment!!
      warnings.warn("This compilation only works with one network"
    [VAI_C-BACKEND][Check Failed: dptconv_param->get_nonlinear_type() == 0 || dptconv_param->get_nonlinear_type() == 1 || dptconv_param->get_nonlinear_type() == 4][/home/xbuild/conda-bld/dnnc_1592904456005/work/submodules/asicv2com/src/SlNode/SlNodeDptConv.cpp:46][TYPE_UNMATCH][Unmatched type!]
    *** Check failure stack trace: ***
    Traceback (most recent call last):
      File "/opt/vitis_ai/conda/envs/vitis-ai-tensorflow/lib/python3.6/pdb.py", line 1667, in main
        pdb._runscript(mainpyfile)
      File "/opt/vitis_ai/conda/envs/vitis-ai-tensorflow/lib/python3.6/pdb.py", line 1548, in _runscript
        self.run(statement)
      File "/opt/vitis_ai/conda/envs/vitis-ai-tensorflow/lib/python3.6/bdb.py", line 434, in run
        exec(cmd, globals, locals)
      File "<string>", line 1, in <module>
      File "/workspace/test_mobilenet.py", line 157, in <module>
        module.run()
      File "/workspace/tvm/python/tvm/contrib/graph_runtime.py", line 206, in run
        self._run()
      File "tvm/_ffi/_cython/./packed_func.pxi", line 322, in tvm._ffi._cy3.core.PackedFuncBase.__call__
      File "tvm/_ffi/_cython/./packed_func.pxi", line 257, in tvm._ffi._cy3.core.FuncCall
      File "tvm/_ffi/_cython/./packed_func.pxi", line 246, in tvm._ffi._cy3.core.FuncCall3
      File "tvm/_ffi/_cython/./base.pxi", line 160, in tvm._ffi._cy3.core.CALL
    tvm._ffi.base.TVMError: AssertionError: Can't retrieve right out tensor names from DNNC compiler output

TVM Graph:

    {
      "nodes": [
        {
          "op": "null",
          "name": "input.1",
          "inputs": []
        },
        {
          "op": "tvm_op",
          "name": "vitis_ai_0",
          "attrs": {
            "num_outputs": "9",
            "num_inputs": "1",
            "func_name": "vitis_ai_0",
            "flatten_data": "0"
          },
          "inputs": [[0, 0, 0]]
        },
        {
          "op": "tvm_op",
          "name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944_",
          "attrs": {
            "num_outputs": "1",
            "num_inputs": "9",
            "func_name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944_",
            "flatten_data": "0"
          },
          "inputs": [[1, 0, 0], [1, 1, 0], [1, 2, 0], [1, 3, 0], [1, 4, 0], [1, 5, 0], [1, 6, 0], [1, 7, 0], [1, 8, 0]]
        },
        {
          "op": "tvm_op",
          "name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944__1",
          "attrs": {
            "num_outputs": "1",
            "num_inputs": "9",
            "func_name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944__1",
            "flatten_data": "0"
          },
          "inputs": [[1, 0, 0], [1, 1, 0], [1, 2, 0], [1, 3, 0], [1, 4, 0], [1, 5, 0], [1, 6, 0], [1, 7, 0], [1, 8, 0]]
        },
        {
          "op": "tvm_op",
          "name": "fused_nn_softmax",
          "attrs": {
            "num_outputs": "1",
            "num_inputs": "1",
            "func_name": "fused_nn_softmax",
            "flatten_data": "0"
          },
          "inputs": [[3, 0, 0]]
        },
        {
          "op": "tvm_op",
          "name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944__2",
          "attrs": {
            "num_outputs": "1",
            "num_inputs": "9",
            "func_name": "fused_transpose_reshape_cast_transpose_reshape_cast_transpose_reshape_cast_conca_5331883896003102944__2",
            "flatten_data": "0"
          },
          "inputs": [[1, 0, 0], [1, 1, 0], [1, 2, 0], [1, 3, 0], [1, 4, 0], [1, 5, 0], [1, 6, 0], [1, 7, 0], [1, 8, 0]]
        }
      ],
      "arg_nodes": [0],
      "heads": [[2, 0, 0], [4, 0, 0], [5, 0, 0]],
      "attrs": {
        "dltype": [
          "list_str",
          [
            "float32", "float32", "float32", "float32", "float32", "float32", "float32",
            "float32", "float32", "float32", "float32", "float32", "float32", "float32"
          ]
        ],
        "shape": [
          "list_shape",
          [
            [1, 3, 480, 640],
            [1, 8, 60, 80],
            [1, 8, 30, 40],
            [1, 8, 15, 20],
            [1, 4, 60, 80],
            [1, 4, 30, 40],
            [1, 4, 15, 20],
            [1, 20, 60, 80],
            [1, 20, 30, 40],
            [1, 20, 15, 20],
            [1, 12600, 4],
            [1, 12600, 2],
            [1, 12600, 2],
            [1, 12600, 10]
          ]
        ],
        "storage_id": [
          "list_int",
          [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 11]
        ]
      },
      "node_row_ptr": [0, 1, 10, 11, 12, 13, 14]
    }
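
To double-check which tensors this graph actually exposes as outputs, the JSON can be read with plain Python. This is a sketch that assumes the TVM graph-runtime JSON layout seen in this graph: `heads` holds `[node_id, output_index, version]` entries, and a node's outputs occupy consecutive entry slots starting at `node_row_ptr[node_id]`, which index into `attrs["shape"]` and `attrs["dltype"]`. The function name `list_graph_outputs` is hypothetical.

```python
import json


def list_graph_outputs(graph_json):
    """Return (node_name, output_index, shape, dtype) for each graph head.

    Assumes the TVM graph-runtime JSON layout: the outputs of node i occupy
    entry ids node_row_ptr[i], node_row_ptr[i] + 1, ..., and the per-entry
    shapes and dtypes are stored in attrs["shape"][1] and attrs["dltype"][1].
    """
    graph = json.loads(graph_json)
    nodes = graph["nodes"]
    row_ptr = graph["node_row_ptr"]
    shapes = graph["attrs"]["shape"][1]
    dtypes = graph["attrs"]["dltype"][1]

    outputs = []
    for head in graph["heads"]:
        node_id, out_index = head[0], head[1]  # third field is a version number
        entry_id = row_ptr[node_id] + out_index
        outputs.append(
            (nodes[node_id]["name"], out_index, shapes[entry_id], dtypes[entry_id])
        )
    return outputs
```

Applied to the graph above (for example `list_graph_outputs(open("graph.json").read())`, where the file name is hypothetical), the head entries map to entry ids 10, 12 and 13, so it should report three float32 outputs with shapes `[1, 12600, 4]`, `[1, 12600, 2]` (from `fused_nn_softmax`) and `[1, 12600, 10]`.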

I have also attached the graphs produced by the Vitis AI compiler and by TVM (from the ONNX model) to help analyze this further.

Please kindly help us with this.