Bug description:

When the model is inception_v3, the target is cuda, and the batch_size is relatively large (such as 30), the script below crashes.

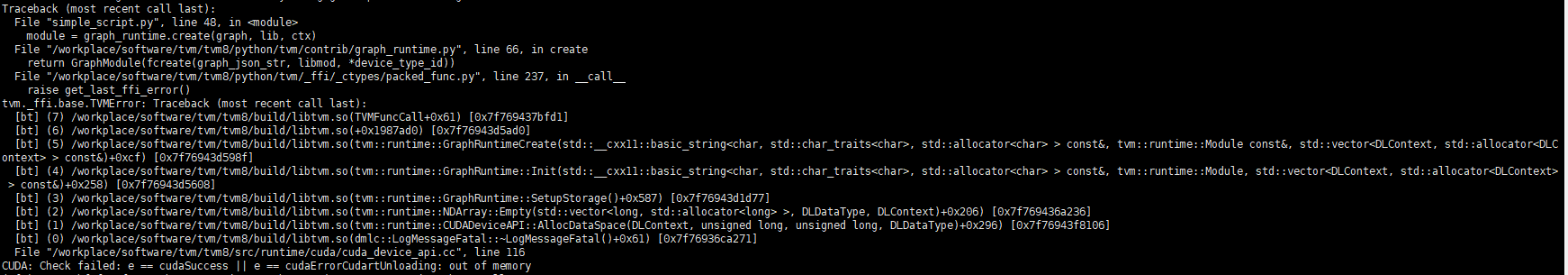

The statement graph_runtime.create(graph, lib, ctx) triggers this crash. The crash message reports out of memory (OOM), but the server's memory (125 GB) is large enough.
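This is a hedged aside, not a diagnosis. The OOM may refer to GPU device memory rather than host RAM. A GeForce GTX 1080 Ti has 11 GB of device memory, which is separate from the server's 125 GB, and activation buffers allocated on the device grow linearly with batch_size. The sketch below only does that arithmetic for two float32 tensors. The first is the model input. The second is the output of the first convolution, assuming the standard Keras InceptionV3 stem (a 3x3, stride-2, 32-filter convolution). It does not model TVM's memory planner.

```python
# Back-of-the-envelope float32 tensor sizes; plain arithmetic,
# not a model of TVM's graph memory planner.
BYTES_PER_FLOAT32 = 4


def tensor_mib(shape):
    """Size in MiB of a dense float32 tensor with the given shape."""
    num_elements = 1
    for dim in shape:
        num_elements *= dim
    return num_elements * BYTES_PER_FLOAT32 / 2**20


for batch in (1, 4, 25, 100):
    # Model input in NCHW layout, as in the script's shape_dict.
    inp = tensor_mib((batch, 3, 299, 299))
    # Assumed output of the first InceptionV3 convolution: 32 channels, 149x149.
    conv1 = tensor_mib((batch, 32, 149, 149))
    print(f"batch={batch:3d}  input={inp:7.1f} MiB  first_conv_out={conv1:7.1f} MiB")
```

Summing such sizes over all of the model's intermediate tensors would give a rough estimate to compare against the GPU's 11 GB. TVM's storage reuse may make the real figure smaller.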

Factors that trigger this bug:

- model: inception_v3-imagenet.h5

- batch_size: >20

- target: cuda

To confirm that the crash is not caused by other factors, three comparative experiments were carried out:

- experiment-1: change target to cpu and set batch_size to very large values (such as 30, 50, and 100) -> no crash. Only target=cuda triggers this bug!

- experiment-2: change to a different model -> no crash. Only **inception_v3** triggers this bug!

- experiment-3: change the batch_size -> when batch_size is very small (such as 1, 2, 3, 4), no crash. However, when batch_size is relatively large (such as 25, 30, 100), it crashes!
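Experiment-3 brackets the threshold between a batch size that works (4) and one that crashes (25). The hedged sketch below narrows that gap with a binary search. It assumes the crash is monotone in batch_size, meaning every size above the threshold crashes. The reported results suggest this but do not prove it. The predicate `crashes` is a placeholder. In practice it would be a hypothetical helper that runs the script's from_keras, build, and graph_runtime.create steps for one batch size and reports whether they failed.

```python
from typing import Callable


def smallest_failing_batch(crashes: Callable[[int], bool], ok: int, bad: int) -> int:
    """Return the smallest batch size in (ok, bad] for which crashes() is True.

    Preconditions (not re-checked, to avoid extra expensive builds):
    crashes(ok) is False, crashes(bad) is True, and crashes() is monotone.
    """
    while bad - ok > 1:
        mid = (ok + bad) // 2
        if crashes(mid):
            bad = mid  # mid crashes: threshold is at or below mid
        else:
            ok = mid   # mid works: threshold is above mid
    return bad


if __name__ == "__main__":
    # Stand-in predicate for illustration only; a real one would build and
    # create the TVM module for the given batch size.
    print(smallest_failing_batch(lambda b: b > 20, ok=4, bad=25))  # prints 21
```

Each probe costs one full build. Between 4 and 25, the search needs at most 5 builds, compared with up to 20 for a linear sweep.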

Crash messages:

Environment:

TVM: 0.8.dev0

OS: Ubuntu 16.04

GPU: GeForce GTX 1080 Ti

CUDA: 10.1

Mem: 125 GB (host RAM)

The reproducible script:

```python
import os

import keras
import numpy as np
from PIL import Image

import tvm
import tvm.relay as relay
from tvm.contrib import graph_runtime

input_tensor = 'input_6'  # name of the Keras model's input layer


def image_resize(x, shape):
    """Resize every image in the batch x to `shape` (width, height)."""
    x_return = []
    for x_test in x:
        tmp = np.copy(x_test)
        img = Image.fromarray(tmp.astype('uint8')).convert('RGB')
        img = img.resize(shape, Image.ANTIALIAS)
        x_return.append(np.array(img))
    return np.array(x_return)


input_precessor = keras.applications.vgg16.preprocess_input
input_shape = (32, 32)

dataset_dir = "/share_container/data/dataset/"
data_path = os.path.join(dataset_dir, "imagenet-val-1500.npz")
data = np.load(data_path)
x, y = data['x_test'], data['y_test']
x_resize = image_resize(np.copy(x), input_shape)
x_test = input_precessor(x_resize)
y_test = keras.utils.to_categorical(y, num_classes=1000)
# Note: x_test / y_test are not used below; the crash happens before any inference.

model_path = '/share_container/data/keras_model/inception_v3-imagenet_origin.h5'
predict_model = keras.models.load_model(model_path)
print(predict_model.input)
predict_model.summary()  # summary() prints itself and returns None

# With from_keras's default layout ("NCHW"), the input shape is (batch, channels, height, width).
shape_dict = {input_tensor: (25, 3, 299, 299)}
irmod, params = relay.frontend.from_keras(predict_model, shape_dict)

target = 'cuda'
ctx = tvm.gpu(0)
with tvm.transform.PassContext(opt_level=0, disabled_pass=None):
    graph, lib, params = relay.build_module.build(irmod, target, params=params)

module = graph_runtime.create(graph, lib, ctx)  # crash here !!!
```
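The script loads and preprocesses x_test but never runs inference, because the crash happens at graph_runtime.create. If that step succeeded, feeding the data would need two adjustments, sketched below under assumptions. First, the images would have to be resized to 299x299 rather than the script's input_shape of (32, 32). Second, the channels-last (NHWC) Keras batch would have to be transposed to the NCHW shape declared in shape_dict. The helper name `to_nchw_batch` is hypothetical, and a zero array stands in for the real data.

```python
import numpy as np


def to_nchw_batch(x_nhwc: np.ndarray, batch_size: int) -> np.ndarray:
    """Take the first `batch_size` images of an NHWC batch; return a contiguous NCHW float32 array."""
    batch = x_nhwc[:batch_size]
    # (N, H, W, C) -> (N, C, H, W); ascontiguousarray also converts to the requested dtype.
    return np.ascontiguousarray(batch.transpose(0, 3, 1, 2), dtype=np.float32)


# Dummy stand-in for x_test after resizing to 299x299.
x_dummy = np.zeros((30, 299, 299, 3), dtype=np.float32)
print(to_nchw_batch(x_dummy, 25).shape)  # (25, 3, 299, 299)
```

With the script's names, the result would then be passed as in TVM's Keras tutorial. First call module.set_input(**params), then module.set_input(input_tensor, tvm.nd.array(batch)), followed by module.run().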

You can download the model from this link: