I tried the sample code from the "Relay TensorRT Integration" page of the tvm 0.8.dev0 documentation.

I wanted to print the result of `gen_module.run(data=input_data)`, so I wrote `a = gen_module.run(data=input_data)` followed by `print(a)`, but it prints `None`.

How do I get the output result?

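You need to use `gen_module.get_output(0).numpy()` to get the output. `run()` only executes the graph and returns `None`; the results stay inside the module and are read back afterwards with `get_output(index)`.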
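A minimal sketch of that run-then-read pattern, assuming `module` is a `tvm.contrib.graph_executor.GraphModule` (or any object with the same `run`, `get_num_outputs` and `get_output` methods). The helper name `run_and_fetch` is hypothetical and not part of TVM.

```python
def run_and_fetch(module, **inputs):
    """Execute the graph, then read back every output (hypothetical helper)."""
    # run() only executes the graph and returns None, which is why print(a) shows None.
    module.run(**inputs)
    # The outputs stay in the module's buffers and are fetched by index afterwards.
    return [module.get_output(i) for i in range(module.get_num_outputs())]
```

With the documentation sample this would be used as `outputs = run_and_fetch(gen_module, data=input_data)`, followed by `outputs[0].numpy()` to get a NumPy array.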

Unfortunately, it doesn't work. I used the following: `output_shape = (1, 1000)` and `out = gen_module.get_output(0, tvm.nd.empty(output_shape))`. My TVM version is 0.8.

How didn't it work? What's the error message? Do you have a minimal reproducible example?

```python
""" Test resnet18 TVM+TensorRT directly """
import tvm
from tvm import relay

import numpy as np

from tvm.contrib.download import download_testdata

# Pytorch import
import torch
import torchvision

from PIL import Image

model_name = "resnet18"
model = getattr(torchvision.models, model_name)(pretrained=True)
model = model.eval()

# we grab the TorchScripted model via tracing
input_shape = [1, 3, 224, 224]
input_data = torch.randn(input_shape)
scripted_model = torch.jit.trace(model, input_data).eval()

img_name = "cat.png"
img = Image.open(img_name).resize((224, 224))

from torchvision import transforms

my_preprocess = transforms.Compose(
    [
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ]
)

img = my_preprocess(img)
# print(img.shape)
img = np.expand_dims(img, 0)
# print(img.shape)

# import the graph to Relay
input_name = "input0"
shape_list = [(input_name, img.shape)]
mod, params = relay.frontend.from_pytorch(scripted_model, shape_list)

# print(params)

from tvm.relay.op.contrib.tensorrt import partition_for_tensorrt

mod, config = partition_for_tensorrt(mod, params)
# print(mod)
# print(config)

target = "cuda"
with tvm.transform.PassContext(opt_level=3, config={"relay.ext.tensorrt.options": config}):
    lib = relay.build(mod, target=target, params=params)

# export the module
lib.export_library("resnet18_trt.so")

# ctx = tvm.gpu(0)
# load module
# dev = tvm.cuda(0)
dev = tvm.gpu(0)
loaded_lib = tvm.runtime.load_module("resnet18_trt.so")

gen_module = tvm.contrib.graph_executor.GraphModule(loaded_lib["default"](dev))

dtype = "float32"

input_data = np.random.uniform(0, 1, input_shape).astype(dtype)

output_shape = (1, 1000)

gen_module.run(data=img)

out = gen_module.get_output(0).numpy()
```

```
Traceback (most recent call last):
  File "resnet18_tensor.py", line 83, in <module>
    out = gen_module.get_output(0).numpy()
AttributeError: 'NDArray' object has no attribute 'numpy'
```

Hi @xinchen, I think you might not have installed the latest TVM from source. Could you try `gen_module.get_output(0).asnumpy()` instead?

It works, thank you. However, I rewrote the TensorRT sample to use a cat image as input, and the output is wrong. Could you take a look?
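The `AttributeError` comes from a naming difference between TVM builds: newer builds expose `NDArray.numpy()`, while older builds like the one used here only provide `NDArray.asnumpy()` (newer builds appear to keep `asnumpy()` only as a deprecated alias). If a script has to run on both, a small duck-typed fallback avoids hard-coding either name. `to_numpy` is a hypothetical helper, not a TVM function.

```python
def to_numpy(nd_array):
    """Convert a TVM NDArray to a NumPy array across TVM versions (hypothetical helper)."""
    # Newer TVM builds provide NDArray.numpy().
    if hasattr(nd_array, "numpy"):
        return nd_array.numpy()
    # Older builds (like the one in this thread) only provide asnumpy().
    return nd_array.asnumpy()
```

Usage would be `out = to_numpy(gen_module.get_output(0))`.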

```python
""" Test resnet18 TVM+TensorRT directly """
import tvm
from tvm import relay

import numpy as np

from tvm.contrib.download import download_testdata

# Pytorch import
import torch
import torchvision

from PIL import Image

model_name = "resnet18"
model = getattr(torchvision.models, model_name)(pretrained=True)
model = model.eval()

# we grab the TorchScripted model via tracing
input_shape = [1, 3, 224, 224]
input_data = torch.randn(input_shape)
scripted_model = torch.jit.trace(model, input_data).eval()

img_name = "cat.png"
img = Image.open(img_name).resize((224, 224))

from torchvision import transforms

my_preprocess = transforms.Compose(
    [
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ]
)

img = my_preprocess(img)
# print(img.shape)
img = np.expand_dims(img, 0)
# print(img.shape)

# import the graph to Relay
input_name = "input0"
shape_list = [(input_name, img.shape)]
mod, params = relay.frontend.from_pytorch(scripted_model, shape_list)

# print(params)

from tvm.relay.op.contrib.tensorrt import partition_for_tensorrt

mod, config = partition_for_tensorrt(mod, params)
# print(mod)
# print(config)

target = "cuda"
with tvm.transform.PassContext(opt_level=3, config={"relay.ext.tensorrt.options": config}):
    lib = relay.build(mod, target=target, params=params)

# export the module
lib.export_library("resnet18_trt.so")

# ctx = tvm.gpu(0)
# load module
# dev = tvm.cuda(0)
dev = tvm.gpu(0)
loaded_lib = tvm.runtime.load_module("resnet18_trt.so")

gen_module = tvm.contrib.graph_executor.GraphModule(loaded_lib["default"](dev))

dtype = "float32"

input_data = np.random.uniform(0, 1, input_shape).astype(dtype)

output_shape = (1, 1000)

gen_module.run(data=img)

out = gen_module.get_output(0).asnumpy()

# out = gen_module.get_output(0, tvm.nd.empty(output_shape))
# top1_tvm = np.argmax(out.asnumpy()[0])
top1_tvm = np.argmax(out[0])

synset_url = "".join(
    [
        "https://raw.githubusercontent.com/Cadene/",
        "pretrained-models.pytorch/master/data/",
        "imagenet_synsets.txt",
    ]
)
synset_name = "imagenet_synsets.txt"
synset_path = download_testdata(synset_url, synset_name, module="data")
with open(synset_path) as f:
    synsets = f.readlines()

synsets = [x.strip() for x in synsets]
splits = [line.split(" ") for line in synsets]
key_to_classname = {spl[0]: " ".join(spl[1:]) for spl in splits}
# print(key_to_classname)

class_url = "".join(
    [
        "https://raw.githubusercontent.com/Cadene/",
        "pretrained-models.pytorch/master/data/",
        "imagenet_classes.txt",
    ]
)
class_name = "imagenet_classes.txt"
class_path = download_testdata(class_url, class_name, module="data")
with open(class_path) as f:
    class_id_to_key = f.readlines()

class_id_to_key = [x.strip() for x in class_id_to_key]

tvm_class_key = class_id_to_key[top1_tvm]

print("Relay top-1 id: {}, class name: {}".format(top1_tvm, key_to_classname[tvm_class_key]))
```
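When the top-1 label looks wrong, one way to narrow it down (a suggestion, not something tried in this thread) is to feed the same preprocessed `img` to the original PyTorch model and compare the two logit vectors. The sketch below only needs NumPy. `compare_logits` is a hypothetical helper, and the reference logits are assumed to come from something like `scripted_model(torch.from_numpy(img)).detach().numpy()`.

```python
import numpy as np


def compare_logits(tvm_logits, ref_logits, k=5):
    """Summarize how far TVM output is from a reference output (hypothetical helper)."""
    tvm_logits = np.asarray(tvm_logits, dtype=np.float32).reshape(-1)
    ref_logits = np.asarray(ref_logits, dtype=np.float32).reshape(-1)
    # Indices of the k largest logits, highest first.
    tvm_topk = np.argsort(tvm_logits)[::-1][:k]
    ref_topk = np.argsort(ref_logits)[::-1][:k]
    return {
        "max_abs_diff": float(np.max(np.abs(tvm_logits - ref_logits))),
        "top1_match": bool(tvm_topk[0] == ref_topk[0]),
        "tvm_topk": tvm_topk.tolist(),
        "ref_topk": ref_topk.tolist(),
    }
```

If the two top-k lists agree and `max_abs_diff` is small, the TVM+TensorRT module matches PyTorch and the issue is more likely in preprocessing or label mapping; if they disagree widely, the compiled module is probably not receiving the intended input.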

Sounds good, I’d like to help!

Can you please clean up your code a little so that the new lines and indentation are correct (some lines of code are squeezed onto the same line), and use the preformatted text button to wrap your Python code? It makes the code more readable on this platform and makes it easier for us to copy, paste, and run it.

One question: where did you download your `cat.png`?

```python
""" Test resnet18 TVM+TensorRT directly """
import tvm
from tvm import relay

import numpy as np

from tvm.contrib.download import download_testdata

import torch
import torchvision

from PIL import Image

model_name = "resnet18"
model = getattr(torchvision.models, model_name)(pretrained=True)
model = model.eval()
input_shape = [1, 3, 224, 224]

input_data = torch.randn(input_shape)
scripted_model = torch.jit.trace(model, input_data).eval()

img_url = "https://github.com/dmlc/mxnet.js/blob/main/data/cat.png?raw=true"
img_path = download_testdata(img_url, "cat.png", module="data")
img = Image.open(img_path).resize((224, 224))

from torchvision import transforms

my_preprocess = transforms.Compose(
    [
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ]
)

img = my_preprocess(img)
img = np.expand_dims(img, 0)

# import the graph to Relay
input_name = "input0"
shape_list = [(input_name, img.shape)]
mod, params = relay.frontend.from_pytorch(scripted_model, shape_list)

# print(params)

from tvm.relay.op.contrib.tensorrt import partition_for_tensorrt

mod, config = partition_for_tensorrt(mod, params)
# print(mod)
# print(config)

target = "cuda"
with tvm.transform.PassContext(opt_level=3, config={"relay.ext.tensorrt.options": config}):
    lib = relay.build(mod, target=target, params=params)

lib.export_library("resnet18_trt.so")

# ctx = tvm.gpu(0)
# load module
# dev = tvm.cuda(0)
dev = tvm.gpu(0)
loaded_lib = tvm.runtime.load_module("resnet18_trt.so")

gen_module = tvm.contrib.graph_executor.GraphModule(loaded_lib["default"](dev))

dtype = "float32"

input_data = np.random.uniform(0, 1, input_shape).astype(dtype)

output_shape = (1, 1000)

gen_module.run(data=img)

out = gen_module.get_output(0).asnumpy()

top1_tvm = np.argmax(out[0])

synset_url = "".join(
    [
        "https://raw.githubusercontent.com/Cadene/",
        "pretrained-models.pytorch/master/data/",
        "imagenet_synsets.txt",
    ]
)
synset_name = "imagenet_synsets.txt"
synset_path = download_testdata(synset_url, synset_name, module="data")
with open(synset_path) as f:
    synsets = f.readlines()

synsets = [x.strip() for x in synsets]
splits = [line.split(" ") for line in synsets]
key_to_classname = {spl[0]: " ".join(spl[1:]) for spl in splits}
# print(key_to_classname)

class_url = "".join(
    [
        "https://raw.githubusercontent.com/Cadene/",
        "pretrained-models.pytorch/master/data/",
        "imagenet_classes.txt",
    ]
)
class_name = "imagenet_classes.txt"
class_path = download_testdata(class_url, class_name, module="data")
with open(class_path) as f:
    class_id_to_key = f.readlines()

class_id_to_key = [x.strip() for x in class_id_to_key]

tvm_class_key = class_id_to_key[top1_tvm]

print("Relay top-1 id: {}, class name: {}".format(top1_tvm, key_to_classname[tvm_class_key]))
```
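One thing worth checking, offered as a likely cause rather than a confirmed diagnosis: the graph is imported with the input name `input0` (from `shape_list`), but the script calls `gen_module.run(data=img)`. In the TVM 0.8-era Python `GraphModule.set_input`, keyword inputs whose name is not found in the graph appear to be skipped without raising, so the module may run on an input buffer that never received the image. Passing the image under the graph's own name, e.g. `gen_module.set_input("input0", img)` followed by `gen_module.run()`, avoids that. The guard below is a hypothetical helper (not a TVM API) that catches such mismatches before calling `run`, using only the script's `shape_list`.

```python
def check_input_names(shape_list, inputs):
    """Raise if the names passed to run() don't match the names used at import time.

    shape_list: the [(name, shape), ...] list given to relay.frontend.from_pytorch.
    inputs: dict of the keyword arguments that will be passed to GraphModule.run().
    """
    expected = {name for name, _ in shape_list}
    unknown = sorted(set(inputs) - expected)   # names the graph does not have
    missing = sorted(expected - set(inputs))   # graph inputs left unset
    if unknown or missing:
        raise ValueError(
            f"input name mismatch: unknown={unknown}, missing={missing}, "
            f"expected={sorted(expected)}"
        )
    return inputs
```

With the script above, `check_input_names(shape_list, {"data": img})` raises a `ValueError` reporting `unknown=['data']` and `missing=['input0']`, while `gen_module.run(**check_input_names(shape_list, {"input0": img}))` passes the image under the graph's own input name.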

@yuchenj, thank you for your kind offer. I have reorganized my code; please take a look. I tried to find a way to paste code or insert an attachment, but I couldn't. If there are still formatting errors, please tell me your email and I can send you the Python file directly.

@xinchen, no worries at all! I will take a look when I am free tomorrow.

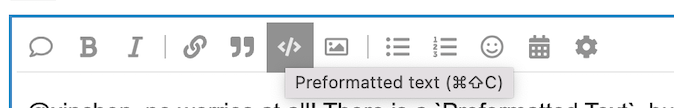

There is a Preformatted Text button as shown in the screenshot below, which you can use to enclose your code and your code will be displayed well in this forum. Also, feel free to share code using tools like github gist.

Hi @xinchen, I found it hard to turn your code into something runnable because there are a few indentation issues and invalid characters such as “ and ”. Would you mind trying the preformatted feature, or sharing your code via a gist or sending it directly to my email (yuchenj@cs.washington.edu)?
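The “ and ” characters appear when code is posted outside a preformatted block and the forum converts straight quotes into typographic ones. For code that has already been mangled this way, a minimal sketch of a workaround is to map the curly quotes back to ASCII before running it. `normalize_quotes` is a hypothetical helper; it does not restore lost line breaks or indentation, which still have to be fixed by hand.

```python
# Map typographic quotes back to the ASCII quotes Python expects.
_QUOTE_MAP = str.maketrans({
    "\u201c": '"',  # left double quotation mark
    "\u201d": '"',  # right double quotation mark
    "\u2018": "'",  # left single quotation mark
    "\u2019": "'",  # right single quotation mark
})


def normalize_quotes(source):
    """Replace curly quotes introduced by forum formatting (hypothetical helper)."""
    return source.translate(_QUOTE_MAP)
```

For example, `normalize_quotes('target = “cuda”')` gives `'target = "cuda"'`. Note that it also rewrites apostrophes inside comments and string literals, which is usually harmless.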