Hi everyone,

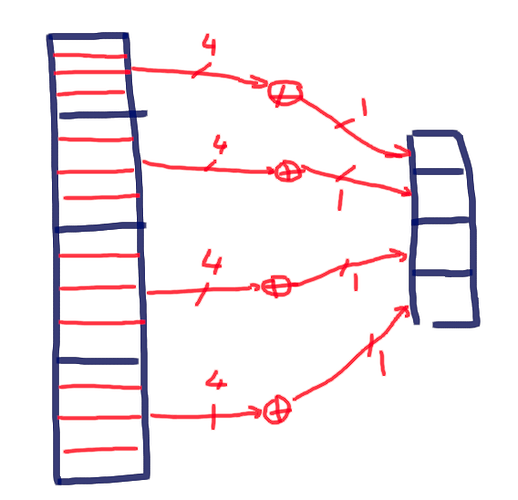

I’m trying to write a TensorIR program to reduce a 16 element vector piece-wise into a 4 element vector. Here an drawing:
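Here is a plain-Python reference that pins down the intended semantics. It is not TVM code, and the function name `piecewise_reduce_ref` is made up for illustration. It assumes the mapping implied by the index `i0 + i1*4` in the script below, i.e. `B[i0] = A[i0] + A[i0 + 4] + A[i0 + 8] + A[i0 + 12]`. It runs the loop nest in both orders. Because each `B[i0]` still sees its additions in the same `i1` order, swapping the loops should not change the result.

```python
def piecewise_reduce_ref(a, parts=4, reordered=False):
    """Reduce len(a) values into `parts` sums: b[i0] = sum over i1 of a[i0 + i1 * parts]."""
    n = len(a)
    assert n % parts == 0, "input length must be a multiple of the output length"
    steps = n // parts
    b = [0.0] * parts
    if reordered:
        # Reduction loop outside, spatial loop inside (what reorder(l1, l0) asks for).
        order = ((i0, i1) for i1 in range(steps) for i0 in range(parts))
    else:
        # Spatial loop outside, reduction loop inside (the original T.grid order).
        order = ((i0, i1) for i0 in range(parts) for i1 in range(steps))
    for i0, i1 in order:
        b[i0] += a[i0 + i1 * parts]
    return b


a = [float(x) for x in range(16)]
print(piecewise_reduce_ref(a))                  # [24.0, 28.0, 32.0, 36.0]
print(piecewise_reduce_ref(a, reordered=True))  # [24.0, 28.0, 32.0, 36.0]
```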

Here is the TVM Script code for this:

```python
from tvm import tir
from tvm.script import tir as T


@T.prim_func
def reduce(input0: T.handle, output0: T.handle) -> None:
    A = T.match_buffer(input0, (16,), dtype="float32")
    B = T.match_buffer(output0, (4,), dtype="float32")
    for i0, i1 in T.grid(4, 4):
        with T.block("piecewise-reduce"):
            vi0 = T.axis.spatial(4, i0)
            vi1 = T.axis.reduce(4, i0 + i1 * 4)
            with T.init():
                B[vi0] = 0.0
            B[vi0] = B[vi0] + A[vi1]


sch = tir.Schedule(reduce)
block = sch.get_block("piecewise-reduce")
l0, l1 = sch.get_loops(block)
sch.reorder(l1, l0)
```

The issue comes up when calling `sch.reorder(l1, l0)`, which throws the following error (full error message at the bottom):

> The block tir.Block#0 is required to have an affine binding
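My guess at what the check is complaining about is an assumption on my part, not something I found in the TVM docs. `vi1` is declared with extent 4, but its binding `i0 + i1*4` reuses `i0`, which already drives `vi0`, and it takes values up to 15. The snippet below enumerates the 4x4 loop nest in plain Python to make that visible. The `affine` lambda is a hypothetical alternative binding where `vi1 = i1` and the stride moves into the buffer access `A[vi0 + vi1*4]`.

```python
from itertools import product


def bindings(binding):
    """Collect (vi0, vi1) for every (i0, i1) iteration of the 4x4 loop nest."""
    return [binding(i0, i1) for i0, i1 in product(range(4), range(4))]


# Binding from the script: vi0 = i0, vi1 = i0 + i1 * 4 (declared extent of vi1 is 4).
original = bindings(lambda i0, i1: (i0, i0 + i1 * 4))
# Hypothetical alternative: each block var depends on exactly one loop var.
affine = bindings(lambda i0, i1: (i0, i1))

# vi1 leaves its declared domain [0, 4) with the original binding ...
print(max(vi1 for _, vi1 in original))  # 15
# ... but stays inside it with the alternative one.
print(max(vi1 for _, vi1 in affine))    # 3

# With vi1 = i1, indexing A[vi0 + vi1 * 4] still touches each element of A exactly once.
print(sorted(vi0 + vi1 * 4 for vi0, vi1 in affine) == list(range(16)))  # True
```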

Here are my questions:

- Does anyone see an issue in the TIR script?
- Is there a better way to describe the reduction I'm intending to do? I definitely want to call scheduling primitives like `reorder` on it afterwards.
- Why does `reorder` not work here? `vi0` and `vi1` are kind of independent, aren't they?

Btw, the example is boiled down from a bigger TVM Script program (with way more axes); the `sch.reorder` call here is just a demo.

Thanks in advance,

Michael

CC: @Hzfengsy @tqchen @jinhongyii @MasterJH5574 @yzh119

```
  File "/usr/tvm/python/tvm/tir/schedule/_type_checker.py", line 340, in wrap
    return func(*args, **kwargs)
  File "/usr/tvm/python/tvm/tir/schedule/schedule.py", line 874, in reorder
    _ffi_api.ScheduleReorder(self, ordered_loops) # type: ignore # pylint: disable=no-member
  File "tvm/_ffi/_cython/./packed_func.pxi", line 332, in tvm._ffi._cy3.core.PackedFuncBase.__call__
  File "tvm/_ffi/_cython/./packed_func.pxi", line 263, in tvm._ffi._cy3.core.FuncCall
  File "tvm/_ffi/_cython/./packed_func.pxi", line 252, in tvm._ffi._cy3.core.FuncCall3
  File "tvm/_ffi/_cython/./base.pxi", line 182, in tvm._ffi._cy3.core.CHECK_CALL
  File "/usr/tvm/python/tvm/_ffi/base.py", line 476, in raise_last_ffi_error
    raise py_err
tvm.tir.schedule.schedule.ScheduleError: Traceback (most recent call last):
  2: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::TypedPackedFunc<void (tvm::tir::Schedule, tvm::runtime::Array<tvm::tir::LoopRV, void> const&)>::AssignTypedLambda<tvm::runtime::Registry::set_body_method<tvm::tir::Schedule, tvm::tir::ScheduleNode, void, tvm::runtime::Array<tvm::tir::LoopRV, void> const&, void>(void (tvm::tir::ScheduleNode::*)(tvm::runtime::Array<tvm::tir::LoopRV, void> const&))::{lambda(tvm::tir::Schedule, tvm::runtime::Array<tvm::tir::LoopRV, void> const&)#1}>(tvm::runtime::Registry::set_body_method<tvm::tir::Schedule, tvm::tir::ScheduleNode, void, tvm::runtime::Array<tvm::tir::LoopRV, void> const&, void>(void (tvm::tir::ScheduleNode::*)(tvm::runtime::Array<tvm::tir::LoopRV, void> const&))::{lambda(tvm::tir::Schedule, tvm::runtime::Array<tvm::tir::LoopRV, void> const&)#1}, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >)::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*)#1}> >::Call(tvm::runtime::PackedFuncObj const*, tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)
  1: tvm::tir::TracedScheduleNode::Reorder(tvm::runtime::Array<tvm::tir::LoopRV, void> const&)
  0: tvm::tir::ConcreteScheduleNode::Reorder(tvm::runtime::Array<tvm::tir::LoopRV, void> const&)
ScheduleError: An error occurred in the schedule primitive 'reorder'.
The IR with diagnostic is:
# from tvm.script import ir as I
# from tvm.script import tir as T

@I.ir_module
class Module:
    @T.prim_func
    def main(input0: T.handle, output0: T.handle):
        T.func_attr({"global_symbol": "reduce"})
        A = T.match_buffer(input0, (16,))
        B = T.match_buffer(output0, (16,))
        with T.block("root"):
            T.reads()
            T.writes()
            for i0 in range(4):
                for i1 in range(4):
                    # tir.Block#0
                    with T.block("reduce"):
                    ^^^^^^^^^^^^^^^^^^^^^^^
                        vi0 = T.axis.spatial(4, i0)
                        ^^^^^^^^^^^^^^^^^^^^^^^^^^^
                        vi1 = T.axis.reduce(4, i0 + i1 * 4)
                        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
                        T.reads(A[vi1])
                        ^^^^^^^^^^^^^^^
                        T.writes(B[vi0])
                        ^^^^^^^^^^^^^^^^
                        with T.init():
                        ^^^^^^^^^^^^^^
                            B[vi0] = T.float32(0)
                            ^^^^^^^^^^^^^^^^^^^^^
                        B[vi0] = B[vi0] + A[vi1]
                        ^^^^^^^^^^^^^^^^^^^^^^^^
Error message: The block tir.Block#0 is required to have an affine binding
```