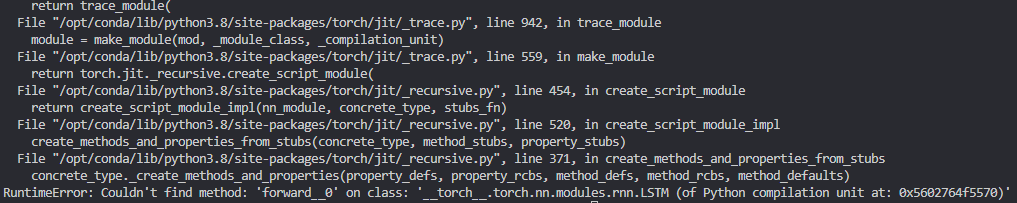

Hi Sirs, I’m having some issues trying to infer Tacotron2 using TVM: First, I want to import the model with torch frontend, but I have this problem with torch.jit.trace:

So I switched to the ONNX frontend, and I was able to run inference on the model successfully. However, the accuracy seems worse than with TensorRT. I narrowed the problem down to the inference of Tacotron2_decoder.onnx: on some text samples, its output is much worse than TensorRT's.

So, does TVM support Tacotron2? Any other suggestions? Looking forward to your help. Thanks!

The model I use: DeepLearningExamples/PyTorch/SpeechSynthesis/Tacotron2 at master · NVIDIA/DeepLearningExamples · GitHub
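The Tacotron2 decoder is autoregressive, since each step consumes the previous mel frame. Because of this, a small numerical difference at one step can grow over later steps or change the step at which the stop gate fires. One way to narrow this down is to dump the per-step decoder outputs from TVM and from TensorRT for the same input text, then find the first step where they diverge. The following is a minimal sketch that assumes you already have both runs as lists of frames (each a list of floats). The helper names `max_abs_diff`, `cosine_similarity`, and `first_divergent_step`, and the `atol` default, are hypothetical and not part of TVM or TensorRT.

```python
import math
from typing import List, Optional, Sequence


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two equal-length frames."""
    if len(a) != len(b):
        raise ValueError(f"frame sizes differ: {len(a)} vs {len(b)}")
    return max((abs(x - y) for x, y in zip(a, b)), default=0.0)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Scale-independent similarity of two frames; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Two all-zero frames count as identical; zero vs non-zero as unrelated.
        return 1.0 if norm_a == norm_b else 0.0
    return dot / (norm_a * norm_b)


def first_divergent_step(
    tvm_frames: List[Sequence[float]],
    ref_frames: List[Sequence[float]],
    atol: float = 1e-3,  # hypothetical tolerance; tune for your data
) -> Optional[int]:
    """Return the index of the first decoder step whose output differs from
    the reference by more than `atol`.

    If all shared steps match but the runs have different lengths (e.g. the
    stop gate fired at a different step), the first step produced by only
    one backend is returned. Returns None if both runs fully match.
    """
    for step, (t, r) in enumerate(zip(tvm_frames, ref_frames)):
        if max_abs_diff(t, r) > atol:
            return step
    if len(tvm_frames) != len(ref_frames):
        return min(len(tvm_frames), len(ref_frames))
    return None


if __name__ == "__main__":
    # Toy 2-value "frames" standing in for real mel frames.
    ref = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
    tvm = [[0.0, 1.0], [0.5, 0.5004], [1.2, 0.0]]
    print(first_divergent_step(tvm, ref))  # 2
```

Once the failing step is known, comparing the recurrent state fed into that step for both backends can show whether the mismatch starts there or was carried over from earlier steps.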