First off, the question: Is it possible to run a model compiled for VTA on the PYNQ board locally, instead of using RPC?

So if I create the graph runtime on my host machine with:

# Some setup code

remote = rpc.connect(ip, port)

vta.reconfig_runtime(remote)

vta.program_fpga(remote)

remote.upload("./lib.tar")

lib = remote.load_module("lib.tar")

ctx = remote.ext_dev(0)

m = graph_runtime.create(graph, lib, ctx)

# load params, input and execute model...

everything works fine and the model is executed as expected.

I then tried to write a Python script that I can run directly on the PYNQ board instead of going through RPC:

# Some setup code

lib = tvm.module.load("./lib.tar")

ctx = tvm.ext_dev(0)

m = graph_runtime.create(graph, lib, ctx)

# load params, input and execute model...

When calling graph_runtime.create(..), I get the following error:

TVMError: Check failed: allow_missing: Device API ext_dev is not enabled.

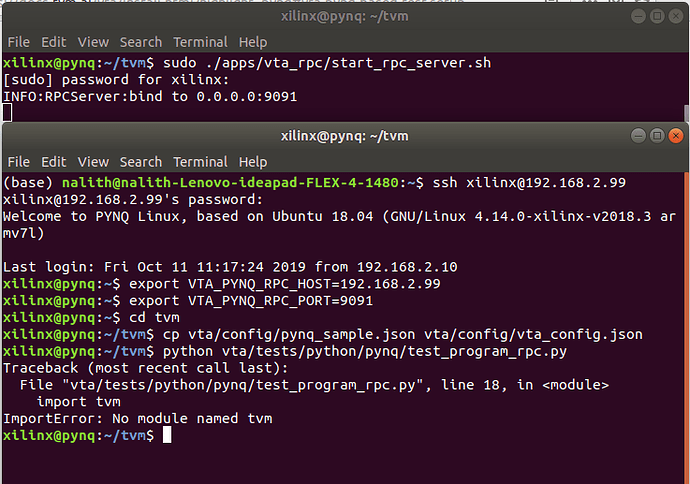

I compiled the TVM runtime and VTA on the PYNQ as described in this tutorial: https://docs.tvm.ai/vta/install.html#pynq-side-rpc-server-build-deployment

Do I have to enable any additional features to use tvm.ext_dev(0) on the PYNQ?
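One way to narrow this down is sketched below. As far as I understand, TVM resolves a device type by looking up a global function named device_api.<name>, and for VTA that function lives in the VTA runtime library (libvta.so), which the RPC server loads into its process but a standalone script does not load on its own. The helper names (load_runtime_library, device_api_enabled, check_local_vta) and the library path are my own assumptions, not part of TVM or VTA, so treat this as a diagnostic sketch rather than a confirmed fix.

```python
import ctypes
import os


def load_runtime_library(path):
    """Load a shared library with globally visible symbols.

    Returns the ctypes handle, or None if the file does not exist.
    """
    if not os.path.isfile(path):
        return None
    return ctypes.CDLL(path, ctypes.RTLD_GLOBAL)


def device_api_enabled(get_global_func, device_name="ext_dev"):
    """Return True if a function named device_api.<device_name> is registered.

    get_global_func is expected to behave like tvm.get_global_func(name,
    allow_missing): it returns None when the name is not registered.
    """
    return get_global_func("device_api." + device_name, True) is not None


def check_local_vta(libvta_path):
    """Hypothetical helper: load libvta.so, then report whether ext_dev is visible.

    libvta_path is an assumption; point it at wherever your build placed libvta.so.
    """
    import tvm  # imported lazily so the helpers above work without TVM installed

    handle = load_runtime_library(libvta_path)
    return handle, device_api_enabled(tvm.get_global_func)
```

On the board you would call check_local_vta("<path to libvta.so>") before tvm.ext_dev(0). If the second return value is still False after the library loads, the device API is probably not being registered by that library and the build itself needs another look.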

glad that it worked out
