I work for an MCU vendor and am considering using microTVM to deploy ML models on MCUs. However, I’m a bit confused about the concept of standalone execution.

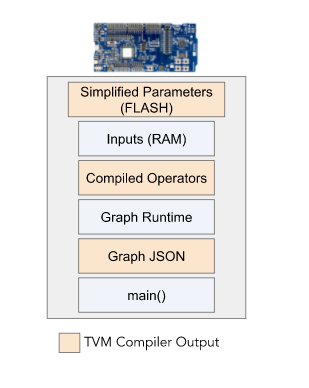

As an MCU vendor, we would like to see standalone execution solely on MCUs using microTVM. The image above shows the concept of standalone execution as presented in the official docs.

I have a few questions about this.

- Where is ‘microTVM’ in this image? I’m not sure exactly what ‘microTVM’ means or how it is involved in generating the binary for an MCU. If ‘microTVM’ refers to the mechanism that generates the binary, rather than to the code or binary stored on the MCU, then what is the thing people call the ‘microTVM runtime’?

- I’m also confused about the difference between TVM and microTVM. TVM can generate the same components (Simplified Parameters, Compiled Operators, and Graph JSON), right? I’d like to know how the artifacts produced by TVM differ from those produced by microTVM.

- According to the presentation “TVM Community Meetup - AOT” (Google Slides), the difference between compiling with and without AOT lies in the generated C code. To my understanding, AOT lets us drop the Graph Runtime and Graph JSON from the list of things stored on the MCU. Instead, a different kind of C code is generated that effectively combines the compiled operators, the Graph Runtime, and the Graph JSON. All we need to run inference is then this C code (Compiled Operators), the Simplified Parameters, the inputs, device initialization code, and application code (whatever lives in main). We just download these onto the MCU. Is my understanding correct?
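To make the question concrete, here is a toy Python sketch of the distinction as I understand it. It does not use any TVM API: the operator functions, the `GRAPH_JSON` layout, and `generate_aot_source` are all hypothetical stand-ins. The first path parses a graph description at run time and dispatches each node (the Graph Runtime style). The second turns the same graph into straight-line code ahead of time, so no graph parser or JSON is needed when the model runs.

```python
import json

# Toy "compiled operators". In TVM these would be generated C functions.
def op_add_one(x):
    return [v + 1 for v in x]

def op_double(x):
    return [v * 2 for v in x]

OPERATORS = {"add_one": op_add_one, "double": op_double}

# Toy "graph JSON" (hypothetical layout): the order in which operators run.
GRAPH_JSON = json.dumps({"nodes": [{"op": "add_one"}, {"op": "double"}]})

def run_with_graph_runtime(graph_json, x):
    """Non-AOT style: parse the graph at run time and dispatch each node."""
    for node in json.loads(graph_json)["nodes"]:
        x = OPERATORS[node["op"]](x)
    return x

def generate_aot_source(graph_json):
    """AOT style: emit straight-line code for the graph ahead of time."""
    lines = ["def run_model(x):"]
    for node in json.loads(graph_json)["nodes"]:
        lines.append(f"    x = op_{node['op']}(x)")
    lines.append("    return x")
    return "\n".join(lines)

aot_source = generate_aot_source(GRAPH_JSON)

# exec() stands in for compiling the generated C code with a cross compiler.
namespace = {"op_add_one": op_add_one, "op_double": op_double}
exec(aot_source, namespace)
run_model = namespace["run_model"]

print(aot_source)
print(run_with_graph_runtime(GRAPH_JSON, [1, 2, 3]))
print(run_model([1, 2, 3]))
```

Both paths compute the same result, but only the first one needs the graph description and a parser at run time. That is the part I assume AOT removes from what gets stored on the MCU.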

- Regarding the test file tvm/tests/micro/zephyr/test_zephyr.py (https://github.com/apache/tvm/tree/main/tests/micro/zephyr): as the README suggests, I ran:

$ cd tvm/apps/microtvm/

$ poetry lock && poetry install && poetry shell

$ cd tvm/tests/micro/zephyr

$ pytest test_zephyr.py --zephyr-board=qemu_x86

But I got the following error:

ImportError while loading conftest '/home/ubuntu/workspace/tvm/conftest.py'.

../../../conftest.py:20: in <module>

import tvm

../../../python/tvm/__init__.py:26: in <module>

from ._ffi.base import TVMError, __version__

../../../python/tvm/_ffi/__init__.py:28: in <module>

from .base import register_error

../../../python/tvm/_ffi/base.py:71: in <module>

_LIB, _LIB_NAME = _load_lib()

../../../python/tvm/_ffi/base.py:51: in _load_lib

lib_path = libinfo.find_lib_path()

../../../python/tvm/_ffi/libinfo.py:146: in find_lib_path

raise RuntimeError(message)

E RuntimeError: Cannot find the files.

E List of candidates:

E /home/ubuntu/.cache/pypoetry/virtualenvs/microtvm-vg_j_zxI-py3.8/bin/libtvm.so

E /home/ubuntu/.poetry/bin/libtvm.so

E /usr/local/sbin/libtvm.so

E /usr/local/bin/libtvm.so

E /usr/sbin/libtvm.so

E /usr/bin/libtvm.so

E /usr/sbin/libtvm.so

E /usr/bin/libtvm.so

E /usr/games/libtvm.so

E /usr/local/games/libtvm.so

E /snap/bin/libtvm.so

E /home/ubuntu/workspace/tvm/python/tvm/libtvm.so

E /home/ubuntu/workspace/libtvm.so

E /home/ubuntu/.cache/pypoetry/virtualenvs/microtvm-vg_j_zxI-py3.8/bin/libtvm_runtime.so

E /home/ubuntu/.poetry/bin/libtvm_runtime.so

E /usr/local/sbin/libtvm_runtime.so

E /usr/local/bin/libtvm_runtime.so

E /usr/sbin/libtvm_runtime.so

E /usr/bin/libtvm_runtime.so

E /usr/sbin/libtvm_runtime.so

E /usr/bin/libtvm_runtime.so

E /usr/games/libtvm_runtime.so

E /usr/local/games/libtvm_runtime.so

E /snap/bin/libtvm_runtime.so

E /home/ubuntu/workspace/tvm/python/tvm/libtvm_runtime.so

E /home/ubuntu/workspace/libtvm_runtime.so

Once the script runs successfully, am I supposed to get all the C code needed to build and download onto the MCU?
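The traceback lists every location that was searched for libtvm.so and libtvm_runtime.so, which suggests that no compiled TVM library exists in any of them. Below is a minimal sketch for checking this by hand. It is not TVM's own lookup code: `candidate_dirs` and `find_libs` are hypothetical helpers, the `build` subdirectory is an assumption about where a source build places its output, and the repository path is the one shown in the traceback.

```python
import os
from pathlib import Path

LIB_NAMES = ("libtvm.so", "libtvm_runtime.so")

def candidate_dirs(repo_root, environ=None):
    """Directories to search, in priority order (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    dirs = []
    # An explicit TVM_LIBRARY_PATH, if set, is checked first.
    if environ.get("TVM_LIBRARY_PATH"):
        dirs.append(Path(environ["TVM_LIBRARY_PATH"]))
    # Assumption: a source build writes its libraries to <repo>/build.
    dirs.append(Path(repo_root) / "build")
    # Every PATH entry, mirroring the candidate list in the traceback.
    dirs.extend(Path(p) for p in environ.get("PATH", "").split(os.pathsep) if p)
    return dirs

def find_libs(repo_root, environ=None):
    """Return every TVM shared library found in the candidate directories."""
    found = []
    for directory in candidate_dirs(repo_root, environ):
        for name in LIB_NAMES:
            if (directory / name).is_file():
                found.append(directory / name)
    return found

if __name__ == "__main__":
    hits = find_libs("/home/ubuntu/workspace/tvm")
    if hits:
        for path in hits:
            print("found:", path)
    else:
        print("no TVM library found; TVM may need to be built from source first")
```

If nothing is found, the likely next step is to build TVM from source, or to point `TVM_LIBRARY_PATH` at a directory that already contains the libraries, before re-running pytest.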

- Another script, for AOT: tvm/tests/python/relay/aot/test_crt_aot.py (https://github.com/apache/tvm/blob/main/tests/python/relay/aot/test_crt_aot.py)

There is no information about the required environment, so I haven’t run this script yet. Does it generate C code that can be compiled for, say, Arm v7? At this point, can we generate C code with AOT and then build and customize an application around it?

With TFL Micro, we can create a project in an IDE, pull the C runtime sources from the TFL Micro repository into the project, and convert a tflite model into a large C array, which TFL Micro loads before running inference. That is all it takes to run inference with TFL Micro, and the MCU does not need to be connected to a host PC. I’d like to do the same thing with AOT. Is that possible right now, and is there a sample?
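For reference, the "convert the model into a large C array" step mentioned above can be sketched in a few lines of Python. This is only an illustration of the idea and not a TFL Micro or TVM tool: `bytes_to_c_array` is a hypothetical helper, and the input bytes are placeholders rather than a real model.

```python
def bytes_to_c_array(data, name="model_data", per_line=12):
    """Render raw bytes as C source defining a const array and its length."""
    lines = [f"const unsigned char {name}[] = {{"]
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {name}_len = {len(data)};")
    return "\n".join(lines)

# Placeholder bytes. A real model would be read from disk instead,
# for example with pathlib.Path("model.tflite").read_bytes().
print(bytes_to_c_array(bytes([0x01, 0x02, 0x03, 0xff])))
```

The resulting text can be saved as a .c file and compiled into the firmware image, so the model ships with the application and no host connection is needed at run time.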