My code:

    from tvm.relax.expr_functor import PyExprMutator, mutator

    @mutator
    class MyPass(PyExprMutator):
        def __init__(self, mod=None) -> None:
            super().__init__(mod)
            self.id = 0

        def visit_binding_block(self, block):
            print(f" enter visit binding block {self.id}")
            self.id += 1
            return super().visit_binding_block(block)

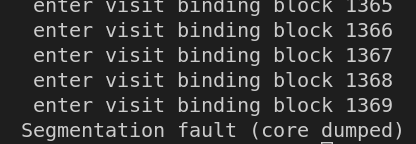

and got the result: