RFC: Type-Directed Relay Fuzzing Library

Summary

This RFC proposes to employ fuzzing (mass generation of random programs) for Relay in order to test the compiler. Fuzz testing for Relay can expose bugs and improve confidence in the compiler by generating vastly more test cases than can be written by hand. In particular, the RFC proposes libraries for making it easy to implement Relay fuzzers (different program generators can implement specialized generation policies, useful for testing particular passes) and infrastructure for running Relay fuzzers at scale in order to identify possible compiler bugs.

Motivation

Fuzzing is a common technique for exposing compiler bugs, namely by generating valid (or invalid) programs and ensuring the compiler produces correct output (defined by a language specification or a test oracle like “the compiler should never crash, even with invalid output” or “the same program should yield the same results when compiled with different standards-compliant compilers”). See this paper for a discussion of the classic CSmith fuzzer (which has found many bugs in C compilers); another well-known program fuzzer is jsfunfuzz.

As I described in my TVMCon talk on this subject (covering work done with my UW colleagues, Edward Misback and Michael Flanders), fuzzing is a promising means for us to test our compiler passes at scale – this RFC concerns fuzzing for Relay specifically, but the community would also do well to consider fuzzing TIR this way (though it would have different considerations; note that TVMFuzz and Tzer have targeted TIR). The large-scale generation of random tests will increase our confidence in our compiler implementation (passes, as well as the parser).

Overview

The main challenge in fuzzing is ensuring that the outputs will be valid programs (or otherwise ensuring that they will only be invalid in clearly defined ways). In the case of Relay, the type system provides rules for creating correct programs–hence my calling this a “type-directed fuzzer”–but the type relations on operators mean that a fuzzer would have to reason about tensor shapes. This RFC discusses different approaches for dealing with shapes in the fuzzer. Incidentally, since this fuzzer has to reason about typing rules, it has the secondary benefit of serving as an executable specification for some of the language rules.

For my TVMCon talk, I implemented a prototype, which is available here. I have since simplified the approach for dealing with tensor shapes and refined the interfaces in this prototype, which I will discuss in detail below.

In this RFC, I propose one way we can implement a fuzzer for Relay and infrastructure to support it. I have recently learned of Tzer, another work that has fuzzed Relay (and have greatly appreciated it), so I would also like to emphasize that this proposal is for one way of implementing fuzzing for Relay, ideally in a manner that will be extensible and allow for incorporating further techniques, rather than a suggestion that this is the only way we should approach fuzzing.

Goals

The additions proposed in this RFC serve two purposes:

- To provide reusable libraries for generating Relay programs that type check, to be used for testing specific passes or behavior.

- To provide a framework for fuzz-testing Relay at scale, implemented using the above libraries. The fuzzer should be capable of generating code that uses most of the language’s features, which can be used for testing general-purpose passes in Relay. The framework should allow for bugs found when compiling generated programs to be saved and categorized.

I emphasize that these are fuzzing libraries: It may be of interest to generate programs with a specific structure (for example, providing a lot of fusable operators), so these libraries should make it easier to create new generators that fulfill specific needs for testing passes. (Hence my comment on this proposal not coming to the exclusion of other fuzzing techniques.)

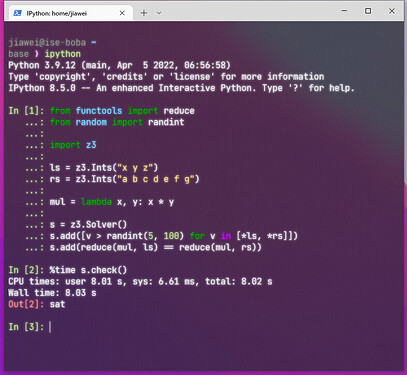

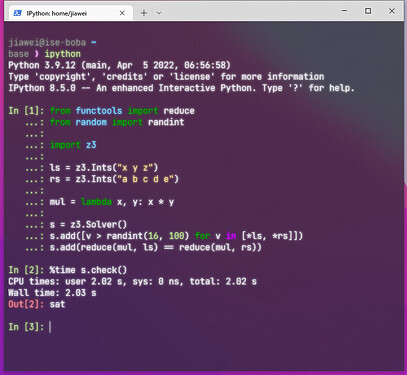

Fuzzer Implementation

As a proof of concept, I have written a prototype implementation of the general-purpose fuzzer and fuzzing libraries in 1744 lines of Python, which includes handling for 7 type relations and 21 operators. This is a more modular reimplementation of the prototype presented in my TVMCon talk, which I used to find a bug in the match completeness checker and a parser roundtripping bug (refs of refs would not parse back, though this has not yet been fixed).

Core Approach

The main approach for type-directed fuzzing is to build up programs recursively by following the typing rules “in reverse”:

- Given a target type, choose an expression AST node that can yield a value of that type.

- Use the target type to determine the types of subexpressions and generate those recursively.

Note that when generating an expression, we must keep track of which variables are in scope and with what type (let us call this the “environment,” a mapping of variables to types). This is because let expressions and function definitions introduce new variables into the scope, which dictates when it is valid for variables to appear.

Base cases for the recursion:

-

If there is a variable in scope of the appropriate type, we can simply return the variable.

-

We can return a literal of the appropriate type. In Relay, here are the literals for different types:

- Tensor types: A tensor constant

- Tuple types: A tuple expression

- Reference types: A

refexpression - Function types: A function expression

- ADTs (type calls): A call to a constructor of that ADT

For an example of a recursive case, let us consider the typing rule for let expressions (and for simplicity, let us assume we are not defining a recursive function). A let expression of the form let v1 = e1; e2 has the type T2 in the environment Γ if:

-

e1has some typeT1under the environmentΓ, -

e2has the typeT2under the environmentΓ ∪ {v1 ↦ T1}

This means if we start with a type T2 and choose to produce a let expression, we would have to choose a type T1, generate an expression e1 of type T1, add v1 to the scope with type T1, and finally generate an expression e2 of type T2 using the updated scope.

We can ensure termination by forcing the expression generation to choose a base case after a certain point, e.g., by limiting AST depth. In the prototype, there is a “fuel” parameter that is decremented upon recursive calls and forces base cases once the fuel “runs out” (not a very sophisticated approach).

A note on graph bindings: Graph bindings in Relay can be treated like variable bindings. An expression can be reused for a graph binding as long as it contains only variables that are in scope and does not redefine existing variables (redefining an existing variable would fail Relay’s wellformedness check; we could replace the redefined variable with a fresh one, but then this would no longer be a graph binding). The prototype simply keeps all generated subexpressions in scope (grouped by type), except those that introduce new variables (lets, function definitions, and anything containing a let or function definition), and chooses between them when inserting a graph binding.

Handling Operators

The type checker references type relations to check operator calls. These relations are functions that take the call’s input types and output type (which may be underspecified TypeVars) and check that concrete types are correct or unify TypeVars with concrete types, ultimately determining whether the operator accepts those types. The bulk of the logic tends to deal with tensor shapes. See the type relation for bias addition for a simple (and fairly typical) example.

Generating operator calls differs from ordinary type checking in Relay in that the algorithm described in the previous section starts from the final return type: we know the output type of the operator call, but not the input types and we have not yet generated the input expressions either. This is less information than the type checker has. Yet, if we generate input expressions to the call without type checking them, it is possible that the types will be invalid and will mean that we wasted time generating the input expressions. Hence, it is important for us to solve the type relations by going backwards: given an output type, find input types that satisfy the relation. The main issue is that the relations are implemented in C++, so there is no convenient automatic way to derive solutions to the relations.

In my TVMCon talk, I discuss multiple approaches to generating operator calls:

- Formalize type relations in a solver domain (e.g., constraint logic programming, integer linear programming) and solve for valid types – most of relations are only checking constraints on tensor shapes, so this would be feasible, but entails manual effort and requires understanding all of the type relations. Additionally, it introduces a dependency on the solver.

- Use brute force to solve type relations: This approach does not require manual reimplementation of the type relations (in fact, the type relation can be reused as-is for checking the solutions). However, brute force seems wasteful and will not scale well to larger tensor shapes.

- An entirely different approach: Instead of attempting to “solve” type relations in the normal recursive algorithm described above, instead we can “sample” solutions. In particular, we could randomly choose valid argument types for a type relation and then use the type relation implementation to solve for the result type, giving us a solution to the type relation and a solution. We can keep these sampled solutions in a cache. If we encounter the return type to a solution in the cache, we can reference the solution and use it to generate argument expressions for the operator call. This approach relies on the assumption that it’s easier to randomly generate valid arguments to an operator than to work backwards from the solution to find argument types. However, this approach is limited in generality unless there is a very large cache of solutions (otherwise, we will not be able to match the types very often). Notably, however, this approach does not require reformulating type relations and also does not entail a brute force search.

I was particularly pleased with the sampling approach when I implemented the first prototype, since it did not require a solver or independent reformulation of type relations, but while working on sampling for the more recent version, I realized that it did still entail manual work: It was necessary to define how to sample valid argument types. This, in turn, led me to realize that most of my implementations of the sampling procedure consisted of generating a return type and, well, manually working out how to set up the arguments from that – I had written manual solvers without realizing it!

Hence, the approach I followed in the most recent prototype was to simply write out logic for solving type relations. At least for the operators examined, this proved to be rather simple (simpler than the type relations themselves, since the solver only needs to give a solution with no constraints). These solvers are located in registered_ops.py in the prototype and are generally quite simple. (Note that the solver for conv2d does not handle all potential parameters to it.) Notice that the same file also includes sampling methods, which simply generate a return type and then call the solver.

The following components are used for generating operator calls:

- A recognizer: This is a simple function that determines if a given type

Tis a possible return type for an operator call (i.e., is this an opportunity for an operator call?). These are generally very simple, checking only if a tensor is of a certain rank. - A solver: A function that takes a return type

Tand returns argument types and attributes that result in a return type ofTfor an operator. - A sampler: A function that returns a set of valid argument types, attributes, and return type for an operator.

Solvers do not need to be perfect in order to be useful: Even if a solver is not able to find all possible solutions, as long as it always returns correct solutions, the generated programs will type check. In particular, the following invariants must hold:

- For a type

T, ifrecognizer(T)returns true,solver(T)must find a valid solution. - If

sampler()yields a return type ofT,recognizer(T)must be true.

In principle, the sampler can be omitted, but I have included in the prototype in case the community favors this approach (an advantage, as mentioned above, is that solutions can be cached, perhaps in a database, and not require any computation at all, but the solvers presented in this prototype do not seem expensive).

The recognizer could also, in principle, be omitted if the solvers are specified to fail cleanly when given a return type they do not support. However, this proposal keeps solving and recognition of possible operator calls separate. Many operators that do not have the same type relation do share a recognizer in this implementation, meaning that the recognizer could be run only once and determine that several groups of operators are applicable at once.

Operator Registry

There are dozens of operators in TVM and the fuzzer would have to be able to reason about all of them. The prototype addresses this by having a registry of recognizers, solvers, and samplers, referenced by operator. The registry would permit operators to share implementations (this is most useful for recognizers – many operators are capable of returning a tensor of arbitrary shape, so the fuzzer can call one recognizer and know that any of these operators is permitted) and it would also allow for there to be multiple possible choices of implementation (in case it is desirable to have solvers that only return specific kinds of solution or recognizers that will restrict the tensor shapes accepted), with the convention that all operators are expected to have a “default” implementation listed. The registries are defined in op_properties.py. These registries should allow for flexibility and easy reuse. The implementation of recognizers, solvers, and samplers would only need to change if the underlying relation changes.

Expression, Type, and Module Generators

The rest of the prototype is structured in terms of separating out different kinds of generators: There are expression generators, type generators, and a module generator, the last of which is the front-end interface for generating programs. Included in the prototype are default implementations of the expression generator and the type generator, which are extended in the general-purpose fuzzer (which is meant to serve as an example for how to write other generators). These default implementations include some of the basic logic of the type system, allowing extensions to focus on generation policy. Each generator is essentially a visitor “in reverse”: rather than recursing down an AST, as a visitor does, it instead generates new AST nodes, choosing between which node to insert based on a policy (discussed below).

To illustrate the structure of generators (more concisely than in the prototype), here is pseudocode:

class ExprGenerator:

def generate_expr(self, ty):

if time_to_generate_literal():

return self.generate_literal(ty)

return self.generate_connective(ty)

def generate_literal(self, ty):

if isinstance(ty, relay.TensorType):

return self.generate_tensor_literal(ty)

if isinstance(ty, relay.TupleType):

return self.generate_tuple_literal(ty)

# etc

def generate_connective(self, ty):

if time_to_generate_let():

return self.generate_let_expr(ty)

if time_to_generate_if():

return self.generate_if_expr(ty)

# etc

# implement generators for each case as well

Implementing Specific Generation Policies

The included general-purpose fuzzer includes various parameters to specify generation probabilities and allows them to be customized (see the test cases for examples of customization). However, while this parameterization can be useful (and can be taken beyond what the prototype exhibits), it would become unwieldy to have only a single program generator that is constantly extended with additional generation policies that are selected by parameterization. Instead, the proposal is to implement different generation policies by creating new generators that reuse components, which is why this proposal is phrased in terms of a fuzzing library, rather than having a single fuzzer per se.

For example, suppose it would be useful to test a compiler pass by inserting certain premade blocks into generated programs. This can be accomplished by extending the existing expression generator with a case for inserting the blocks (when the type is appropriate), with some probability, in the generate_expr() function. The prototype does not include such a generator, but it may be useful for testing specific passes, so I put the question to the community as to whether this kind of extensibility is necessary for fuzzing – my position is that it should be easy to create new, specialized program generators because it is difficult to predict what sorts of programs will be needed to test compiler passes.

Fuzzing Infrastructure

Given fuzzer implementations, it is necessary to generate programs at scale to identify possible compiler bugs, which requires further infrastructure to perform the generation of programs, run the tests, and analyze the results.

Program Generation

It is not actually necessary to generate programs particularly often. If existing type relations and language features do not change (and they do not change often), then the programs already generated will continue to be valid test cases. However, new operators may be added more often than that, so it would be desirable to create new test cases for those when the need arises. However, the essence is that program generation for fuzz testing only needs to be done occasionally (the fuzzer can be run in parallel for a defined period and the generated programs can be saved) and then these programs can be reused for tests. In particular, the generation should be offline and not included in the CI – this spares us from flaky tests (though the random generation seeds can be fixed to avoid that too) and limits the amount of computation necessary.

Running Tests

There are different notions of “correctness” that could make sense for generated programs. The simplest test oracle would be ensuring that the generated program compiles without errors or crashes. Other oracles of interest might be testing for parser round-tripping (this was one used in my TVMCon talk) and even comparing execution results between different implementations (comparing the VM and graph runtime, for example, or comparing against the reference interpreter I wrote some time ago for the purpose of fuzz testing). Any cases that fail can be saved to be analyzed. So long as the correctness properties can be easily checked automatically, they will be useful for fuzz testing.

When to Run

Program generation and execution at scale will be computationally expensive and will require resources that would, presumably, otherwise be used for CI. Even if a single large set of generated programs is generated and saved, running all the tests and all the oracles would still be expensive. Hence, fuzz testing should not be run particularly frequently (such as in CI); it would make sense to run the large-scale tests periodically and offline (perhaps after a certain number of merges). Failing test cases should be studied and those that expose important bugs should be added to the CI (though the CI itself should not contain random generation to avoid flakiness).

However, more specialized program generators that are intended for testing and prototyping specific features during development (hence the emphasis on extensibility) should be easily accessible to developers to run at small scales in order to test their features as they work on them.

Processing Errors

If a generated test case results in an error, this will have to be studied, but there is the possible issue of “fuzzer fatigue”: Generating programs at large scale can lead to many errors and if the generation is simple and random, there may be many programs triggering the same error (so it would not be very illuminating to manually examine all of them). Many techniques for addressing this problem have been proposed (they are generally called “fuzzer taming”); it seems that a common heuristic is comparing the tops and bottoms of stack traces and grouping errors based on those, allowing developers to focus their efforts on specific categories. (This would also incentivize having more informative error messages.) A system like Elastic Recheck may be useful for this kind of logging and searching.

It may also be the case that some errors are not worth addressing, either due to limited resources or simply because the errors are too unlikely to arise. If this is determined, it may be necessary to filter out some test failures based on the stack traces or, if there are some AST features that can be identified as the cause for the error, filter the programs out at the time of generation (or even modify the generator not to produce them).

In general, the generation of programs, running of tests, and analysis of errors are tasks that should be easily parallelizable and can be allocated specific computation budgets so as not to compete excessively with CI.

Assessing the Utility of Fuzzing

A common way of determining the efficacy of a fuzzing is tracking code coverage, so we may want to measure the code coverage of the tests (i.e., code coverage within TVM). Reasoning about coverage over the FFI boundary may be challenging, but it is certainly possible and has been done in TVMFuzz and Tzer. This will allow for assessing if the generation algorithm should be adjusted to trigger more paths in the compiler (especially in passes). Additionally, tracking coverage would make it feasible to use “white-box” fuzzing techniques (which guide the generation algorithm using coverage information or further analysis about how to trigger branches in the compiler), as in Tzer.

Possible Drawbacks

There would be a lot of implementation effort involved in creating and maintaining the fuzzer and the infrastructure, as well as in analyzing the results of fuzz tests. In addition to the human effort, there would also be computational resources spent on generating and running test cases. Furthermore, developers would likely have to specify how compiler passes should be tested using the fuzzer, requiring community effort and buy-in to make use of the fuzzer (yet another demand on developers’ attention). Whether these expenditures are worthwhile depends on the quantity and nature of the bugs found, which depends also on the ability of the fuzzers we write to probe the various code paths in the compiler.

(Arguably finding no bugs this way would increase confidence in the compiler – recent versions of LLVM and GCC often turn up no bugs during long fuzzing campaigns.)

Rationale and Alternatives

Without fuzz testing, we are entirely reliant on human review of code, manually crafted test cases, and user bug reports (or their absence) for ensuring the reliability of the compiler. Generating random test cases is likelier to probe cases that are tedious to construct by hand or were not anticipated by the developers.

As discussed in the TVMCon talk, the alternative of using a purely grammar-based fuzzer (much less effort to implement) is not very workable; my collaborators and I attempted to use it and less than 0.1% of the generated programs even type checked, and those that did tended to be trivial cases (incomplete match expressions with zero branches). It seems inevitable that fuzzing Relay programs will require some kind of reasoning about tensor shapes and to the best of my knowledge, I cannot imagine a simpler approach.

Unresolved Questions

Language Features Not Addressed in the Prototype

The prototype is able to generate programs incorporating many Relay features, but with some limitations:

- The generated modules include only a new

mainfunction (as well as the content from the prelude). - The only polymorphic functions used are those from the prelude (

map,fold, etc.); new ones are not generated. - Only the default ADTs (list, option, tree) from the prelude are used; new ones are not generated.

- All tensors must have static shapes.

- All variables have types annotated.

Of these limitations, (1) would be the easiest to address and would permit mutual recursion. (2) may be possible to address in principle, but would have some complexity (we would have to be careful with type variables and would need to keep track of whether we have a value of those types in scope, since we cannot arbitrarily create literals for type variables). (3) could also potentially be addressed, though it would be necessary to check whether it is possible to create instances of an arbitrary ADT (i.e., we must check that it has a base case). (4) and (5) would be more difficult to address. Dynamic shapes may be possible to address by starting with static shapes and then “relaxing” the solution by replacing some dimensions with Any (I am not sure if this is guaranteed to be correct). Removing annotations would require reasoning about type inference in Relay, which is not clearly specified; this could perhaps be addressed in a conservative manner (identifying specific situations where the type can clearly be inferred and removing annotations only in those cases, which would be useful even if some annotations left are not strictly necessary). Testing type inference would be a worthy aim, since it is a source of bugs.

Design Questions

I welcome all discussion of different aspects of this RFC. Below, I highlight some topics for which I would be particularly curious to have the community’s feedback.

- There are tradeoffs related to the language used for implementing the fuzzer. The prototype is written in Python, but speed will be a priority for running tests at scale, so it may be more useful to implement the fuzzer in C++. On the other hand, it is easier to experiment in Python, which might make it easier to write program generators for use in testing a new feature.

- There are various configuration options in the general-purpose fuzzer in the prototype, but there are more options I could imagine adding and being useful (e.g., a list of operators to include, more ways to customize generation chances). What are the best options to include for the general-purpose fuzzer and where should we draw the line between adding more configuration into a single general-purpose fuzzer and writing a new generator? My view is that new generators should be used to define new policies altogether.

- Fuzzing infrastructure poses the most questions: Should it be defined within TVM’s codebase, or live separately? Where should the scripts for invoking it be defined as well?

- What test oracles would be a high priority to include for fuzz testing? Are there pass-specific oracles we may want? (E.g., ensure that a pass accepts all inputs with a given property/correctly rejects inputs that fail the check.)

- How often should fuzz tests be run? Where should results be stored? Who would be responsible for analyzing the results? What summary statistics would make sense?

- I would be interested in technical advice on tracking code coverage, especially across the C++ and Python boundary, if it is desirable (I think it is).

- Does sampling solutions make sense when the solvers are simple to use?

- Where in the codebase should solver and recognizer definitions be located? Should they be near the type relation definitions or in a different part of the codebase altogether? (In the latter case, I think they should be grouped the same way the operators are grouped.)

- The handling of graph bindings is an addition in the latest prototype that was not present in my earlier one, so I would appreciate particular scrutiny towards their handling and whether it is being done correctly. I could not find a good written specification of how graph bindings are intended to work, so I took a guess. (See these lines in the prototype. Note that variables and constants are not eligible, since they are atomic expressions–if I am mistaken that these do not count as graph bindings, please correct me.)

Future Possibilities

An important additional tool for fuzz testing TVM would be a program minimizer (CReduce is a famous example for C and C++), which takes a failing test case and removes parts of it until it finds the smallest program that yields the same error. This would make error cases easier to analyze and also be useful for analyzing bug reports. It may be the case that a type-directed approach like that used in the prototype program generator would be useful for minimization: Try replacing each AST subtree with a smaller one of the same type.

A program mutator (which simply replaces one subtree with another) may also be useful for creating test cases: A developer could provide a manually specified test case (like an existing model) and apply the mutator to create new test cases by swapping out parts of the program, perhaps including more language features in it. This could be implemented by choosing an AST subtree and applying the existing fuzzer to create a new expression of the same type, though reasoning about changes that change the type may be more difficult in this approach.

It will likely also be valuable to generate programs that are known to be incorrect, for ensuring that the compiler will reject those programs and not crash. The infrastructure presented here can likely be reused for generating known-incorrect programs for testing error cases; this can be done with mutation by replacing a subtree of one type with a value of an incorrect type, though it will be necessary to take care not to replace it with another value that may, coincidentally, also type check. This may be a feasible further line of investigation if the need for it proves significant.

Finally, many members of the community are participating in an exciting effort to design Relax, a successor to Relay. One of Relax’s design aims is focusing on support for symbolic shapes. It may be worthwhile to consider if any insights from this fuzzing work may be applicable to Relax, since fuzzing can be useful at early stages of development. The additional complexity of symbolic shapes may be a compelling argument for the use of CLP or ILP solvers.