- Feature Name: DietCode: An Auto-Scheduler for Dynamic Tensor Programs

- Start Date: (2022-05-10)

- RFC PR: apache/tvm-rfcs#xx

- GitHub Issue: apache/tvm#yy

Summary

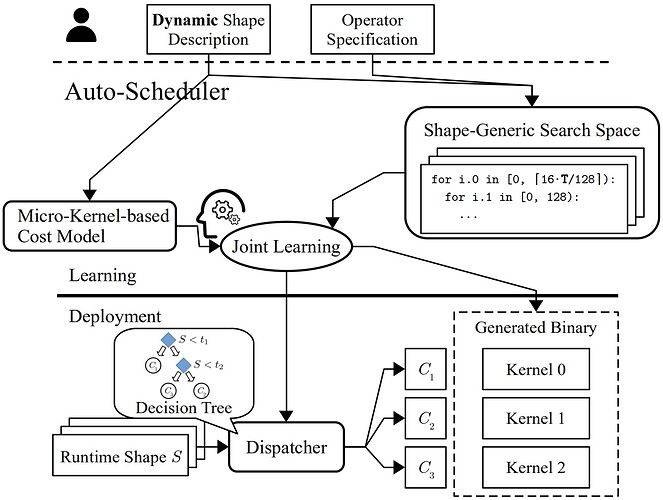

We propose to integrate DietCode, an auto-scheduler for dynamic tensor programs, into AutoTIR. DietCode offers the following features:

- A shape-generic search space to cover possible shapes in dynamic shape workloads.

- A dynamic-shape aware cost model to judge the quality of schedule candidates.

- Enhancement to the TVM CUDA codegen for imperfect tiling.

DietCode was published at MLSys 2022; please see the paper for more details and evaluations. The latest DietCode codebase is also publicly available here.

Motivation

Achieving high performance for compute-intensive operators in machine learning workloads is a crucial but challenging task. Many machine learning and systems practitioners rely on vendor libraries or auto-schedulers to do the job. While the former require significant engineering effort, the latter (in TVM) currently support only static-shape workloads. It is difficult, if not impractical, to apply the existing auto-scheduler directly to dynamic-shape workloads: every possible shape would have to be tuned separately, which leads to extremely long tuning time.

We observe that the key challenge existing auto-schedulers face when handling a dynamic-shape workload is that they cannot construct a single search space covering all the possible shapes of the workload, because their search space is shape-dependent. To address this, this RFC aims to add dynamic-shape support to AutoTIR by integrating the DietCode framework, which constructs a shape-generic search space and cost model to auto-schedule dynamic-shape workloads efficiently.

Our evaluation shows that DietCode has the following key strengths when auto-scheduling an entire model end-to-end:

- reduces the auto-scheduling time by up to 5.88x compared with the current auto-scheduler on 8 uniformly sampled dynamic shapes, and

- improves performance by up to 69.5% over the auto-scheduler and by up to 18.6% over the vendor library.

All these advantages make DietCode an efficient and practical solution for dynamic-shape workloads.

Guide-Level Explanation

The existing experiments were largely conducted with the auto-scheduler. However, after syncing with the AutoTIR team over several quarters, we plan to integrate DietCode into MetaSchedule (AutoTIR), because it provides a more systematic interface and a cleaner integration path with fewer hacks.

The following example shows the additional information that users are required to provide to the system:

```python
# A symbolic shape constraint
T = tir.ShapeVar('T')

# The candidate values of `T`
T_vals = list(range(1, 128))

task = Task(func=Dense,
            args=(16*T, 768, 2304),
            shape_vars=(T,),
            wkl_insts=(T_vals,),
            # One weight per candidate value of `T` (uniform here)
            wkl_inst_weights=([1. for _ in T_vals],))
```

To enable auto-scheduling for dynamic-shape workloads, users only need to:

- Use `ShapeVar`s in the TE/TensorIR computation.

- Specify the weight/distribution of each shape value.
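To make the role of the weights concrete, the sketch below expands the `Dense` task above into its static-shape workload instances and combines per-instance costs into one weighted objective. It is a minimal sketch under assumptions: the helpers `expand_instances` and `weighted_objective` are hypothetical, the costs in the usage lines are placeholders, and a normalized weighted sum is only one plausible way the weights could be used, not the confirmed DietCode formulation.

```python
def expand_instances(t_vals):
    # Each candidate value of the shape variable T yields one static-shape
    # Dense workload instance with args (16*T, 768, 2304), as in the Task above.
    return [(16 * t, 768, 2304) for t in t_vals]


def weighted_objective(instance_costs, weights):
    # Hypothetical objective: the weighted average of per-instance costs, so
    # that frequent shapes influence the search more than rare ones.
    if len(instance_costs) != len(weights):
        raise ValueError("one weight is required per workload instance")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * c for w, c in zip(weights, instance_costs)) / total_weight


T_vals = list(range(1, 128))
instances = expand_instances(T_vals)
print(len(instances), instances[0], instances[-1])
# 127 (16, 768, 2304) (2032, 768, 2304)

# Placeholder costs for two instances; the first shape is 3x as frequent.
print(weighted_objective([1.0, 3.0], [3.0, 1.0]))  # 1.5
```

With uniform weights, as in the example task, every shape contributes equally; skewing the weights toward common sequence lengths would bias tuning toward them.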

Notes:

- Symbolic constraints must be specified additionally in Relay, but could be inferred automatically once Relax is introduced.

- The proposed interface does not change any existing functionality.

Reference-Level Explanation

Here is an overview of the DietCode framework design.

- We construct a shape-generic search space that consists of micro-kernels, each an incomplete program that carries out one tile of the complete computation, to efficiently support dynamic-shape workloads.

  We use hardware constraints (e.g., the maximum number of threads and the amount of shared and local memory) rather than shape information to determine the micro-kernel candidates. These candidates serve as building blocks and are executed repeatedly to carry out a workload instance (defined as a static-shape instance of the dynamic-shape workload).

- We build a micro-kernel-based cost model. The key insight is that the cost of a complete program P that is made up of a micro-kernel M can be decomposed into two parts:

  - A shape-generic cost function `f_MK` that predicts the cost of M, and

  - A shape-dependent adaptation cost function `f_adapt` that defines the penalty of porting M to P.

  While `f_MK` has to be learned and updated with real hardware measurements during the auto-scheduling process, `f_adapt` is a simple term that can be evaluated from the core occupancy and the padding ratio (in other words, it does not require feature extraction from the schedules).
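Both ideas can be sketched in Python for a dense layer whose output is `(16*T, 2304)`. This is a minimal, non-authoritative sketch: the hardware limits, the tile-size menu, the shared-memory formula, and the multiplicative way the two `f_adapt` factors scale a predicted micro-kernel throughput are all our assumptions for illustration, not DietCode's actual rules (see the paper for the exact formulation). The point it shows is that `micro_kernel_candidates` never looks at a workload shape, whereas `padding_ratio` and `core_occupancy` are cheap functions of the shape that need no schedule features.

```python
import math
from itertools import product

# Assumed hardware limits, typical of recent NVIDIA GPUs; a real system
# should query them from the target device instead of hard-coding them.
MAX_THREADS_PER_BLOCK = 1024
MAX_SHARED_MEM_BYTES = 48 * 1024
WARP_SIZE = 32


def micro_kernel_candidates(tile_sizes=(8, 16, 32, 64, 128),
                            elems_per_thread=(1, 2, 4, 8, 16),
                            k_tile=8, dtype_bytes=4):
    """Enumerate (tile_m, tile_n, elems_per_thread) micro-kernels using only
    hardware constraints; no workload shape is consulted."""
    candidates = []
    for tm, tn, ept in product(tile_sizes, tile_sizes, elems_per_thread):
        num_threads = tm * tn // ept
        # Shared memory holds one k-slice of both input tiles.
        smem_bytes = (tm + tn) * k_tile * dtype_bytes
        if (num_threads % WARP_SIZE == 0
                and num_threads <= MAX_THREADS_PER_BLOCK
                and smem_bytes <= MAX_SHARED_MEM_BYTES):
            candidates.append((tm, tn, ept))
    return candidates


def padding_ratio(shape, tile):
    # Fraction of the padded iteration space doing useful work (1.0 = perfect tiling).
    useful = math.prod(shape)
    padded = math.prod(math.ceil(s / t) * t for s, t in zip(shape, tile))
    return useful / padded


def core_occupancy(shape, tile, num_cores):
    # Fraction of core slots kept busy when the tiles run in waves over num_cores cores.
    num_tiles = math.prod(math.ceil(s / t) for s, t in zip(shape, tile))
    waves = math.ceil(num_tiles / num_cores)
    return num_tiles / (waves * num_cores)


def adapted_throughput(f_mk_throughput, shape, tile, num_cores):
    # Hypothetical combination: scale the learned f_MK prediction (treated
    # here as a throughput) by the two shape-dependent f_adapt factors.
    return (f_mk_throughput
            * padding_ratio(shape, tile)
            * core_occupancy(shape, tile, num_cores))
```

For example, with T = 5 the output is `(80, 2304)`. A `(64, 128)` micro-kernel then has a padding ratio of 80/128 = 0.625 and, assuming 80 cores, its 36 tiles occupy 36/80 = 0.45 of the cores in a single wave. Only these cheap factors change from one value of `T` to the next; the candidate list is enumerated once.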

Drawbacks

- The current compilation workflow generates one program per input shape.

We could merge those static-shape programs into a single dynamic-shape program, as in the following code snippet:

```cuda
__global__ void default_function(float* X, float* W, float* Y, const int T);  // Note the `T` here.
```

However, our evaluations indicate that this program performs at least 5% worse than the static-shape alternatives. Hence, we decided to trade binary size for runtime performance, which can be problematic when hardware resources are limited.

Rationale and Alternatives

There is an approach proposed by Nimble, which partitions a range of dynamic shapes into buckets and tunes one kernel for each bucket. We could, of course, implement this approach in the current auto-scheduler and AutoTIR. However, as evaluated in the DietCode paper, this approach is not guaranteed to perform as well as kernels tuned for static shapes.
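One way to see why bucketing can fall short of static-shape performance is to measure how much of a bucket kernel's work is wasted. The sketch below assumes that each shape is padded up to its bucket's upper bound so the bucket's kernel can be reused; both this padding strategy and the boundaries are our assumptions for illustration, not a description of Nimble's implementation.

```python
import bisect


def bucket_upper_bound(t, boundaries):
    # Smallest bucket boundary >= t; boundaries must be sorted ascending.
    i = bisect.bisect_left(boundaries, t)
    if i == len(boundaries):
        raise ValueError(f"T={t} exceeds the largest bucket {boundaries[-1]}")
    return boundaries[i]


def bucket_utilization(t, boundaries):
    # Fraction of the bucket kernel's work that is useful when T is padded up
    # to the bucket's upper bound (1.0 means no padding waste).
    return t / bucket_upper_bound(t, boundaries)


BOUNDARIES = [16, 32, 64, 128]  # hypothetical power-of-two buckets
print(bucket_upper_bound(17, BOUNDARIES), bucket_utilization(17, BOUNDARIES))
# 32 0.53125
```

Shapes just above a boundary waste close to half of the computation, whereas a kernel tuned for the exact static shape has no such penalty.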

Prior State-of-the-Arts

- Reuse-based Tuner

  Selective Tuning (Cody Yu 2019) and ETO (Jingzhi Fang et al. VLDB 2021) group workloads into clusters based on a set of pre-defined rules (e.g., the similarity ratio in Selective Tuning) and reuse the same schedule within each cluster.

- Dynamic Neural Networks

  Dynamic batching is a common graph-level optimization adopted by frameworks such as DyNet (Graham Neubig et al. 2017), Cavs (Shizhen Xu et al. USENIX ATC 2018), BatchMaker (Pin Gao et al. EuroSys 2018), and TensorFlow Fold (Moshe Looks et al. ICLR 2017) for cases where the batch size is dynamic.

  Nimble (Haichen Shen et al. MLSys 2021) and DISC (Kai Zhu et al. EuroMLSys 2021) both design a compiler to represent and execute dynamic neural networks.

  Cortex (Pratik Fegade et al. MLSys 2021) is a compiler-based framework for recursive neural networks.

Those works focus on the graph-level optimizations and therefore are orthogonal to DietCode, which operates on each individual layer. In fact, those graph-level solutions can also leverage DietCode for efficient operator code generation.

Unresolved Questions

- The current design does not support arbitrary shape dimensions. For better auto-scheduling outcomes, we expect the shape dimensions to be specified beforehand.

- The proposed approach mostly works on NVIDIA GPUs and has not been tested on other hardware platforms.

Future Possibilities

- Evaluate more operator use cases.

- CPU Support

Upstream Milestones

We propose the following milestones for upstreaming, where each bullet point corresponds to a PR with unit tests of roughly several hundred lines.

- Code Generation Support
  - Local Padding
  - Loop Partitioning
- Auto-Scheduler
  - Frontend Interface
  - Sketch Generation
  - Random Annotations
  - Program Measurer
  - Micro-Kernel Cost Model
  - Evolutionary Search
  - Decision-Tree Dispatching
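The Decision-Tree Dispatching milestone is not detailed in this RFC. As a rough illustration only: a decision tree over the single shape variable `T` splits its range into intervals, so the hypothetical helpers below build such intervals directly from a per-shape kernel choice (merging consecutive values of `T` that picked the same kernel) and dispatch with a binary search. This is a stand-in under our own assumptions, not DietCode's dispatching implementation, and the kernel names are placeholders.

```python
import bisect


def build_dispatch_table(kernel_by_shape):
    """Collapse a {T: kernel_name} map into sorted interval lower bounds,
    merging runs of consecutive shape values that selected the same kernel."""
    bounds, kernels = [], []
    for t in sorted(kernel_by_shape):
        kernel = kernel_by_shape[t]
        if kernels and kernels[-1] == kernel:
            continue  # same kernel as the previous interval: extend it
        bounds.append(t)
        kernels.append(kernel)
    return bounds, kernels


def dispatch(bounds, kernels, t):
    # Select the interval whose lower bound is the largest one <= t;
    # values above the last bound fall into the last interval.
    i = bisect.bisect_right(bounds, t) - 1
    if i < 0:
        raise ValueError(f"no kernel covers T={t}")
    return kernels[i]


table = build_dispatch_table({1: "kernel_a", 2: "kernel_a", 3: "kernel_b", 4: "kernel_a"})
print(table)                # ([1, 3, 4], ['kernel_a', 'kernel_b', 'kernel_a'])
print(dispatch(*table, 2))  # kernel_a
```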

FYI, @comaniac @junrushao