Motivation

Arm Compute Library (ACL) is an open source project that provides hand-crafted assembler routines for Arm CPUs and GPUs. This integration looks at how we can accelerate CPU performance for Arm devices in TVM using ACL. The idea is that by offloading select operators from a relay graph to ACL, we can achieve faster inference times thanks to these optimized routines. Initially we expect this to improve performance for FP32 models; with further improvements to the integration, support will extend to quantized models and a wider range of operators.

Proposal

We have been working on integrating ACL using the BYOC infrastructure, which is itself still under active development. Our current implementation uses JSON as a level of abstraction between relay operators and ACL functions (or layers). Here is an overview of the flow from compilation to runtime we aim to achieve:

- Front-end graph (currently only NHWC is supported).

- Lower to relay graph.

- Run MergeComposite to create a one-to-one mapping of relay operators to ACL functions.

- Annotate the graph for ACL and partition it (we currently do this without the MergeCompilerRegions pass; the reasoning for this will follow shortly).

- Pre-process the parts of the graph destined for ACL to align with expected formats.

- Use the codegen stage to convert Relay operators annotated for ACL to JSON.

- Serialize JSON and constant tensors into mod.so.

ACL runtime module context

- Load mod.so and deserialize JSON and constant tensors.

- Create ACL functions from the JSON representation and cache them.

- The cached functions are exposed to the graph runtime as packed functions.

Running the generated module

- Run each ACL function as required by the graph runtime by retrieving from the cache and supplying input and output buffers.

Building with ACL support

The current implementation has two separate build options in CMake. This split exists because ACL cannot be used on an x86 machine, yet we still want to be able to compile an ACL runtime module on one.

- USE_ACL - Enabling this flag will add support for compiling an ACL runtime module.

- USE_GRAPH_RUNTIME_ACL - Enabling this flag will allow the graph runtime to compute the ACL offloaded functions when running a module.

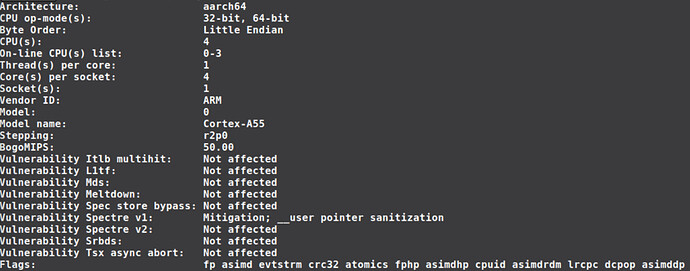

We expect typical usage will see USE_ACL enabled on an x86 “host” device and USE_GRAPH_RUNTIME_ACL enabled on an Aarch64 device.

We include a script under docker/ubuntu_install_acl.sh which pulls ACL from its GitHub repository and makes it easy to build ACL cross-compiled for Aarch64 for use within TVM. This script can also be added to the ci_cpu docker container build.

ACL Graph representation

ACL doesn’t have its own graph representation and should be viewed as a library that computes a single operator and returns the result, rather than computing a whole sub-graph. For this reason we offload operators to ACL one-by-one, i.e. we wrap each ACL-supported operator in its own relay function and offload it to the ACL runtime one operator at a time. This is why we don’t run the MergeCompilerRegions pass mentioned above.
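To make the difference between one-by-one offloading and merged regions concrete, here is a minimal sketch in plain Python. It assumes a relay graph can be modelled as a linear chain of operator names; the `partition` function, its `merge` flag and the `"acl"`/`"tvm"` target labels are hypothetical illustrations, not TVM's partitioning API, which works on relay IR.

```python
from itertools import groupby
from typing import List, Set, Tuple

# Hypothetical model: a region is (target, operators in that region).
Region = Tuple[str, List[str]]


def partition(ops: List[str], supported: Set[str], merge: bool) -> List[Region]:
    """Assign each operator to 'acl' or 'tvm' and group it into regions.

    merge=False mirrors the one-operator-per-function policy described above;
    merge=True mimics what merging adjacent compiler regions would produce.
    """
    tagged = [("acl" if op in supported else "tvm", op) for op in ops]
    regions: List[Region] = []
    for target, group in groupby(tagged, key=lambda t: t[0]):
        names = [op for _, op in group]
        if target == "acl" and not merge:
            # One region per offloaded operator: ACL computes a single op at a time.
            regions.extend((target, [name]) for name in names)
        else:
            # Consecutive operators for the same target share one region.
            regions.append((target, names))
    return regions
```

For example, with `ops = ["conv2d", "maxpool2d", "reshape", "softmax"]` and the first three supported, `merge=False` yields three single-operator ACL regions followed by one TVM region, whereas `merge=True` would hand ACL a three-operator sub-graph it has no way to execute as a unit.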

As new features are added to BYOC this approach may change in the future.

Codegen and compilation

The process of offloading partitioned subgraphs to ACL starts at compilation. The aim here is to align with the expectations of ACL and convert the relay “sub-graphs” into a format that the ACL runtime understands. First, we pre-process the functions that the codegen receives. Currently, we use the ConvertLayout and FoldConstant passes to convert the kernel layout from HWIO (TVM’s default for NHWC) to OHWI (the layout ACL expects). After this, we generate JSON from the operators we receive. The output of the codegen module is an ACLModule, which contains a serialized JSON representation of the operators and the serialized constant tensors.
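The effect of the layout conversion on a constant kernel, and the split between a JSON operator description and separately stored constants, can be sketched with NumPy. This is only an illustration under stated assumptions: the JSON schema (`"op"`, `"attrs"`, `"weight"`, the `"weight_0"` key) and the `serialize_conv2d` helper are hypothetical and do not reflect the actual ACLModule format; in TVM the transpose is performed by ConvertLayout and then folded by FoldConstant.

```python
import json

import numpy as np


def hwio_to_ohwi(weight: np.ndarray) -> np.ndarray:
    """Reorder a conv2d kernel from HWIO (TVM's NHWC default) to OHWI (ACL)."""
    # HWIO axes are 0=H, 1=W, 2=I, 3=O, so OHWI is the permutation (3, 0, 1, 2).
    return np.ascontiguousarray(weight.transpose(3, 0, 1, 2))


def serialize_conv2d(weight_hwio: np.ndarray, strides, padding):
    """Hypothetical codegen step: a JSON node plus constants stored separately."""
    weight = hwio_to_ohwi(weight_hwio)
    constants = {"weight_0": weight}  # serialized alongside, not inside, the JSON
    node = {
        "op": "conv2d",
        "attrs": {
            "strides": list(strides),
            "padding": list(padding),
            "kernel_layout": "OHWI",
        },
        # The JSON only references the constant by name, shape and dtype.
        "weight": {"const": "weight_0", "shape": list(weight.shape), "dtype": str(weight.dtype)},
    }
    return json.dumps(node), constants
```

A 3x3 kernel with 4 input and 8 output channels (HWIO shape `(3, 3, 4, 8)`) comes out with OHWI shape `(8, 3, 3, 4)`, and element `[o, h, w, i]` of the result equals element `[h, w, i, o]` of the input. Keeping the constants out of the JSON keeps the operator description small and lets the tensors be serialized in a binary form.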

Runtime Support

We implement ACLModule to translate the JSON produced by codegen into ACL API calls. When an ACL module is compiled, it only contains the JSON operator descriptions and constant data needed to create each layer in ACL; we defer the actual creation of ACL layers until runtime. This is because ACL is not cross-platform, so layers cannot be created on an x86 compilation host. When the graph runtime is created, each ACL layer is configured and cached in the module separately. This way we eliminate the overhead of repeatedly creating and preparing these layers across multiple inferences. ACL also applies an additional weight transformation for specific convolutions; since these layers are cached, this transformation only occurs once, when the runtime is created.
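The create-once, run-many caching strategy can be sketched as follows. `ReluLayer`, `LAYER_TYPES` and `CachedRuntime` are hypothetical stand-ins (the real module is C++ and builds actual ACL layers); the point is only that JSON is deserialized and each layer is configured once when the runtime is created, after which every inference just looks the layer up and supplies input and output buffers.

```python
import json
from typing import Dict, List


class ReluLayer:
    """Hypothetical stand-in for a configured ACL layer (not the ACL API)."""

    def __init__(self, attrs: dict):
        # Any expensive configure/prepare work (e.g. weight reshaping) would happen here, once.
        self.attrs = attrs

    def run(self, inp: List[float], out: List[float]) -> None:
        # Writes results into a caller-supplied output buffer.
        for i, x in enumerate(inp):
            out[i] = max(0.0, x)


LAYER_TYPES = {"relu": ReluLayer}


class CachedRuntime:
    """Build every layer when the runtime is created, then reuse it per inference."""

    def __init__(self, graph_json: str):
        nodes = json.loads(graph_json)
        self.builds = 0
        self.cache: Dict[str, ReluLayer] = {}
        for name, node in nodes.items():
            self.cache[name] = LAYER_TYPES[node["op"]](node.get("attrs", {}))
            self.builds += 1

    def __call__(self, name: str, inp: List[float], out: List[float]) -> None:
        # Exposed to the graph runtime like a packed function: look up and run.
        self.cache[name].run(inp, out)
```

Running `rt = CachedRuntime('{"acl_0": {"op": "relu"}}')` and then calling `rt("acl_0", inp, out)` repeatedly executes the same cached layer each time, so `rt.builds` stays at 1 regardless of how many inferences are run.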

Another optimization we implement at this level is allowing ACL to request auxiliary memory from TVM. This is working memory that ACL needs to perform some operations (currently this only affects convolution). By requesting this memory directly from the TVM device API, we observed a performance improvement of around 1.2x compared to using ACL-backed memory.
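The idea of letting the host supply auxiliary memory can be sketched as a layer that reports how much scratch space it needs and a runtime that asks an external allocator for it. Everything here is hypothetical: `Layer`, `workspace_bytes`, `attach_workspaces` and the `alloc` callback (standing in for the TVM device API) are illustrative names, not the ACL or TVM interfaces.

```python
from typing import Callable, Dict, Optional


class Layer:
    """Hypothetical layer that may need scratch memory (like ACL convolution)."""

    def __init__(self, workspace_bytes: int):
        self.workspace_bytes = workspace_bytes
        self.workspace: Optional[bytearray] = None

    def set_workspace(self, buf: bytearray) -> None:
        self.workspace = buf


def attach_workspaces(layers: Dict[str, Layer], alloc: Callable[[int], bytearray]) -> int:
    """Request each layer's scratch space from a host allocator; return total bytes.

    `alloc` stands in for the TVM device API; layers needing no scratch are skipped.
    """
    total = 0
    for layer in layers.values():
        if layer.workspace_bytes > 0:
            layer.set_workspace(alloc(layer.workspace_bytes))
            total += layer.workspace_bytes
    return total
```

Because the allocation happens once when layers are set up (rather than inside every inference) and comes from the host allocator, TVM stays in control of where the memory lives, which is the property the 1.2x figure above relates to.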

Operator Support

Currently the integration provides support for the following operators using FP32 precision:

- [pad] + conv2d + [bias_add] + [relu], where [] denotes an optional operator.

- maxpool2d

- reshape

This RFC is only intended to be “initial”; support for a wider range of operators will follow.
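The optional-operator notation in the conv2d entry above is what MergeComposite turns into a single composite function. The following sketch approximates that grouping over a linear chain of operator names. It is a hypothetical illustration only: TVM expresses such patterns with its relay dataflow pattern language, and `match_conv2d_composite` and `merge_composites` are names invented for this example.

```python
from typing import List, Optional, Tuple


def match_conv2d_composite(ops: List[str], start: int) -> Optional[Tuple[int, List[str]]]:
    """Match [pad] + conv2d + [bias_add] + [relu] at ops[start].

    Returns (index after the match, matched operators), or None if no conv2d.
    """
    i = start
    matched: List[str] = []
    if i < len(ops) and ops[i] == "pad":  # optional leading pad
        matched.append(ops[i])
        i += 1
    if i >= len(ops) or ops[i] != "conv2d":  # conv2d is mandatory
        return None
    matched.append(ops[i])
    i += 1
    for optional in ("bias_add", "relu"):  # optional trailing ops, in order
        if i < len(ops) and ops[i] == optional:
            matched.append(ops[i])
            i += 1
    return i, matched


def merge_composites(ops: List[str]) -> List[List[str]]:
    """Greedily group a chain into composites; unmatched ops stay on their own."""
    out: List[List[str]] = []
    i = 0
    while i < len(ops):
        m = match_conv2d_composite(ops, i)
        if m is not None:
            i, group = m
            out.append(group)
        else:
            out.append([ops[i]])
            i += 1
    return out
```

For the chain `pad, conv2d, bias_add, relu, maxpool2d, conv2d, relu, reshape` this produces four groups: the full padded convolution, `maxpool2d`, `conv2d + relu`, and `reshape`. Each group then maps one-to-one onto an ACL function.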

Testing

We currently have 4 different types of tests, all of which reside under python/contrib/test_acl.

- Under test_operatorname.py we have 2 types of tests:

  - The first requires USE_ACL and USE_GRAPH_RUNTIME_ACL to be set (or the use of a remote device). These tests check that each individual operator runs end-to-end and that the output matches that of TVM.

  - The second only requires USE_ACL and simply tests that the codegen JSON output is as expected. These tests bypass the ACL runtime.

- test_network.py includes end-to-end network tests (currently vgg16 and mobilenet). These tests offload only the implemented operators to ACL, while the remaining unsupported operators continue through the TVM stack. Again, the results are compared against the output of TVM.

- test_runtime.py tests elements of the runtime that haven’t been covered by the individual operator tests. Currently this consists of running multiple inferences on the same model and testing that multiple operators offloaded to ACL work as intended.

Our hope is that in the future the whole ACL implementation can be checked with an Aarch64 CI setup.

Future improvements

The integration in its current form doesn’t add support for most operators in ACL; it is mostly a proof of concept. Below is a series of items we hope to add or improve upon in the near future.

- Support a wider range of operators for FP32 (and FP16).

- Support for quantized operators.

- As BYOC evolves, we may offload whole sub-graphs to ACL (hopefully improving performance) and change the way constants are serialized (with the use of the upcoming JSON runtime).

Thanks, any thoughts are appreciated.