Here is the problem: I am trying to add a fused operator to relay.nn, and I get the following error.

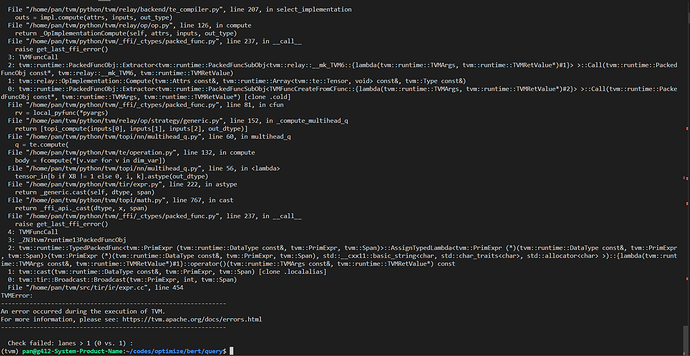

File "/home/pan/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 81, in cfun

rv = local_pyfunc(*pyargs)

File "/home/pan/tvm/python/tvm/relay/backend/te_compiler.py", line 320, in lower_call

best_impl, outputs = select_implementation(op, call.attrs, inputs, ret_type, target)

File "/home/pan/tvm/python/tvm/relay/backend/te_compiler.py", line 177, in select_implementation

all_impls = get_valid_implementations(op, attrs, inputs, out_type, target)

File "/home/pan/tvm/python/tvm/relay/backend/te_compiler.py", line 113, in get_valid_implementations

assert fstrategy is not None, (

AssertionError: nn.multihead_q doesn't have an FTVMStrategy registered. You can register one in python with `tvm.relay.op.register_strategy`.

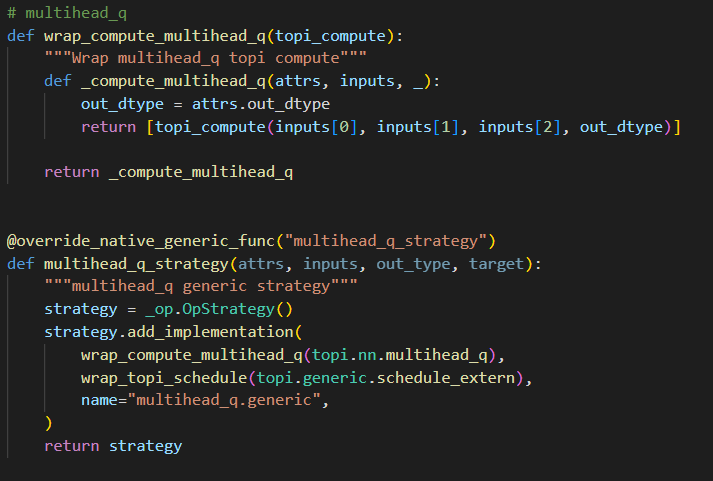

But I did register a strategy. I followed the "Adding an Operator to Relay" guide (tvm 0.13.dev0 documentation), and in ~/tvm/python/tvm/relay/op/strategy/generic.py I wrote this code:

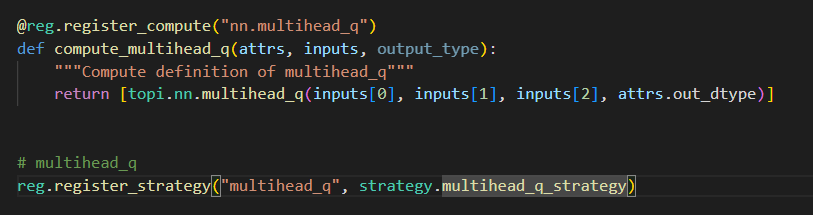

And in ~/tvm/python/tvm/relay/op/nn/_nn.py, I wrote:

But the strategy is still None. Does anyone know the reason? Or has anyone successfully added a new operator to Relay? Could you please share the code?
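To make the failure mode concrete: the assertion in the traceback fires when the lookup of the op's FTVMStrategy attribute returns None. Below is a toy, TVM-free sketch of a name-keyed attribute lookup of that kind. Everything in it (`_OP_ATTRS`, `register_attr`, `get_attr`, and the body of `multihead_q_strategy`) is hypothetical and only illustrates one possible cause, a mismatch between the name used at registration and the name the compiler looks up. It is not TVM's implementation and may not be what is happening here.

```python
# Toy model only: a dict-based stand-in for an operator attribute registry.
# None of these helpers are TVM APIs; they are assumptions for illustration.
_OP_ATTRS = {}


def register_attr(op_name, attr_key, value):
    """Store value under (op_name, attr_key); the op name must match exactly."""
    _OP_ATTRS.setdefault(op_name, {})[attr_key] = value


def get_attr(op_name, attr_key):
    """Return the registered value, or None if nothing was registered."""
    return _OP_ATTRS.get(op_name, {}).get(attr_key)


def multihead_q_strategy(attrs, inputs, out_type, target):
    """Hypothetical strategy function; its body does not matter for the lookup."""
    return "strategy"


# Registering under a name that differs from the op's full name...
register_attr("multihead_q", "FTVMStrategy", multihead_q_strategy)

# ...leaves the lookup for the full name empty, which is the None the assert sees.
missing = get_attr("nn.multihead_q", "FTVMStrategy")

# Registering under the exact name makes the same lookup succeed.
register_attr("nn.multihead_q", "FTVMStrategy", multihead_q_strategy)
found = get_attr("nn.multihead_q", "FTVMStrategy")
```

In this toy model the same None would also appear if the line that performs the registration never ran, for example because Python imported a different copy of the package than the one that was edited. That is likewise only a guess for this case.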
