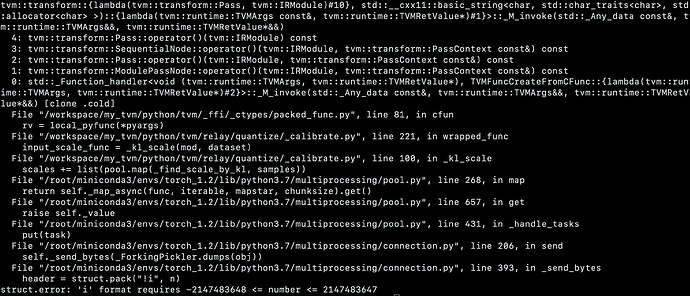

Hi friends, I am currently trying to quantize a model following this tutorial: http://tvm.apache.org/docs/tutorials/frontend/deploy_quantized.html#sphx-glr-tutorials-frontend-deploy-quantized-py. However, when I set `data_aware = True` so that the model is calibrated on my own images, quantization fails with the error shown in the screenshot above.

Here is my quantize method:

```python
import glob

from tvm import relay


def quantize(mod, params, data_aware):
    print("starting quantize your model ... ")
    if data_aware:
        # Calibrate on real images, choosing scales by KL divergence
        list_of_files = glob.glob("/data00/cuiqing.li/image_text_1k/*")
        with relay.quantize.qconfig(calibrate_mode="kl_divergence", weight_scale="max"):
            mod = relay.quantize.quantize(mod, params, dataset=calibrate_dataset(list_of_files))
    else:
        # No calibration data: use one fixed global scale
        with relay.quantize.qconfig(calibrate_mode="global_scale", global_scale=8.0):
            mod = relay.quantize.quantize(mod, params)
    print("finished quantizing your model ... ")
    return mod
```

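And here is my definition of `calibrate_dataset()`, together with the `image_reader()` helper it uses:

```python
import numpy as np
from PIL import Image


def image_reader(image_path):
    # Load the image and resize it to the model's 640x640 input size
    my_image = Image.open(image_path)
    my_image = my_image.resize((640, 640))
    data = np.asarray(my_image)
    # HWC -> CHW, then add a batch dimension and cast: (1, C, 640, 640), float32
    data = np.transpose(data, axes=[2, 0, 1])
    data = np.expand_dims(data, axis=0).astype("float32")
    return data
```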
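To double-check what `image_reader()` produces without touching the image files, here is a small sketch that runs the same transpose/expand_dims steps on a synthetic array. The random `fake_image` is only a stand-in for a resized 640x640 RGB picture (an assumption, not one of my real files). It also shows how large one float32 calibration input is:

```python
import numpy as np

# Synthetic stand-in for a resized 640x640 RGB image (HWC layout, uint8)
fake_image = np.random.randint(0, 256, size=(640, 640, 3), dtype=np.uint8)

# Same steps as image_reader(): HWC -> CHW, add batch dim, cast to float32
data = np.transpose(fake_image, axes=[2, 0, 1])
data = np.expand_dims(data, axis=0).astype("float32")

print(data.shape)   # (1, 3, 640, 640)
print(data.nbytes)  # 4915200 bytes, about 4.9 MB per image
```

Note that a grayscale image (a 2-D array) would make the `axes=[2, 0, 1]` transpose fail, and an RGBA image would give 4 channels; calling `my_image.convert("RGB")` before converting to an array is one way to guard against that, assuming the model expects 3 channels.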

```python
def calibrate_dataset(list_of_files):
    # Yield one {"data": ...} batch per image file for calibration
    for i, file_name in enumerate(list_of_files):
        print(file_name)
        print(i)
        data = image_reader(file_name)
        yield {"data": data}
```
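If memory use grows with the number of calibration images (my assumption: the folder name suggests about 1k images, and I believe the KL-divergence calibration keeps intermediate outputs for the samples it sees), one thing to try is feeding only a small subset. The tutorial's own `calibrate_dataset` stops after a fixed `calibration_samples` count; below is a sketch of the same idea for my generator. `calibrate_dataset_capped`, `max_samples`, `reader` and `dummy_reader` are hypothetical names introduced here, and `reader` would be `image_reader` in practice:

```python
import itertools

import numpy as np


def calibrate_dataset_capped(list_of_files, reader, max_samples=10):
    """Yield at most `max_samples` calibration batches (hypothetical helper)."""
    # islice stops the iteration early, so files past max_samples are never read
    for i, file_name in enumerate(itertools.islice(list_of_files, max_samples)):
        print(i, file_name)
        yield {"data": reader(file_name)}


# Dummy reader in place of image_reader, so no image files are needed
def dummy_reader(_path):
    return np.zeros((1, 3, 640, 640), dtype="float32")


files = [f"img_{k}.jpg" for k in range(1000)]
batches = list(calibrate_dataset_capped(files, dummy_reader))
print(len(batches))  # 10
```

In `quantize()` this would be passed as `dataset=calibrate_dataset_capped(list_of_files, image_reader, max_samples=10)`; 10 is only an example value, not something I have tuned.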

It looks to me like the machine runs out of memory. Is there some way to solve this?