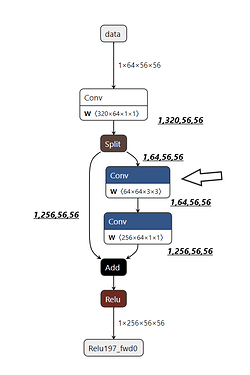

I used TASO to build a simple NN (see the image above):

- Environment: onnx=1.12; tvm=0.11.dev0

The bold, italic text shows the output tensor shapes inferred by TASO; the arrow marks where I guess the error occurred.

ERROR MESSAGE:

The Relay type checker is unable to show the following types match:

Tensor[(64, 160, 3, 3), float32]

Tensor[(64, 64, 3, 3), float32]

In particular:

dimension 1 conflicts: 160 does not match 64.

The Relay type checker is unable to show the following types match.

In particular `Tensor[(64, 64, 3, 3), float32]` does not match `Tensor[(64, 160, 3, 3), float32]`
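To make the two shapes in the message concrete, here is a minimal plain-Python sketch of the shape rule that seems to be involved. It does not call TVM or ONNX. For a 2-D convolution with NCHW data and OIHW weights, the weight is expected to have shape `(out_channels, in_channels / groups, kH, kW)`. The helper names (`expected_conv2d_weight_shape`, `first_mismatch`) and the 56x56 spatial size are hypothetical, and I am assuming, not confirming, that the data tensor reaching the failing Conv has 160 channels while its stored weight was built for 64.

```python
from typing import Optional, Sequence, Tuple


def expected_conv2d_weight_shape(
    data_shape: Sequence[int],
    out_channels: int,
    kernel_size: Tuple[int, int],
    groups: int = 1,
) -> Tuple[int, int, int, int]:
    """Weight shape (OIHW) implied by an NCHW input for a 2-D convolution."""
    in_channels = data_shape[1]
    if in_channels % groups != 0:
        raise ValueError("input channels must be divisible by groups")
    return (out_channels, in_channels // groups, kernel_size[0], kernel_size[1])


def first_mismatch(
    expected: Sequence[int], actual: Sequence[int]
) -> Optional[Tuple[int, int, int]]:
    """Return (dim, expected, actual) for the first differing dimension, or None."""
    for dim, (e, a) in enumerate(zip(expected, actual)):
        if e != a:
            return dim, e, a
    return None


# Assumption: the Conv input has 160 channels (the 56x56 spatial size is made up);
# only the channel counts 160 and 64 come from the error message.
data_shape = (1, 160, 56, 56)
actual_weight = (64, 64, 3, 3)

expected_weight = expected_conv2d_weight_shape(data_shape, 64, (3, 3))
mismatch = first_mismatch(expected_weight, actual_weight)
print(expected_weight, actual_weight, mismatch)
```

If that assumption holds, the conflict on dimension 1 would mean the tensor feeding the Conv has a different channel count than the one its weight was created for, so the shape TASO inferred for the preceding node is worth comparing against what the exported ONNX graph actually contains.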

---------------------------------------------------------------------------

DiagnosticError Traceback (most recent call last)

Input In [19], in <cell line: 1>()

----> 1 mod, params = relay.frontend.from_onnx(split, shape_dict,freeze_params=True)

File /public/apps/tvm/python/tvm/relay/frontend/onnx.py:5966, in from_onnx(model, shape, dtype, opset, freeze_params, convert_config)

5964 # Use the graph proto as a scope so that ops can access other nodes if needed.

5965 with g:

-> 5966 mod, params = g.from_onnx(graph, opset)

5968 if freeze_params:

5969 mod = relay.transform.DynamicToStatic()(mod)

File /public/apps/tvm/python/tvm/relay/frontend/onnx.py:5627, in GraphProto.from_onnx(self, graph, opset, get_output_expr)

5625 self._check_user_inputs_in_outermost_graph_scope()

5626 self._check_for_unsupported_ops(graph)

-> 5627 self._construct_nodes(graph)

5629 # now return the outputs

5630 outputs = [self._nodes[self._parse_value_proto(i)] for i in graph.output]

File /public/apps/tvm/python/tvm/relay/frontend/onnx.py:5739, in GraphProto._construct_nodes(self, graph)

5736 attr["tvm_custom"]["name"] = i_name

5737 attr["tvm_custom"]["num_outputs"] = len(node_output)

-> 5739 op = self._convert_operator(op_name, inputs, attr, self.opset)

5740 if not isinstance(op, _expr.TupleWrapper):

5741 outputs_num = 1

File /public/apps/tvm/python/tvm/relay/frontend/onnx.py:5850, in GraphProto._convert_operator(self, op_name, inputs, attrs, opset)

5848 sym = get_relay_op(op_name)(*inputs, **attrs)

5849 elif op_name in convert_map:

-> 5850 sym = convert_map[op_name](inputs, attrs, self._params)

5851 else:

5852 raise NotImplementedError("Operator {} not implemented.".format(op_name))

File /public/apps/tvm/python/tvm/relay/frontend/onnx.py:607, in Conv._impl_v1(cls, inputs, attr, params)

605 data = inputs[0]

606 kernel = inputs[1]

--> 607 input_shape = infer_shape(data)

608 ndim = len(input_shape)

610 kernel_type = infer_type(inputs[1])

File /public/apps/tvm/python/tvm/relay/frontend/common.py:526, in infer_shape(inputs, mod)

524 def infer_shape(inputs, mod=None):

525 """A method to get the output type of an intermediate node in the graph."""

--> 526 out_type = infer_type(inputs, mod=mod)

527 checked_type = out_type.checked_type

528 if hasattr(checked_type, "shape"):

529 # Regular operator that outputs tensors

File /public/apps/tvm/python/tvm/relay/frontend/common.py:501, in infer_type(node, mod)

498 if mod is not None:

499 new_mod.update(mod)

--> 501 new_mod = _transform.InferType()(new_mod)

502 entry = new_mod["main"]

503 ret = entry if isinstance(node, _function.Function) else entry.body

File /public/apps/tvm/python/tvm/ir/transform.py:161, in Pass.__call__(self, mod)

147 def __call__(self, mod):

148 """Execute the pass. Note that for sequential pass, the dependency among

149 different passes will be resolved in the backend.

150

(...)

159 The updated module after applying this pass.

160 """

--> 161 return _ffi_transform_api.RunPass(self, mod)

File /public/apps/tvm/python/tvm/_ffi/_ctypes/packed_func.py:237, in PackedFuncBase.__call__(self, *args)

225 ret_tcode = ctypes.c_int()

226 if (

227 _LIB.TVMFuncCall(

228 self.handle,

(...)

235 != 0

236 ):

--> 237 raise get_last_ffi_error()

238 _ = temp_args

239 _ = args

DiagnosticError: Traceback (most recent call last):

8: TVMFuncCall

7: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::TypedPackedFunc<tvm::IRModule (tvm::transform::Pass, tvm::IRModule)>::AssignTypedLambda<tvm::transform::__mk_TVM9::{lambda(tvm::transform::Pass, tvm::IRModule)#1}>(tvm::transform::__mk_TVM9::{lambda(tvm::transform::Pass, tvm::IRModule)#1}, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >)::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*)#1}> >::Call(tvm::runtime::PackedFuncObj const*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, tvm::runtime::TVMRetValue)

6: tvm::transform::Pass::operator()(tvm::IRModule) const

5: tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const

4: tvm::transform::ModulePassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const

3: _ZN3tvm7runtime13PackedFun

2: tvm::runtime::TypedPackedFunc<tvm::IRModule (tvm::IRModule, tvm::transform::PassContext)>::AssignTypedLambda<tvm::relay::transform::InferType()::{lambda(tvm::IRModule, tvm::transform::PassContext const&)#1}>(tvm::relay::transform::InferType()::{lambda(tvm::IRModule, tvm::transform::PassContext const&)#1})::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*)#1}::operator()(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*) const

1: tvm::DiagnosticContext::Render()

0: _ZN3tvm7runtime6deta

File "/public/apps/tvm/src/ir/diagnostic.cc", line 105

DiagnosticError: one or more error diagnostics were emitted, please check diagnostic render for output.

I wonder why these tensor types fail the Relay type check.