Hi guys, I have a question. Suppose I have a TensorFlow model with a dynamic batch size. Normally we have to give a fixed input shape such as (1, 224, 224, 3) to relay.frontend.from_tensorflow(). Is there a way to pass (relay.Any(), 224, 224, 3) to relay.frontend.from_tensorflow() instead? I tried it, but it doesn't seem to work.

We noticed this PR for the TensorRT engine: [BYOC][TensorRT] Reuse TRT engines based on max_batch_size for dynamic batching, improve device buffer allocation (apache/tvm Pull Request #8172 by trevor-m), but it doesn't seem to solve the problem above.

I tried the following code:

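```python
import numpy as np
import tvm
from tvm import relay
from tvm.relay.frontend import from_tensorflow
from tvm.relay.op.contrib.tensorrt import partition_for_tensorrt

# graph_def, layout, dtype and try_vm are defined earlier in the script.
if try_vm:
    # Leave the batch dimension dynamic.
    shape = (relay.Any(), 224, 224, 3)
shape_dict = {"input": shape}
outputs = ['fc2_softmax2']
mod, params = from_tensorflow(graph_def, layout=layout, shape=shape_dict)
print("Tensorflow protobuf imported to relay frontend.")

# Run BYOC: offload supported subgraphs to TensorRT.
mod, config = partition_for_tensorrt(mod, params, remove_no_mac_subgraphs=True)
target = "cuda"

if try_vm:
    with tvm.transform.PassContext(opt_level=3, config={'relay.ext.tensorrt.options': config}):
        relay_exec = relay.create_executor(
            "vm", mod=mod, device=tvm.cuda(1), target=target
        )
    print("set data")
    # Concrete batch size used at run time.
    shape = (2, 224, 224, 3)
    input_data = (np.random.uniform(size=shape)).astype(dtype)
    tvm_input = tvm.nd.array(input_data)
    output = relay_exec.evaluate()(tvm_input)
    print(output)
```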

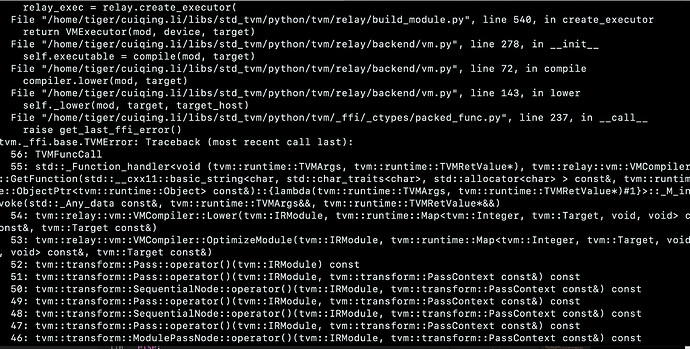

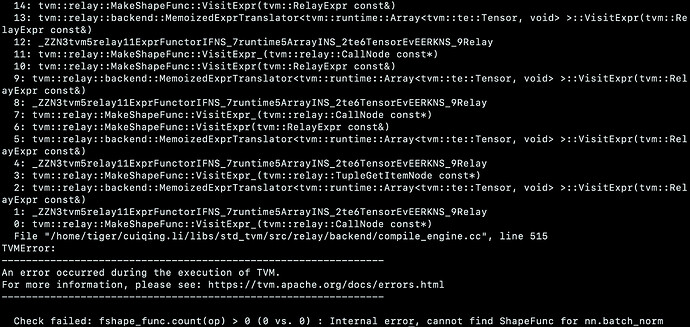

It looks like relay.frontend.from_tensorflow does support dynamic-shape input, but running the code gives me the following error message:

Does anyone know what's wrong?