Hi all, I ran into an interesting problem with exception handling in the codegen stage of TVM.

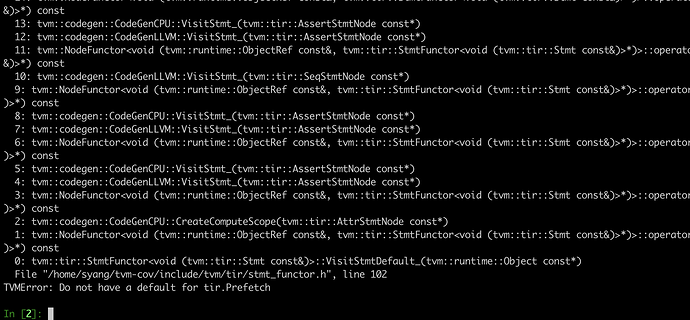

Last month I asked a question ("TVM failed to build prefetch statement"). When I reviewed it today, it seemed to have been fixed by a commit: running the code now produces an error message rather than a crash.

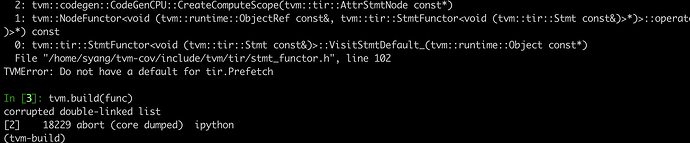

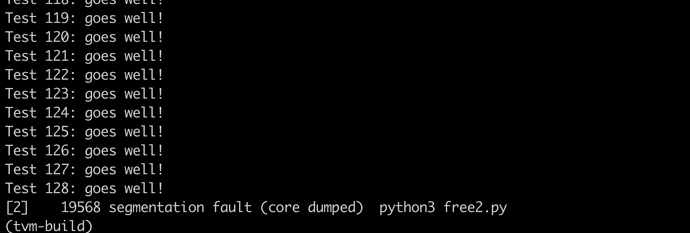

Everything goes well until I try to build the function several times in a row. Then something strange happens: the program crashes.

Here is the code snippet that triggers the crash:

    import tvm
    from tvm import te

    A = te.placeholder((25, 100, 4), name="A")
    _A = tvm.tir.decl_buffer(A.shape, A.dtype, name="A")
    i = te.size_var("i")
    j = te.size_var("j")
    # One (min, extent) range per buffer dimension.
    region = [tvm.ir.Range.from_min_extent(lo, ext) for lo, ext in [(i, 2), (j, 8), (0, 4)]]
    stmt = tvm.tir.Prefetch(_A, region)
    func = tvm.tir.PrimFunc([_A.data, i, j], stmt, buffer_map={_A.data: _A})

    count = 0
    while True:
        try:
            tvm.build(func)
        except Exception:
            # The build is expected to fail with an error message, not a crash.
            count += 1
            print(f'Test {count}: goes well!')

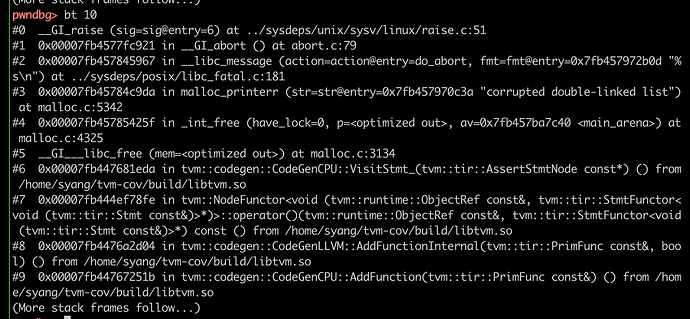

According to the gdb backtrace, my guess is that when something goes wrong in the codegen stage, TVM only throws the exception and does not clean up the resources it was using. That seems potentially unsafe: the process may be left in an inconsistent state, so a later attempt to build a function can crash.

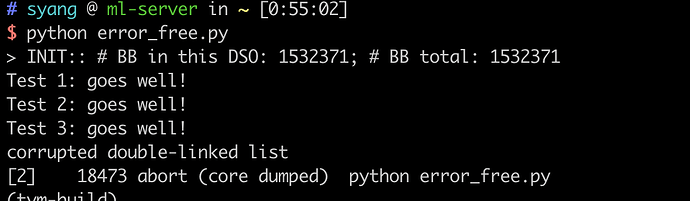
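As a complement to the gdb backtrace, Python's standard `faulthandler` module can dump the Python-level traceback when the process receives a fatal signal such as SIGSEGV, which would show which call was active at the time of the crash. A minimal sketch; the helper name `enable_crash_log` and the log file path are hypothetical and not part of TVM:

```python
import faulthandler


def enable_crash_log(path="tvm_crash_traceback.log"):
    """Dump Python tracebacks to `path` if the process hits a fatal signal.

    `enable_crash_log` and the default path are illustrative names only.
    """
    # The file must stay open for as long as faulthandler is enabled,
    # so it is returned to the caller instead of being closed here.
    log = open(path, "w")
    faulthandler.enable(file=log, all_threads=True)
    return log
```

Calling `enable_crash_log()` once at the top of the reproduction script, before the `while True` loop, should be enough; after a crash the log file would contain the Python stack of every thread.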

To test this assumption, I used another snippet that triggers the crash:

    import tvm

    buf = tvm.tir.buffer.decl_buffer((1,))
    value = tvm.tir.IntImm('int32', 1)
    i = tvm.tir.Broadcast(1, 4)
    index = tvm.tir.Shuffle([i, i], [i])
    s = tvm.tir.Store(buf.data, value, index)
    f = tvm.tir.PrimFunc({buf.data}, s)

    count = 0
    while True:
        try:
            tvm.build(f)
        except Exception:
            # The build is expected to fail with an error message, not a crash.
            count += 1
            print(f'Test {count}: goes well!')

Normally, building this function raises the exception `Shuffled indeces are suppose to be int, but get x4(1)`. However, if we keep building it repeatedly, the program eventually crashes.
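One way to check whether the crash depends on state left over from earlier failed builds is to run each build attempt in a fresh Python process and compare the exit statuses. The following is only a sketch under that assumption; `run_isolated` and `describe` are hypothetical helper names, and the signal interpretation of negative return codes applies to POSIX systems only:

```python
import subprocess
import sys


def run_isolated(snippet, timeout=300):
    """Run `snippet` in a fresh interpreter; return (returncode, stderr)."""
    proc = subprocess.run(
        [sys.executable, "-c", snippet],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return proc.returncode, proc.stderr


def describe(returncode):
    """Summarize an exit status (negative means killed by a signal on POSIX)."""
    if returncode < 0:
        return f"killed by signal {-returncode}"
    if returncode == 0:
        return "exited normally"
    return f"exited with status {returncode}"
```

For example, passing a script that calls `tvm.build(f)` exactly once, without the `try`/`except`, should make every run exit with status 1 and the error message on stderr. If no single-build process is ever killed by a signal while the in-process loop does crash, that would be consistent with the crash being caused by state that accumulates across failed builds.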

I am quite curious about the root cause, and I'd appreciate any help.
