Hi,

Although this is not my first time using TVM, I still have some basic questions:

- When I run two programs that train two models concurrently on a single GPU, training speed drops. Will this affect the accuracy of TVM auto-tuning? For example, suppose I am training a model on one GPU and, at the same time, start a TVM auto-tuning script on that same GPU. Will the performance of the tuned model differ from that of a model tuned on an idle GPU? (I ask because each operator's measured inference speed would differ from what it would be on an idle GPU.)

-

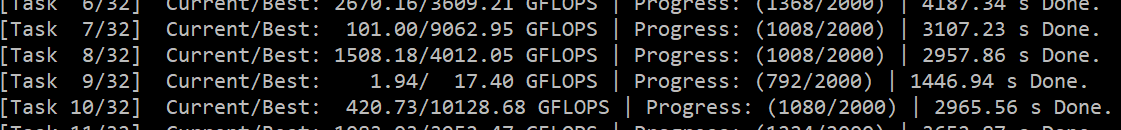

There are many tasks when I tuned my model, my log is like this:

Are these tasks independant to each other, such that we could start many threads to tune multi tasks in parallel? If they are indepandant, do you recommand to me to do so? -

Is there concept of fp16 and fp32 inference in tvm? I mean, do we need to assign a flag to tell tvm to tune and run the model in fp16 or fp32 mode? Or will tvm do that automatically, such that the tuned model and the built engine are mixed-precision, where some operators run in fp16 mode and the others in fp32 mode?
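To make the question about parallel tuning concrete, this is roughly the kind of parallelism I have in mind. It is only a sketch: `tune_task` is a hypothetical placeholder for tuning one task (it is not a TVM function and does no real tuning), and the task labels are made up. Each task is handed to its own worker thread. Whether this is safe in practice is exactly what I am asking, since all threads would share the same GPU.

```python
from concurrent.futures import ThreadPoolExecutor


def tune_task(task_name):
    # Hypothetical placeholder for tuning a single task.
    # NOT a TVM API; it only returns a status string.
    return f"{task_name}: tuned"


def tune_all_in_parallel(task_names, max_workers=4):
    """Run tune_task for each task in a thread pool.

    pool.map returns results in the same order as task_names,
    regardless of which thread finishes first.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(tune_task, task_names))


if __name__ == "__main__":
    tasks = ["task 1", "task 2", "task 3"]  # made-up task labels
    for line in tune_all_in_parallel(tasks):
        print(line)
```

If the tasks do share GPU time, running them this way could presumably skew each task's measurements in the same way as the concurrent training case in the first question.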