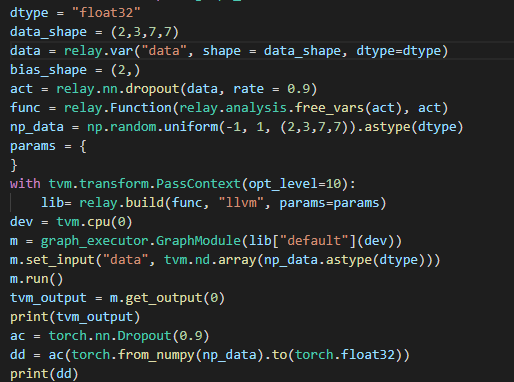

I only use nn.dropout, but the result seems to be the same as the original array. Does that mean no element in the array will be zeroed?

Dropout is usually a training-time-only operation; during inference all nodes are kept.

Since TVM does not support training right now, dropout is basically removed from any graph.
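To illustrate why the output matches the input, here is a minimal pure-Python sketch of inverted dropout. The `dropout` helper and its parameters are made up for illustration and are not a TVM or PyTorch API; the point is only that the inference path returns the values unchanged, while the training path zeroes some of them and rescales the rest.

```python
import random


def dropout(values, p=0.5, training=False, rng=None):
    """Inverted dropout on a flat list of floats (hypothetical helper)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # Inference: every element is kept, so the output equals the input.
        return list(values)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    # Training: zero each element with probability p, rescale the survivors.
    return [0.0 if rng.random() < p else v * scale for v in values]


x = [1.0, 2.0, 3.0, 4.0]
inference_out = dropout(x, p=0.5, training=False)
training_out = dropout(x, p=0.5, training=True, rng=random.Random(0))
print(inference_out)  # identical to x
print(training_out)   # each element is either 0.0 or twice the input
```

A graph compiled for inference corresponds to the `training=False` path, so seeing no zeros is expected.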

Thank you!! @AndrewZhaoLuo

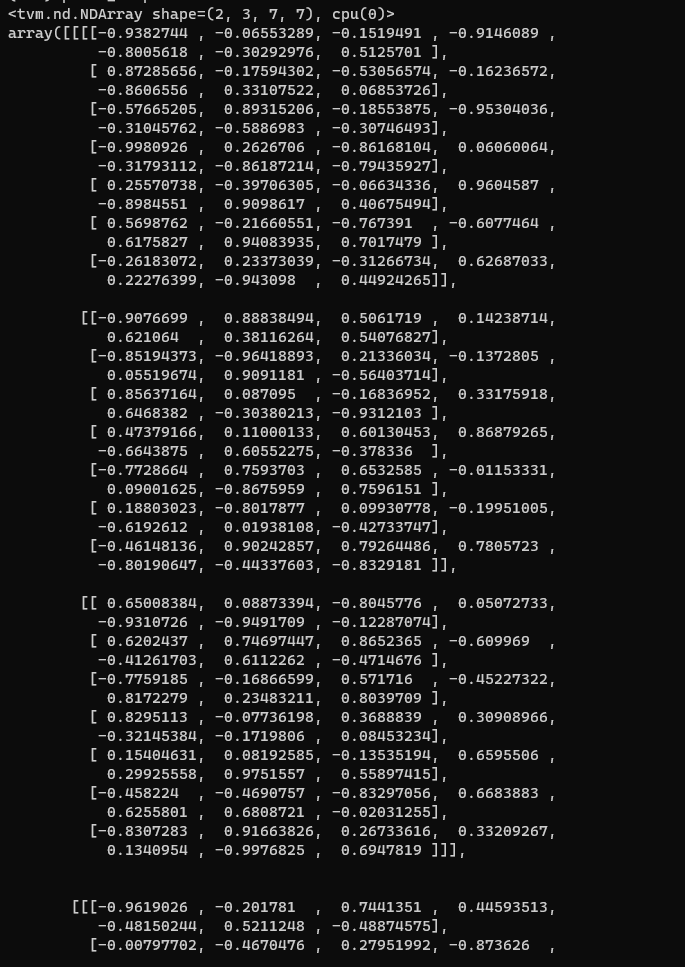

I’m sorry to disturb you again. The “adaptive_avg_pool2d” operator behaves differently in PyTorch and TVM Relay. I’m looking forward to your reply.

Hey jkun, how so? IIRC those operators are labeled as experimental. It could also be a slight difference in the operator specification between PyTorch and TVM, which can be solved by tweaking the conversion process.
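One way to check for a specification difference is to compare both outputs against a small reference. Below is a rough pure-Python sketch for a single-channel 2D input. It assumes the floor/ceil window rule that, as far as I know, PyTorch uses for adaptive pooling (start = floor(i * in / out), end = ceil((i + 1) * in / out)); whether TVM matches it in every case is not confirmed here. The function names are made up for illustration.

```python
def adaptive_windows(in_size, out_size):
    """Return one (start, end) index pair per output position.

    Assumed rule: start = floor(i * in / out), end = ceil((i + 1) * in / out),
    computed with integer arithmetic to avoid float rounding.
    """
    return [
        (
            (i * in_size) // out_size,
            ((i + 1) * in_size + out_size - 1) // out_size,
        )
        for i in range(out_size)
    ]


def adaptive_avg_pool2d_ref(image, out_h, out_w):
    """Average each window of a 2D list of floats (single channel)."""
    rows = adaptive_windows(len(image), out_h)
    cols = adaptive_windows(len(image[0]), out_w)
    out = []
    for r0, r1 in rows:
        out_row = []
        for c0, c1 in cols:
            window = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


# Windows overlap when the input size is not a multiple of the output size.
print(adaptive_windows(5, 3))  # [(0, 2), (1, 4), (3, 5)]

image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
print(adaptive_avg_pool2d_ref(image, 2, 2))
```

If one framework disagrees with this reference only when the input size is not divisible by the output size, the window rule is the likely culprit rather than precision.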

In fact, I compared the result arrays of “adaptive_avg_pool2d” between PyTorch and TVM. Some elements in the two arrays are not equal, possibly due to precision.

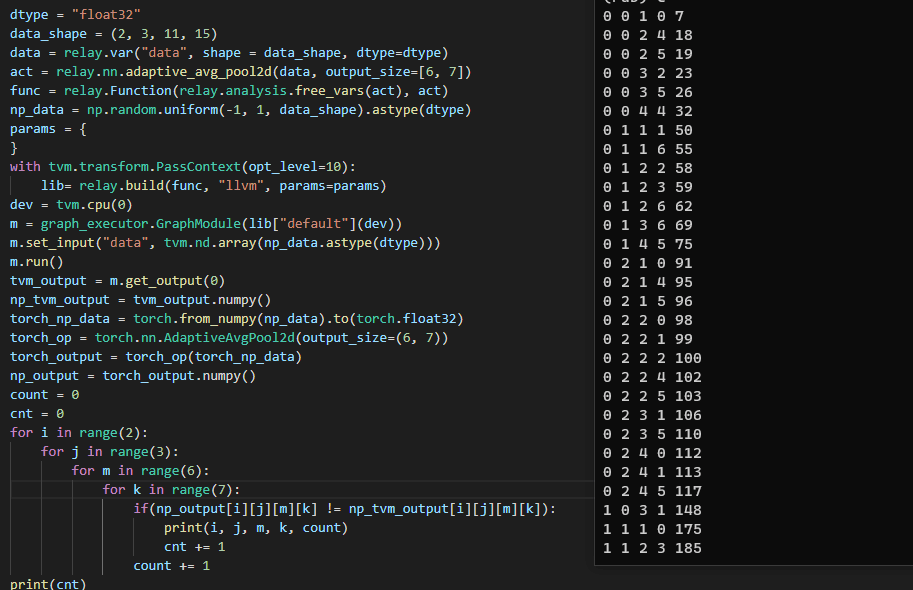
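To tell precision noise from a real mismatch, it helps to compare with a tolerance instead of exact equality. Here is a small stdlib-only sketch; `compare_outputs` and the default tolerances are my own assumptions for illustration, not values from TVM or PyTorch, and the sample lists are made-up data rather than the arrays from the screenshot.

```python
import math


def compare_outputs(a, b, rtol=1e-5, atol=1e-7):
    """Return (max_abs_diff, mismatched_indices) for two flat lists of floats."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    # Largest absolute difference over all positions.
    max_abs_diff = max((abs(x - y) for x, y in zip(a, b)), default=0.0)
    # Positions where the values differ by more than the tolerances allow.
    mismatched = [
        i
        for i, (x, y) in enumerate(zip(a, b))
        if not math.isclose(x, y, rel_tol=rtol, abs_tol=atol)
    ]
    return max_abs_diff, mismatched


# Made-up values: index 0 differs only by float rounding, index 2 really differs.
ref_out = [0.1 + 0.2, 1.0, 2.5]
other_out = [0.3, 1.0, 2.6]
max_diff, bad = compare_outputs(ref_out, other_out)
print(max_diff, bad)
```

Differences around 1e-6 or smaller for float32 usually point to precision; larger ones suggest the two operators compute something different.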

Do you have an example script?

Can you make it copy-pastable so I can check it out easily?