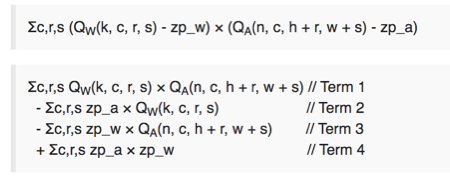

QNN ops are converted to a sequence of Relay operators before they go through the traditional Relay optimization passes. Currently, most of this QNN-to-Relay conversion is suited to devices that have fast Int8 support in HW. For example, a qnn conv2d is broken down using the four-term equation (see the picture above).
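For reference, the expansion can be written out as below. This is a sketch reconstructed from the term numbering used in this post. The symbols $Q_A$ and $Q_W$ (quantized data and weight), $z_a$ and $z_w$ (their zero points), and the index names are notation assumed here for illustration.

$$
\begin{aligned}
\sum_{c,r,s} \bigl(Q_W(k,c,r,s) - z_w\bigr)\bigl(Q_A(n,c,h+r,w+s) - z_a\bigr)
 &= \sum_{c,r,s} Q_W(k,c,r,s)\,Q_A(n,c,h+r,w+s) && \text{(Term 1)} \\
 &\quad - z_a \sum_{c,r,s} Q_W(k,c,r,s) && \text{(Term 2)} \\
 &\quad - z_w \sum_{c,r,s} Q_A(n,c,h+r,w+s) && \text{(Term 3)} \\
 &\quad + \sum_{c,r,s} z_a\, z_w && \text{(Term 4)}
\end{aligned}
$$

Under this numbering, Term 2 and Term 4 depend only on the weights and zero points, so they can be computed at compile time, while Term 1 and Term 3 depend on the input data.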

More details: "TF Lite quantized conv2d operator conversion", post #8 by janimesh (Questions, Apache TVM Discuss).

In the above case, Term 1 is a convolution over int8 tensors only. This benefits HW platforms that have fast Int8 arithmetic support, such as Intel VNNI, ARM dot product, or Nvidia DP4A. On those platforms, the overhead of Term 3 (Term 2 and Term 4 are constants) is potentially much smaller than the benefit of fast Int8 arithmetic.
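To make the roles of the four terms concrete, here is a minimal pure-Python sketch that checks the decomposition on a 1-D, single-channel convolution. The helper `conv1d`, the tensor sizes, and the zero-point values are illustrative assumptions, not TVM code.

```python
import random


def conv1d(data, weight):
    """Valid (no padding), stride-1 correlation using Python ints."""
    taps = len(weight)
    return [
        sum(data[i + j] * weight[j] for j in range(taps))
        for i in range(len(data) - taps + 1)
    ]


random.seed(0)
q_data = [random.randrange(256) for _ in range(16)]   # uint8-range activations
q_weight = [random.randrange(256) for _ in range(3)]  # uint8-range weights
zp_data, zp_weight = 128, 120                         # illustrative zero points

# Reference: subtract the zero points first, then convolve.
reference = conv1d(
    [q - zp_data for q in q_data],
    [q - zp_weight for q in q_weight],
)

# Four-term lowering.
term1 = conv1d(q_data, q_weight)              # conv on the raw quantized tensors
term2 = zp_data * sum(q_weight)               # constant: weights and zero point only
window_sums = conv1d(q_data, [1] * len(q_weight))
term3 = [zp_weight * s for s in window_sums]  # data-dependent window sums
term4 = zp_data * zp_weight * len(q_weight)   # constant

four_term = [t1 - term2 - t3 + term4 for t1, t3 in zip(term1, term3)]
assert four_term == reference
```

Only `term1` and `term3` touch the input data at run time, which is why the cost of Term 3 is the overhead that has to be weighed against the fast Int8 convolution in Term 1.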

However, the question is whether this lowering is good for HW platforms that do not have fast Int8 arithmetic support, such as older Intel machines or the Raspberry Pi 3. We have observed that a separate lowering sequence with fewer Relay operations gives better performance, with the catch that the conv2d inputs become int16.

The new lowering can be seen in the first equation of the above picture, where we first subtract the zero points from the data and weight (which raises the precision to int16) and then perform the convolution. The specific observation is on the Raspberry Pi 3, where int16 conv performs much better than int8 conv because LLVM packs the instructions better ([ARM][Topi] Improving Int8 Perf in Spatial Conv2D schedule. by anijain2305 · Pull Request #4277 · apache/tvm · GitHub). This also aligns well with the observations @FrozenGene and @jackwish made while working on the integer conv2d schedule for ARM devices.
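The reason the subtraction pushes the inputs to int16 can be checked with a few lines of arithmetic. The sketch below assumes uint8 tensors with uint8 zero points; the helper name `shifted_range` is hypothetical.

```python
INT8_MIN, INT8_MAX = -128, 127
INT16_MIN, INT16_MAX = -32768, 32767


def shifted_range(q_min, q_max, zp_min, zp_max):
    """Smallest and largest value of (q - zero_point)."""
    return q_min - zp_max, q_max - zp_min


# uint8 quantized values with a uint8 zero point
lo, hi = shifted_range(0, 255, 0, 255)

fits_int8 = INT8_MIN <= lo and hi <= INT8_MAX
fits_int16 = INT16_MIN <= lo and hi <= INT16_MAX

assert (lo, hi) == (-255, 255)
assert not fits_int8   # the shifted values overflow int8
assert fits_int16      # int16 is the next width that holds them
```

The same bound of [-255, 255] holds for int8 tensors with int8 zero points, so in both cases the shifted conv2d inputs need a 16-bit type.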

From a code standpoint, specializing this legalization is easy, as we already have a QNN Legalization infrastructure. We can look at the target flags to figure out whether the HW has fast Int8 support (cascadelake for Intel, dotprod for ARM, etc.). If it does not, we can legalize with the simpler lowering, and LLVM should give us the benefits automatically.
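The target-based decision could look roughly like the sketch below. It works on plain target strings and does not use the TVM API; the function names, the two lookup sets, and the lowering labels are all hypothetical, and the sets contain only the flags named in this post.

```python
# Assumption: only the CPU and attribute mentioned in this post are listed.
FAST_INT8_CPUS = {"cascadelake"}
FAST_INT8_ATTRS = {"+dotprod"}


def parse_flags(target):
    """Collect '-key=value' options from a target string into a dict."""
    flags = {}
    for token in target.split():
        if token.startswith("-") and "=" in token:
            key, value = token[1:].split("=", 1)
            flags[key] = value
    return flags


def has_fast_int8(target):
    """Guess from the target flags whether fast Int8 instructions exist."""
    flags = parse_flags(target)
    if flags.get("mcpu") in FAST_INT8_CPUS:
        return True
    attrs = set(flags.get("mattr", "").split(","))
    return bool(attrs & FAST_INT8_ATTRS)


def choose_lowering(target):
    """Pick the four-term int8 lowering or the simpler int16 lowering."""
    return "four_term_int8" if has_fast_int8(target) else "simple_int16"


print(choose_lowering("llvm -mcpu=cascadelake"))
print(choose_lowering("llvm -mtriple=armv7l-linux-gnueabihf -mattr=+neon"))
```

In a real implementation this check would live inside the per-target QNN legalization function, so the rest of the pipeline would not need to know which lowering was chosen.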

What is the downside? The memory footprint takes a hit because the weights are now upcast to int16.
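As a rough sense of scale, the sketch below computes the weight storage for one conv2d layer before and after the upcast. The layer shape is an illustrative assumption, and the estimate assumes the upcast weights are actually stored (for example, after constant folding).

```python
import math

weight_shape = (64, 64, 3, 3)  # hypothetical OIHW conv2d weight shape
num_elements = math.prod(weight_shape)

int8_bytes = num_elements * 1   # 1 byte per int8 weight
int16_bytes = num_elements * 2  # 2 bytes per int16 weight

print(f"int8 weights:  {int8_bytes} bytes")
print(f"int16 weights: {int16_bytes} bytes")
assert int16_bytes == 2 * int8_bytes
```

The weight storage doubles regardless of the layer shape, since each element goes from one byte to two.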

If we later decide that we can write a much better schedule for int8 conv, we can easily disable this legalization. So, this is not a one-way door.

Summary

This RFC discusses the need for different legalizations of QNN ops depending on whether the HW supports fast Int8 arithmetic operations. Code-wise, we can use the existing QNN Legalize infrastructure.