Hi Sirs,

TVM version: 0.9.dev0 (commit: b555bf5481d3eb261427850cea286c162aa3d2e3)

I am using tvmc to compile my YOLO model (TFLite) with CMSIS-NN, and I want to port it to an STM32H7.

Below is the command I used:

python3 -m tvm.driver.tvmc compile --target=cmsis-nn,c --target-cmsis-nn-mcpu=cortex-m7 --target-c-mcpu=cortex-m7 --executor=aot --executor-aot-interface-api=c --executor-aot-unpacked-api=1 --runtime=crt --pass-config tir.disable_vectorize=1 -f mlf yolo.tflite
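For anyone who wants to reproduce this while toggling options, the same invocation can be sketched as a Python argument list. This is only a convenience sketch: `build_tvmc_compile_args` is a hypothetical helper name, not part of TVM, and it uses only the flags quoted in the command above.

```python
import shlex


def build_tvmc_compile_args(model_path, mcpu="cortex-m7"):
    """Return the argv list for the tvmc compile call quoted in this post.

    Hypothetical helper: only the flags from the original command are used.
    """
    return [
        "python3", "-m", "tvm.driver.tvmc", "compile",
        "--target=cmsis-nn,c",
        f"--target-cmsis-nn-mcpu={mcpu}",
        f"--target-c-mcpu={mcpu}",
        "--executor=aot",
        "--executor-aot-interface-api=c",
        "--executor-aot-unpacked-api=1",
        "--runtime=crt",
        "--pass-config", "tir.disable_vectorize=1",
        "-f", "mlf",
        model_path,
    ]


args = build_tvmc_compile_args("yolo.tflite")
# Print the command as a single shell-safe string for copy/paste.
print(shlex.join(args))

# To actually run it (needs a working TVM install on the Python path):
# import subprocess
# subprocess.run(args, check=True)
```

Keeping the arguments in a list makes it easy to swap the model path (for example, to the MobileNet model) without retyping the whole command.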

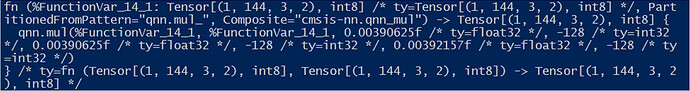

But I got this error message:

#################################################################################
/home/markii/Project/tvm/python/tvm/driver/build_module.py:263: UserWarning: target_host parameter is going to be deprecated. Please pass in tvm.target.Target(target, host=target_host) instead.
  warnings.warn(
the function is provided too many arguments expected 1, found 2
Traceback (most recent call last):
  File "/home/markii/anaconda3/envs/tvm/lib/python3.8/runpy.py", line 194, in _run_module_as_main
    return _run_code(code, main_globals, None,
  File "/home/markii/anaconda3/envs/tvm/lib/python3.8/runpy.py", line 87, in _run_code
    exec(code, run_globals)
  File "/home/markii/Project/tvm/python/tvm/driver/tvmc/main.py", line 24, in tvmc.main.main()
  File "/home/markii/Project/tvm/python/tvm/driver/tvmc/main.py", line 115, in main
    sys.exit(_main(sys.argv[1:]))
  File "/home/markii/Project/tvm/python/tvm/driver/tvmc/main.py", line 103, in _main
    return args.func(args)
  File "/home/markii/Project/tvm/python/tvm/driver/tvmc/compiler.py", line 173, in drive_compile
    compile_model(
  File "/home/markii/Project/tvm/python/tvm/driver/tvmc/compiler.py", line 291, in compile_model
    mod = partition_function(mod, params, mod_name=mod_name, codegen_from_cli["opts"])
  File "/home/markii/Project/tvm/python/tvm/relay/op/contrib/cmsisnn.py", line 73, in partition_for_cmsisnn
    return seq(mod)
  File "/home/markii/Project/tvm/python/tvm/ir/transform.py", line 161, in call
    return _ffi_transform_api.RunPass(self, mod)
  File "/home/markii/Project/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 242, in call
    raise get_last_ffi_error()
tvm.error.DiagnosticError: Traceback (most recent call last):
  18: TVMFuncCall
  17: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::TypedPackedFunc<tvm::IRModule (tvm::transform::Pass, tvm::IRModule)>::AssignTypedLambda<tvm::transform::{lambda(tvm::transform::Pass, tvm::IRModule)#7}>(tvm::transform::{lambda(tvm::transform::Pass, tvm::IRModule)#7}, std::__cxx11::basic_string<char, std::char_traits, std::allocator >)::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue)#1}> >::Call(tvm::runtime::PackedFuncObj const, tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)
  16: tvm::transform::Pass::operator()(tvm::IRModule) const
  15: tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const
  14: tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const
  13: tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const
  12: tvm::transform::ModulePassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const
  11: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::TypedPackedFunc<tvm::IRModule (tvm::IRModule, tvm::transform::PassContext)>::AssignTypedLambda<tvm::relay::transform::InferType()::{lambda(tvm::IRModule, tvm::transform::PassContext const&)#1}>(tvm::relay::transform::InferType()::{lambda(tvm::IRModule, tvm::transform::PassContext const&)#1})::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*)#1}> >::Call(tvm::runtime::PackedFuncObj const*, tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)
  10: tvm::relay::TypeInferencer::Infer(tvm::GlobalVar, tvm::relay::Function)
  9: tvm::relay::TypeInferencer::GetType(tvm::RelayExpr const&)
  8: void tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}, tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2})::{lambda(tvm::RelayExpr const&)#1}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}, tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2})::{lambda(tvm::RelayExpr const&)#1})
  7: ZZN3tvm5relay11ExprFunctorIFNS_4TypeERKNS_9RelayExprEEE10InitVTableEvENUlR
  6: tvm::relay::TypeInferencer::VisitExpr(tvm::relay::FunctionNode const*)
  5: tvm::relay::TypeInferencer::GetType(tvm::RelayExpr const&)
  4: void tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}, tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2})::{lambda(tvm::RelayExpr const&)#1}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}, tvm::relay::ExpandDataflow<tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2}>(tvm::RelayExpr, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#1}, tvm::relay::TypeInferencer::VisitExpr(tvm::RelayExpr const&)::{lambda(tvm::RelayExpr const&)#2})::{lambda(tvm::RelayExpr const&)#1})
  3: ZZN3tvm5relay11ExprFunctorIFNS_4TypeERKNS_9RelayExprEEE10InitVTableEvENUlR
  2: tvm::relay::TypeInferencer::VisitExpr(tvm::relay::CallNode const*)
  1: tvm::relay::TypeInferencer::GeneralCall(tvm::relay::CallNode const*, tvm::runtime::Array<tvm::Type, void>)
  0: tvm::DiagnosticContext::Render()
  File "/home/markii/Project/tvm/src/ir/diagnostic.cc", line 105
DiagnosticError: one or more error diagnostics were emitted, please check diagnostic render for output.
################################################################################

I ran two tests:

-

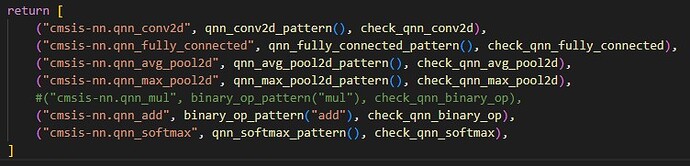

if i mark “cmsis-nn.qnn_mul” in cmsis-nn pattern_table(), the compilation would be ok.

- If I change the YOLO model to MobileNet (https://storage.googleapis.com/download.tensorflow.org/models/mobilenet_v1_2018_08_02/mobilenet_v1_1.0_224_quant.tgz), the compilation also works.
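To make the first test concrete, here is a rough sketch of what disabling one pattern amounts to. It assumes the pattern table is a list of tuples whose first element is the pattern name. The table below is stand-in data with `None` placeholders, not the real CMSIS-NN table, and `drop_patterns` is a hypothetical helper, not a TVM function; only "cmsis-nn.qnn_mul" comes from my test, the other names are illustrative.

```python
def drop_patterns(table, names_to_drop):
    """Return a copy of the pattern table without the named patterns.

    Assumes each entry is a tuple whose first element is the pattern name.
    """
    return [entry for entry in table if entry[0] not in names_to_drop]


# Stand-in table: the None values are placeholders where a real table would
# hold the pattern object and its check function.
stand_in_table = [
    ("cmsis-nn.qnn_conv2d", None, None),
    ("cmsis-nn.qnn_mul", None, None),
    ("cmsis-nn.qnn_add", None, None),
]

filtered = drop_patterns(stand_in_table, {"cmsis-nn.qnn_mul"})
print([name for name, _, _ in filtered])
```

With the multiply pattern removed, those operators are presumably no longer offloaded to CMSIS-NN, which would explain why the partitioning step stops failing.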

Could you help me with this issue?

Thanks!