This separate post summarizes an important topic that we think is worth distilling.

Background Context

As we start to build up modular and composable pipelines, it is common to configure the compilation pipeline through different means. Some configurations relate to the final runtime environment, while others are specific to “how” a pass transforms the key data structure of interest.

The goal of this post is to clarify the design principles for organizing these configurations. This post does not propose any specific configuration structure design. We expect that there is still a lot of flexibility in the specific architectural designs that follow the principle.

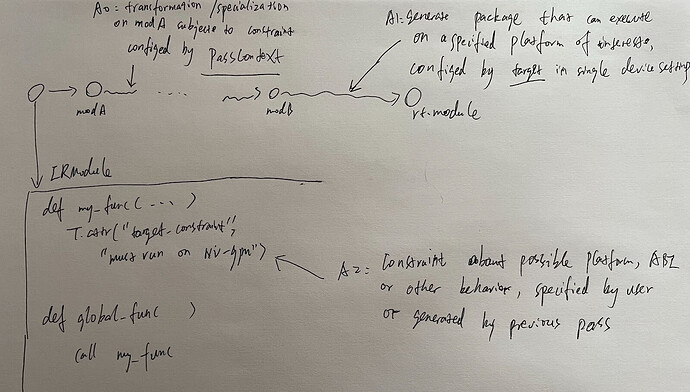

To begin with, a generic flow in TVM can be roughly summarized as shown in the picture above:

- We start with an IRModule (modA), possibly already transformed by one or more previous passes (e.g. automatic mixed precision conversion).

- We run a set of transformation passes on modA to get modB

- We then generate an rt.Module from modB in order to run it on a specific target platform (e.g. a specific hardware environment with a particular runtime).
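As a rough illustration, the flow above can be modeled in plain Python. This is a toy sketch, not TVM's API: `ToyIRModule`, `mixed_precision`, `simplify`, `run_passes`, and `build` are hypothetical names, passes are modeled as plain IRModule→IRModule functions, and the "runtime module" is just a dict of strings.

```python
from dataclasses import dataclass, field, replace
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ToyIRModule:
    """Hypothetical stand-in for an IRModule (not TVM's real class)."""
    functions: Dict[str, str]                       # function name -> toy body
    attrs: Dict[str, object] = field(default_factory=dict)


# In this sketch a pass is simply an IRModule -> IRModule function.
Pass = Callable[[ToyIRModule], ToyIRModule]


def mixed_precision(mod: ToyIRModule) -> ToyIRModule:
    # Hypothetical earlier pass: mark every function as converted to fp16.
    return replace(mod, functions={n: f"fp16({b})" for n, b in mod.functions.items()})


def simplify(mod: ToyIRModule) -> ToyIRModule:
    # Hypothetical pass: strip spaces from every function body.
    return replace(mod, functions={n: b.replace(" ", "") for n, b in mod.functions.items()})


def run_passes(mod: ToyIRModule, passes: List[Pass]) -> ToyIRModule:
    # modA -> modB: the module is the only thing handed from pass to pass.
    for p in passes:
        mod = p(mod)
    return mod


def build(mod: ToyIRModule, platform: str) -> Dict[str, str]:
    # Hypothetical codegen: the "runtime module" is a dict of strings.
    return {n: f"{platform}:{b}" for n, b in mod.functions.items()}


mod_a = mixed_precision(ToyIRModule({"main": "a + b"}))
mod_b = run_passes(mod_a, [simplify])
rt_mod = build(mod_b, "llvm")
print(rt_mod)  # {'main': 'llvm:fp16(a+b)'}
```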

There are roughly three kinds of “config-like” options that appear in this flow and can affect the final outcome:

- A0: The specific options used in a transformation (e.g. how aggressively we want to inline).

- A1: The build “constraints” of the platform of interest. These can be the instruction set (x86 or ARM) or runtime constraints (crt, packed-api vs unpacked-api).

- A2: Within the IRModule itself, there can be additional constraints on existing functions. Imagine that a previous pass or the user decided to optimize my_func on an NVIDIA GPU and has already generated a call to my_func via the CUDA runtime API. Then follow-up optimizations will need to respect that “constraint”.

To some extent, the As are related to each other. For example, if the final platform does not have a vector unit, we will need to disable vectorization. Nevertheless, there are still two distinct types of configuration here:

- C0: In the case of A0, we are mainly interested in “how”, i.e. procedurally what we do with the program. In many cases, regardless of the transformation choices (e.g. how much we inline), the final outcome can still run on the platform of interest.

- C1: In the case of A1 and A2, we are declaring “constraints” imposed by the final platforms of interest (e.g. must have a vector unit, must use the unpacked ABI). This constraint information does not dictate “how” we run the optimization, but it can provide additional information for certain specializations.

Design Principle

The main design principle is that we want to separate C0-type and C1-type configs at the modular level of passes and IRModule transformations. Specifically:

- D0: All transformations are centralized as IRModule→IRModule transformations, with the IRModule as the only medium for exchanging information between optimization passes.

- D1: C1-type configs represent “constraints” that follow-up optimizations must respect. As a result, they need to be attached as fields/attrs of the IRModule; otherwise, this information gets lost.

- D2: C0-type configs that affect the passes should be stored separately (not as part of the IRModule). Note that optimization passes will still need to look at the C1-type config, and may sometimes choose to ignore a C0-type option or emit a proper warning (e.g. when C1 indicates there is no vector unit but C0 enforces vectorization).
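A minimal sketch of D0 through D2, assuming a toy module type rather than TVM's real `IRModule`: C1 constraints live in the module's `attrs` (here a hypothetical `has_vector_unit` flag for an A1-style constraint and a hypothetical `pinned_functions` set for an A2-style one), while the C0 `VectorizeConfig` is passed to the pass as a separate argument. The pass lets C1 win over C0 and warns when they conflict, mirroring the vector-unit example in D2.

```python
import warnings
from dataclasses import dataclass, field, replace
from typing import Dict


@dataclass(frozen=True)
class ToyIRModule:
    """Hypothetical IRModule stand-in; `attrs` carries C1 constraints (D1)."""
    functions: Dict[str, str]
    attrs: Dict[str, object] = field(default_factory=dict)


@dataclass(frozen=True)
class VectorizeConfig:
    """Hypothetical C0 config for one pass; never stored on the module (D2)."""
    enabled: bool = True
    lanes: int = 4


def vectorize(mod: ToyIRModule, cfg: VectorizeConfig) -> ToyIRModule:
    """D0: IRModule in, IRModule out. C0 arrives as a separate argument."""
    if not cfg.enabled:
        return mod
    # D2: the pass still consults the C1 constraint carried by the module
    # (assumed to default to True when absent) and may override C0.
    if not mod.attrs.get("has_vector_unit", True):
        warnings.warn("vectorization requested, but the platform has no "
                      "vector unit; skipping")
        return mod
    # A2-style constraint: functions already committed to an external call
    # (e.g. a CUDA runtime API call) are left untouched.
    pinned = mod.attrs.get("pinned_functions", frozenset())
    new_funcs = {
        name: body if name in pinned else f"vec{cfg.lanes}({body})"
        for name, body in mod.functions.items()
    }
    # C1 attrs are carried forward unchanged so later passes still see them;
    # `cfg` itself is not written anywhere on the module.
    return replace(mod, functions=new_funcs)


mod_simd = ToyIRModule({"main": "loop", "my_func": "call_cuda"},
                       attrs={"has_vector_unit": True,
                              "pinned_functions": frozenset({"my_func"})})
out = vectorize(mod_simd, VectorizeConfig(lanes=8))
print(out.functions)  # {'main': 'vec8(loop)', 'my_func': 'call_cuda'}

mod_mcu = ToyIRModule({"main": "loop"}, attrs={"has_vector_unit": False})
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    skipped = vectorize(mod_mcu, VectorizeConfig())
print(skipped is mod_mcu, len(caught))  # True 1
```

The returned module carries the same constraints forward, but nothing on it records which lane count was requested; that information stays with whoever invoked the pass.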

Note that the design principle applies at the abstraction level of passes and IRModule transformations. It does not preclude higher-level abstraction layers and applications from creating separate configurations that map to this level (ideally, these high-level configurations compose the abstractions of this level without leaking through).
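To illustrate this point, here is a sketch of a higher-level configuration that expands into pass-level C0 configs without leaking into the module. The `opt_level`-style knob, the `InlineConfig`/`UnrollConfig` classes, the pass functions, and the tuple used as a stand-in module are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Toy "IRModule": a tuple logging what was applied (hypothetical stand-in).
ToyModule = Tuple[str, ...]


@dataclass(frozen=True)
class InlineConfig:
    """Hypothetical pass-level C0 config."""
    max_size: int


@dataclass(frozen=True)
class UnrollConfig:
    """Hypothetical pass-level C0 config."""
    factor: int


def inline_pass(mod: ToyModule, cfg: InlineConfig) -> ToyModule:
    return mod + (f"inline(max_size={cfg.max_size})",)


def unroll_pass(mod: ToyModule, cfg: UnrollConfig) -> ToyModule:
    return mod + (f"unroll(factor={cfg.factor})",)


PASSES: Dict[str, Callable] = {"inline": inline_pass, "unroll": unroll_pass}


def expand_opt_level(opt_level: int) -> List[Tuple[str, object]]:
    """Hypothetical high-level knob, expanded into per-pass C0 configs.

    Only this function knows about `opt_level`; each pass receives its own
    small config, and the module never sees the high-level setting."""
    if opt_level <= 1:
        return [("inline", InlineConfig(max_size=8))]
    return [("inline", InlineConfig(max_size=64)),
            ("unroll", UnrollConfig(factor=4 if opt_level == 2 else 8))]


def run(mod: ToyModule, plan: List[Tuple[str, object]]) -> ToyModule:
    for name, cfg in plan:
        mod = PASSES[name](mod, cfg)
    return mod


print(run(("main",), expand_opt_level(3)))
# ('main', 'inline(max_size=64)', 'unroll(factor=8)')
```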

Rationale and alternatives

Let us call the proposed design principles T0. The main rationale for T0 is to keep the system modular and compositional.

There are a few alternatives to the design.

- T1: store C0 and C1 information together as part of the IRModule.

- T2: store none of the information as part of the IRModule.

On one hand, we need to store all the necessary information in the key data structure of interest (the IRModule) so that any transformation can respect the C1-type constraints. This rules out T2, which would lose those constraints between passes.

On the other hand, we also want to give pass writers and optimizers flexibility. This means keeping only the necessary, minimal set of constraints on the IRModule. Putting additional C0 information into the IRModule (as T1 does) causes the following problems:

- C0-type configurations are essentially stale once the transformation has executed. Putting information in the IRModule implies that pass developers need to be aware of it, which adds design burden and takes up their attention unnecessarily.

- We will need to support cases where the same transformation is run multiple times with different C0 configuration values (e.g. trying out different unroll factors and comparing the results). It is neither possible nor necessary to consistently store these different configurations on the IRModule.

- There will also be more complicated pipelines, inspired by the TVM Unity evolution, such as optimizing in a loop or exploring alternative paths, where more flexible, pipeline-specific C0-type configuration is needed.
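The case of running the same transformation with different C0 values and comparing the results can be sketched as a small driver loop, assuming a toy module type and a made-up cost model. `unroll` and `estimated_cost` are hypothetical; the point is that the candidate factors live in the driver, and the module that survives carries only its C1 attrs.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class ToyIRModule:
    """Hypothetical IRModule stand-in; `attrs` holds only C1 constraints."""
    body: str
    attrs: Tuple[Tuple[str, object], ...] = ()


def unroll(mod: ToyIRModule, factor: int) -> ToyIRModule:
    # Hypothetical pass: `factor` is C0 config passed in, never read from
    # or written to the module.
    return replace(mod, body=f"unroll{factor}({mod.body})")


def estimated_cost(mod: ToyIRModule) -> float:
    # Hypothetical cost model that assumes exactly one unroll was applied
    # and pretends a factor of 4 is the sweet spot.
    factor = int(mod.body[len("unroll"):mod.body.index("(")])
    return abs(factor - 4) + 1.0


base = ToyIRModule("loop", attrs=(("has_vector_unit", True),))

# Run the same transformation several times with different C0 values.
candidates: Dict[int, ToyIRModule] = {f: unroll(base, f) for f in (1, 2, 4, 8)}
best_factor = min(candidates, key=lambda f: estimated_cost(candidates[f]))
best = candidates[best_factor]

print(best_factor, best.body)  # 4 unroll4(loop)
# The surviving module has the same C1 attrs as the input; which factors
# were tried (and rejected) is known only to this driver code.
print(best.attrs == base.attrs)  # True
```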

Precedent Case Studies

There are clear precedent designs that can serve as a reference point for the proposed design principles. In particular, deep learning frameworks have enjoyed great success thanks to following a principled and modular design.

Here we relate deep learning framework design to the proposed design principles (we use the same D labels, as there is a one-to-one correspondence between the design choices):

- D0: Tensor is the key data structure of interest; all transformations are centralized as Tensor→Tensor transformations. Tensor is the only exchange medium between layers.

- D1: C1-type constraints are stored as part of Tensor; notable items include device, shape, and dtype. Layers and array computations must respect these constraints (e.g. a CUDNNLayer only applies to CUDA tensors).

- D2: C0-type configs, i.e. the specific configurations of layers (number of hidden units, parallel factor), are constructed and composed separately. These configs are not stored as part of Tensor.
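The same correspondence can be shown with toy Python classes. These are hypothetical and deliberately framework-agnostic, not PyTorch or TensorFlow APIs: `ToyTensor` carries the C1-style fields, each layer owns its own C0 config, `CudaOnly` stands in for a CUDA-only layer such as the CUDNNLayer in D1, and `Residual` composes any layer using only the tensor as the exchange medium.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class ToyTensor:
    """Toy tensor: data plus C1-style fields (device, dtype; shape derived)."""
    data: Tuple[float, ...]
    device: str = "cpu"
    dtype: str = "float32"

    @property
    def shape(self) -> Tuple[int, ...]:
        return (len(self.data),)


class Scale:
    """Toy layer; `factor` is C0 config owned by the layer, not the tensor."""

    def __init__(self, factor: float):
        self.factor = factor

    def __call__(self, x: ToyTensor) -> ToyTensor:
        # The output keeps the input's C1 fields (device, dtype).
        return ToyTensor(tuple(v * self.factor for v in x.data), x.device, x.dtype)


class CudaOnly:
    """Toy stand-in for a CUDA-only layer: it must respect the device field."""

    def __call__(self, x: ToyTensor) -> ToyTensor:
        if x.device != "cuda":
            raise ValueError(f"expected a cuda tensor, got {x.device}")
        return x


class Residual:
    """Wraps any Tensor->Tensor layer without knowing its C0 config."""

    def __init__(self, inner: Callable[[ToyTensor], ToyTensor]):
        self.inner = inner

    def __call__(self, x: ToyTensor) -> ToyTensor:
        y = self.inner(x)
        return ToyTensor(tuple(a + b for a, b in zip(x.data, y.data)), x.device, x.dtype)


block = Residual(Scale(0.5))
out = block(ToyTensor((2.0, 4.0)))
print(out.data, out.device, out.shape)  # (3.0, 6.0) cpu (2,)
```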

In deep learning frameworks, Tensor is the minimal, necessary data structure that exchanges information between layers. Keeping C0 configs out of Tensor has greatly helped deep learning frameworks gain modularity and compositionality.

For example, one can construct a residual block without worrying about the C0-type configuration of other layers. The resulting residual block can be composed with a softmax function or BCELoss, and with an SGD or Adam optimizer, using only Tensor as the exchange medium. Such minimal information exchange and compositionality is the secret sauce behind the success of deep learning frameworks.

Notably, T1/T2-style designs have appeared before in deep learning framework land. A first-generation deep learning framework (Caffe) relied on a global prototxt configuration for all choices, including both C0 and C1 options. This design, however, was replaced by later generations (PyTorch and TensorFlow) that bring a clear separation of C0 and C1. Such separation was one of the main reasons why later-generation frameworks replaced the first generation.

The evolution of deep learning framework design offers a useful lesson that motivates this post.