@comaniac Thanks for your reply!

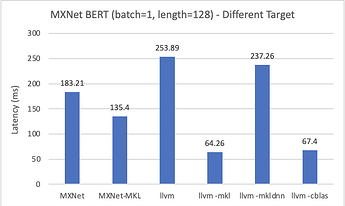

Experiment1 - Different Target

Sorry, I left some details out of the graph. I used `llvm -mcpu=skylake-avx512` as the target for all the TVM experiments. I also followed the blog post you provided; the results are shown below. I suspect MXNet is simply not as fast as PyTorch to begin with, which would explain why TVM easily gives a large improvement (2x-3x) over it.

Experiment2 - Different Tune

I tuned my model following this repo, but my model is in PyTorch rather than MXNet. I also found a repo that had already run experiments on PyTorch BERT, and it showed only a small improvement (5%-10%). So I think either PyTorch BERT can only get a small speedup, or I am missing something needed to accelerate it.

Experiment3 - Different Length

However, I find that we only get a small improvement when the sequence length is not too long.
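
For anyone who wants to reproduce the per-length comparison, here is a minimal sketch of how the speedup at each sequence length could be timed. It is not the script I used for the graph above. `fake_baseline` and `fake_candidate` are hypothetical stand-ins for the PyTorch and TVM inference calls, and the warmup and repeat counts are arbitrary assumptions.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def median_latency_ms(fn: Callable[[int], object], seq_len: int,
                      warmup: int = 3, repeats: int = 20) -> float:
    """Return the median wall-clock latency of fn(seq_len) in milliseconds."""
    # Warm-up runs are discarded so one-time costs do not skew the result.
    for _ in range(warmup):
        fn(seq_len)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(seq_len)
        samples.append((time.perf_counter() - start) * 1e3)
    return statistics.median(samples)


def speedup_by_length(baseline: Callable[[int], object],
                      candidate: Callable[[int], object],
                      seq_lens: Iterable[int],
                      warmup: int = 3, repeats: int = 20) -> Dict[int, float]:
    """Map each sequence length to baseline latency / candidate latency."""
    result = {}
    for n in seq_lens:
        base_ms = median_latency_ms(baseline, n, warmup, repeats)
        cand_ms = median_latency_ms(candidate, n, warmup, repeats)
        result[n] = base_ms / cand_ms
    return result


# Hypothetical stand-ins: replace with the real PyTorch and TVM calls.
def fake_baseline(seq_len: int) -> int:
    return sum(i * i for i in range(seq_len * 200))


def fake_candidate(seq_len: int) -> int:
    return sum(i * i for i in range(seq_len * 100))


if __name__ == "__main__":
    speedups = speedup_by_length(fake_baseline, fake_candidate, [64, 128, 256])
    for n, s in speedups.items():
        print(f"seq_len={n}: {s:.2f}x")
```

Using the median rather than the mean keeps a single slow run from distorting the ratio, which matters when the expected gain is only 5%-10%.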