Hi everyone,

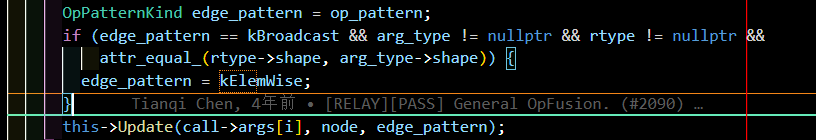

When I use the bitpack op in a model, it sometimes gets fused with the cast op above it, giving a single fused group like (other_ops + cast + bitpack). Other times it is not added to that group, giving two separate groups: (other_ops + cast) and (bitpack).

I don't understand how TVM's operator fusion decides between these two cases. Any help would be appreciated.