I am trying to compile the model with Relay, but `relay.frontend.from_onnx` fails with the error below.

onnx_model = onnx.load('model.onnx')

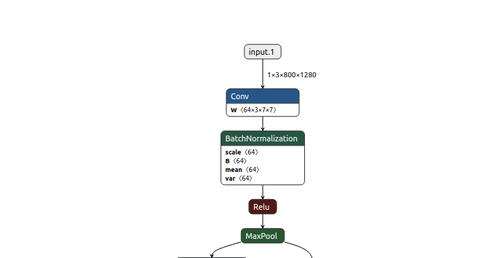

mod, params = relay.frontend.from_onnx(onnx_model, {"input.1": [1,3,800,1280]})

``

```
mod, params = relay.frontend.from_onnx(onnx_model, {"input.1": [1, 3, 800, 1280]})
WARNING:root:Attribute momentum is ignored in relay.sym.batch_norm
[... the same warning repeated many more times ...]
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2470, in from_onnx
    mod, params = g.from_onnx(graph, opset, freeze_params)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2278, in from_onnx
    op = self._convert_operator(op_name, inputs, attr, opset)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2385, in _convert_operator
    sym = convert_map[op_name](inputs, attrs, self._params)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 1858, in _impl_v11
    scale_shape = infer_shape(scale)
  File "/home/aniket/tvm/python/tvm/relay/frontend/common.py", line 500, in infer_shape
    out_type = infer_type(inputs, mod=mod)
  File "/home/aniket/tvm/python/tvm/relay/frontend/common.py", line 478, in infer_type
    new_mod = IRModule.from_expr(node)
  File "/home/aniket/tvm/python/tvm/ir/module.py", line 237, in from_expr
    return _ffi_api.Module_FromExpr(expr, funcs, defs)
  File "/home/aniket/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__
    raise get_last_ffi_error()
tvm._ffi.base.TVMError: Traceback (most recent call last):
  [bt] (6) /home/aniket/tvm/build/libtvm.so(TVMFuncCall+0x63) [0x7fd858c69413]
  [bt] (5) /home/aniket/tvm/build/libtvm.so(+0x77f348) [0x7fd8581e5348]
  [bt] (4) /home/aniket/tvm/build/libtvm.so(tvm::IRModule::FromExpr(tvm::RelayExpr const&, tvm::Map<tvm::GlobalVar, tvm::BaseFunc, void, void> const&, tvm::Map<tvm::GlobalTypeVar, tvm::TypeData, void, void> const&)+0x31f) [0x7fd8581dffff]
  [bt] (3) /home/aniket/tvm/build/libtvm.so(tvm::relay::FreeTypeVars(tvm::RelayExpr const&, tvm::IRModule const&)+0x175) [0x7fd8589897d5]
  [bt] (2) /home/aniket/tvm/build/libtvm.so(tvm::relay::ExprVisitor::VisitExpr(tvm::RelayExpr const&)+0x8b) [0x7fd858bdf69b]
  [bt] (1) /home/aniket/tvm/build/libtvm.so(tvm::relay::ExprFunctor<void (tvm::RelayExpr const&)>::VisitExpr(tvm::RelayExpr const&)+0x164) [0x7fd858b8f804]
  [bt] (0) /home/aniket/tvm/build/libtvm.so(+0x1112df8) [0x7fd858b78df8]
  File "/home/aniket/tvm/include/tvm/relay/expr_functor.h", line 90
TVMError: Check failed: n.defined():
```