I am trying to compile a simple ONNX model using the Relax framework, but compilation fails with the internal error shown below. Can someone please guide me on how to resolve it?

Compilation Steps:

    import onnx
    import tvm
    from tvm import relax
    from tvm.relax.frontend.onnx import from_onnx
    import numpy as np
    from typing import List

    # Load ONNX model
    onnx_model_path = "conv.onnx"
    onnx_model = onnx.load(onnx_model_path)
    relax_mod_onnx = from_onnx(onnx_model, keep_params_in_input=False)
    relax_mod, params = relax.frontend.detach_params(relax_mod_onnx)

    # Input Creation
    input_shape = (1, 1, 32, 32)
    shape_dict = {"permute_input:0": input_shape}
    inputs = {"permute_input:0": (input_shape, "float32")}
    input_data = {}
    for _name, (shape, _dtype) in inputs.items():
        input_data[_name] = np.random.uniform(-1.0, 1.0, shape).astype(_dtype)
    inputs_tvm: List[tvm.nd.NDArray] = [tvm.nd.array(v) for k, v in input_data.items()]

    tvm_target = "llvm"
    with tvm.transform.PassContext(opt_level=1):
        relax_mod_onnx.show()
        llvm_compiled_lib = tvm.compile(relax_mod_onnx, tvm_target)

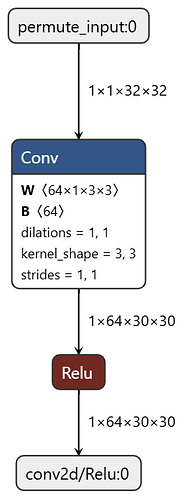

The printed module looks simple:

    @I.ir_module
    class Module:
        @R.function
        def main(permute_input_0: R.Tensor((1, 1, 32, 32), dtype="float32")) -> R.Tensor((1, 64, 30, 30), dtype="float32"):
            R.func_attr({"num_input": 1})
            with R.dataflow():
                lv: R.Tensor((1, 64, 30, 30), dtype="float32") = R.nn.conv2d(permute_input_0, metadata["relax.expr.Constant"][0], strides=[1, 1], padding=[0, 0, 0, 0], dilation=[1, 1], groups=1, data_layout="NCHW", kernel_layout="OIHW", out_layout="NCHW", out_dtype="void")
                lv1: R.Tensor((1, 64, 1, 1), dtype="float32") = R.reshape(metadata["relax.expr.Constant"][1], R.shape([1, 64, 1, 1]))
                lv2: R.Tensor((1, 64, 30, 30), dtype="float32") = R.add(lv, lv1)
                gv: R.Tensor((1, 64, 30, 30), dtype="float32") = R.nn.relu(lv2)
                R.output(gv)
            return gv
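For reference, the computation in this module (conv2d, broadcast bias add, ReLU) can be sketched in plain NumPy, which also gives something to compare against once compilation succeeds. This is only an illustrative sketch: the helper name `conv2d_bias_relu` and the random weight and bias values are hypothetical stand-ins for the two constants in the module's metadata.

```python
import numpy as np


def conv2d_bias_relu(x, weight, bias):
    """NCHW input, OIHW kernel, stride 1, no padding, then bias add and ReLU."""
    n, c_in, h, w = x.shape
    c_out, c_in_w, kh, kw = weight.shape
    assert c_in == c_in_w, "input channels must match kernel channels"
    out_h, out_w = h - kh + 1, w - kw + 1  # 32 - 3 + 1 = 30
    out = np.zeros((n, c_out, out_h, out_w), dtype=x.dtype)
    # Accumulate one kernel tap at a time over the (ry, rx) reduction axes;
    # the einsum handles the reduction over input channels (rc).
    for ky in range(kh):
        for kx in range(kw):
            patch = x[:, :, ky:ky + out_h, kx:kx + out_w]
            out += np.einsum("nchw,oc->nohw", patch, weight[:, :, ky, kx])
    out += bias.reshape(1, c_out, 1, 1)  # same reshape as lv1 in the module
    return np.maximum(out, 0.0)


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, (1, 1, 32, 32)).astype("float32")
weight = rng.uniform(-1.0, 1.0, (64, 1, 3, 3)).astype("float32")  # stand-in constant
bias = rng.uniform(-1.0, 1.0, (64,)).astype("float32")  # stand-in constant
expected = conv2d_bias_relu(x, weight, bias)
print(expected.shape)  # (1, 64, 30, 30)
```

The reduction axes in the loop (`rc` of extent 1, `ry` and `rx` of extent 3) are the same ones named in the error message.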

I get the following error message:

    src/te/operation/compute_op.cc", line 72, in AssertReduceEqual
    ICHECK(eq(a->axis, b->axis)) << shared_text << "However, the reduction axis " << a->axis
    tvm.error.InternalError: Check failed: (eq(a->axis, b->axis)) is false: When a TE compute node produces multiple outputs, each of which is a reduction, each reduction must be structurally identical, except for the ReduceNode::value_index. However, the reduction axis [T.iter_var(rc, T.Range(0, 1), "CommReduce", ""), T.iter_var(ry, T.Range(0, 3), "CommReduce", ""), T.iter_var(rx, T.Range(0, 3), "CommReduce", "")] does not match [T.iter_var(rc, T.Range(0, 1), "CommReduce", ""), T.iter_var(ry, T.Range(0, 3), "CommReduce", ""), T.iter_var(rx, T.Range(0, 3), "CommReduce", "")]

The same error message can be seen when running:

    python -m pytest tests/python/relax/test_frontend_onnx.py

Setup Related Info:

    22.04.1-Ubuntu (x86_64)

    commit 8ad523811cc30401955089a4ec0fd73bbc18ab29 (origin/main)
    Author: Ruihang Lai <ruihangl@cs.cmu.edu>
    Date: Thu Aug 21 17:02:15 2025 -0400

        [Thrust] Fix getting CUDA stream (#18220)

        This PR updates the `GetCUDAStream` in CUDA thrust integration
        to the latest `TVMFFIEnvGetCurrentStream` interface.