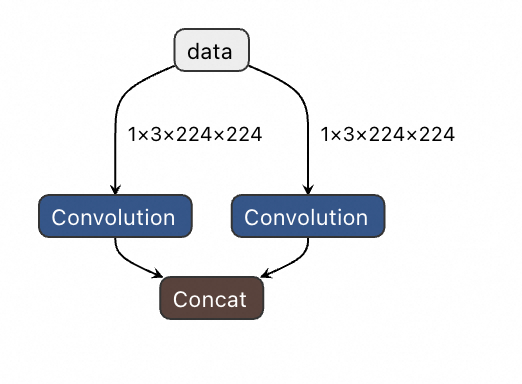

I have a original model like this:

After importing it into Relay, I get the following Relay IR:

    fn (%data: Tensor[(1, 3, 224, 224), float32] /* span=conv1:0:0 */, %v_param_1: Tensor[(3, 3, 3, 3), float32] /* span=conv1:0:0 */, %v_param_2: Tensor[(3), float32] /* span=conv1:0:0 */, %data1: Tensor[(1, 3, 224, 224), float32] /* span=conv2:0:0 */, %v_param_3: Tensor[(3, 3, 5, 5), float32] /* span=conv2:0:0 */, %v_param_4: Tensor[(3), float32] /* span=conv2:0:0 */) {
      %0 = nn.conv2d(%data, %v_param_1, padding=[1, 1, 1, 1], channels=3, kernel_size=[3, 3]) /* span=conv1:0:0 */;
      %1 = nn.conv2d(%data1, %v_param_3, padding=[2, 2, 2, 2], channels=3, kernel_size=[5, 5]) /* span=conv2:0:0 */;
      %2 = nn.bias_add(%0, %v_param_2) /* span=conv1:0:0 */;
      %3 = nn.bias_add(%1, %v_param_4) /* span=conv2:0:0 */;
      %4 = (%2, %3) /* span=concat:0:0 */;
      concatenate(%4, axis=1) /* span=output@@concat:0:0 */
    }

As the IR above shows, the function has two different inputs, `%data` and `%data1`, but in the original model they are actually the same input.

To track this down, I debugged the conversion: just before converting to an IRModule, I printed the output node (already converted to Relay):

    CallNode(Op(concatenate), [Tuple([CallNode(Op(nn.bias_add), [CallNode(Op(nn.conv2d), [Var(data, ty=TensorType([1, 3, 224, 224], float32)), Var(_param_1, ty=TensorType([3, 3, 3, 3], float32))], relay.attrs.Conv2DAttrs(0x389c458), []), Var(_param_2, ty=TensorType([3], float32))], relay.attrs.BiasAddAttrs(0x382d268), []), CallNode(Op(nn.bias_add), [CallNode(Op(nn.conv2d), [Var(data, ty=TensorType([1, 3, 224, 224], float32)), Var(_param_3, ty=TensorType([3, 3, 5, 5], float32))], relay.attrs.Conv2DAttrs(0x3890f28), []), Var(_param_4, ty=TensorType([3], float32))], relay.attrs.BiasAddAttrs(0x380fda8), [])])], relay.attrs.ConcatenateAttrs(0x38972a8), [])

Can someone help me understand why this is happening? Thanks!