I followed the tutorial here and could finish it without errors. However, when I changed the demo model file from

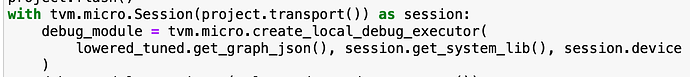

to my own TFLite file, the project builds and flashes to the board, but an error occurs when opening the session. The error is:

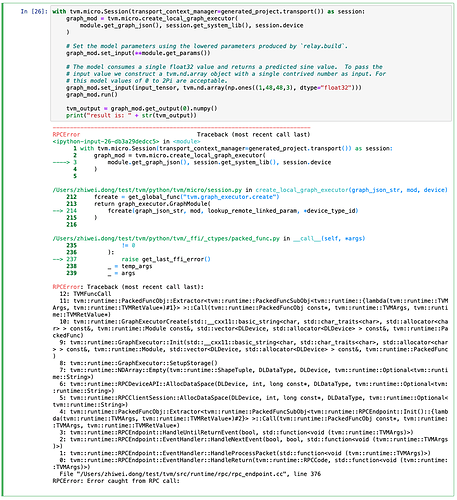

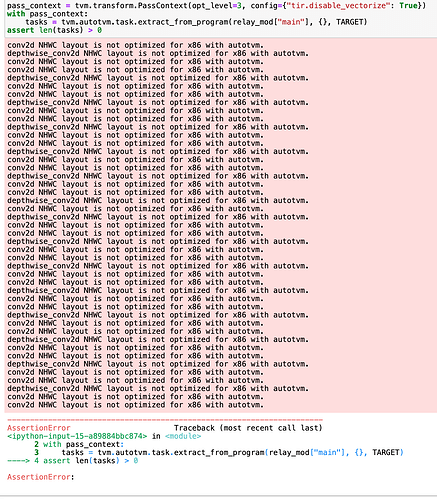

I also tried another tutorial, on autotuning with microTVM, here. It runs cleanly with that tutorial's demo model too, but with my test TFLite file, `tasks = tvm.autotvm.task.extract_from_program(relay_mod["main"], {}, TARGET)` returns no tasks (`len(tasks)` is 0).

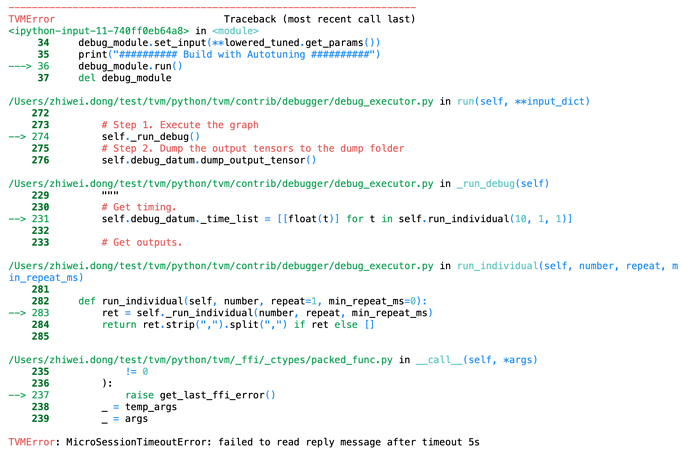
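To compare the operators in my model with those in the demo model (which does yield tasks), a small pure-Python helper can count operator calls in the Relay text, e.g. the output of `print(relay_mod)`. This is only a rough sketch: the regex and the helper names `count_ops` / `diff_ops` are my own assumptions, not part of TVM or either tutorial, and it is an approximate text scan rather than a real Relay parser.

```python
import re
from collections import Counter

# Approximate match for operator calls such as "nn.conv2d(", "qnn.conv2d(" or
# "clip(" in a Relay text dump. The lookbehind skips "@main(" and names that
# are only the tail of a longer dotted name. (Assumption: text-level scan only.)
_OP_CALL = re.compile(r"(?<![@%\w.])([A-Za-z_][\w.]*)\(")


def count_ops(relay_text: str) -> Counter:
    """Count how often each operator name is called in a Relay text dump."""
    return Counter(_OP_CALL.findall(relay_text))


def diff_ops(text_a: str, text_b: str) -> tuple:
    """Return (ops only in A, ops only in B), each as a sorted list."""
    ops_a = set(count_ops(text_a))
    ops_b = set(count_ops(text_b))
    return sorted(ops_a - ops_b), sorted(ops_b - ops_a)


if __name__ == "__main__":
    # Hypothetical snippets standing in for two printed Relay modules.
    mine = (
        "def @main(%x: Tensor[(1, 8), int8]) {\n"
        "  %0 = qnn.conv2d(%x, meta[relay.Constant][0]);\n"
        "  %1 = nn.bias_add(%0, meta[relay.Constant][1]);\n"
        "  clip(%1, a_min=0f, a_max=6f)\n"
        "}"
    )
    demo = "def @main(%x) {\n  %0 = nn.conv2d(%x, %w);\n  nn.relu(%0)\n}"
    print(count_ops(mine))
    print(diff_ops(mine, demo))
```

Running it on the two real dumps would at least show whether my model uses different operators (for example quantized `qnn.*` ops) than the model that produces tasks.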

If I replace my model with the TVM Conf 20 demo model, which is here, task extraction and autotuning work, but running it fails with `MicroSessionTimeoutError: failed to read reply message after timeout 5s`.

My test TFLite files are here, in the `mcunet` folder; they are downloaded with the `jobs/download` script.

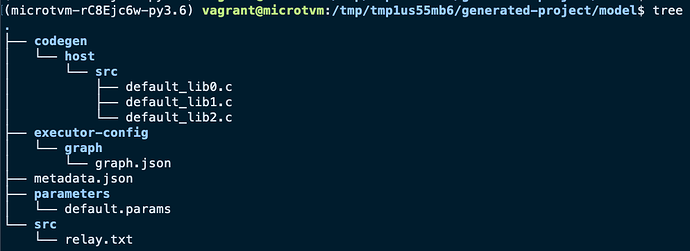

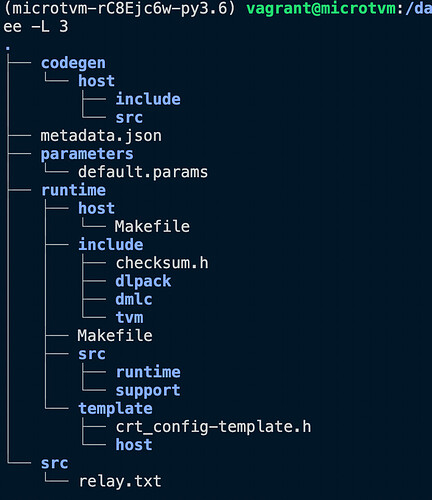

Relay model log (partial):

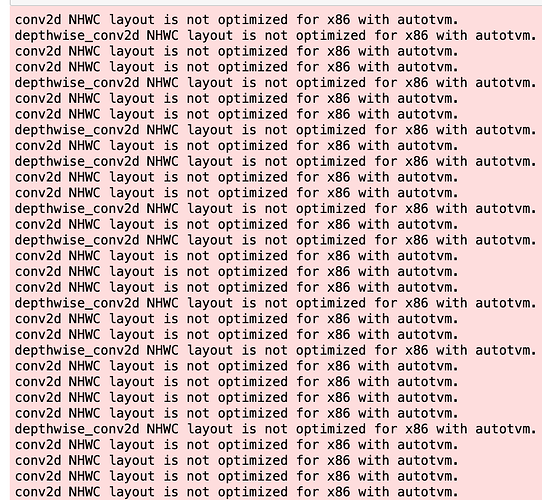

Relay build warnings:

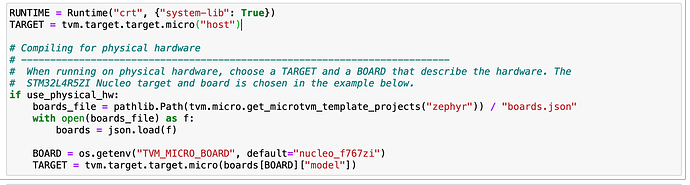

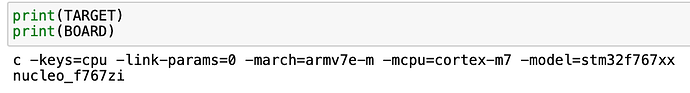

Build config:

Environment:

macOS 12.3.1 + VirtualBox 6.1.24 + Vagrant 2.7 + MacBook Pro 2018 15-inch (Intel i7-8850H) + tutorial VM

I can pass 9 of the 10 Zephyr tests.

I'm new to TVM, microTVM, and MCUs. My board is a `nucleo_f767zi`; I changed `board.json` and `target.py` to support this board. I'm quite confused by this situation and would appreciate any useful answers. Thank you all.