Hi, I am getting a memory leak in TVM while building a Relay module for target: llvm-x86-64

Version: tvm-0.7, platform: Linux, TensorFlow: v2.4.0

I made a small change to tensorflow_ops.py to enable the TensorFlow Einsum op, because my model uses it:

>     +def _einsum():
>     +    def _impl(inputs, attr, params, mod):
>     +        equation = attr["equation"].decode("utf-8")
>     +        return _op.einsum(inputs, equation)
>     +    return _impl
>     +
>     +
>     @@ -2902,6 +2909,7 @@ _convert_map = {
>          "DepthToSpace": _depth_to_space(),
>          "DepthwiseConv2dNative": _conv("depthwise"),
>          "Dilation2D": _dilation2d(),
>     +    "Einsum": _einsum(),
>          "Elu": _elu(),
>          "Equal": _broadcast("equal"),
>          "Erf": AttrCvt("erf"),

Here is my code:

    def export_tvm_model(graph_def, shape_dict, so_path):
        # <- the Relay IRModule is generated fine
        mod, params = tensorflow2.from_tensorflow(graph_def,
                                                  shape=shape_dict, outputs=outputs)
        target = tvm.target.Target("llvm", host="llvm")
        # <- the memory leak happens here
        with tvm.transform.PassContext(opt_level=1):
            lib = relay.build(mod, target=target, params=params)
        lib.export_library(so_path)
        open(graph_json, "w").write(lib["get_graph_json"]())
        return lib

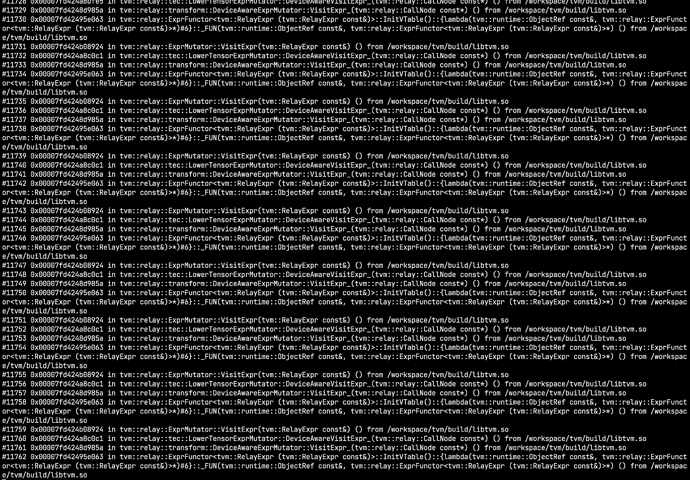

I used gdb to get a backtrace; it looks like an infinitely recursive call stack.
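One thing that might help tell a genuinely infinite recursion apart from one that is merely very deep: run the build in a thread with a much larger stack and see whether it then completes. The snippet below is only a minimal sketch using the Python standard library. The helper name `run_with_big_stack` and the `stack_mb` parameter are made up for illustration and are not part of TVM. If the recursion really never terminates, this will still crash, just later.

```python
import threading


def run_with_big_stack(fn, *args, stack_mb=256, **kwargs):
    """Run fn(*args, **kwargs) in a worker thread with a larger stack.

    Hypothetical helper, not a TVM API. Returns whatever fn returns and
    re-raises any exception fn raised.
    """
    result = {}

    def worker():
        try:
            result["value"] = fn(*args, **kwargs)
        except BaseException as exc:
            # Hand the failure back to the calling thread.
            result["error"] = exc

    # stack_size() only affects threads created after the call and
    # returns the previously configured size (0 means platform default).
    old_size = threading.stack_size(stack_mb * 1024 * 1024)
    try:
        thread = threading.Thread(target=worker)
        thread.start()
        thread.join()
    finally:
        # Restore the previous setting for any threads created later.
        threading.stack_size(old_size)

    if "error" in result:
        raise result["error"]
    return result["value"]
```

With the function from my code above, the call would look like `lib = run_with_big_stack(export_tvm_model, graph_def, shape_dict, so_path)`.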

Any help would be appreciated.