Description and bug reproduce

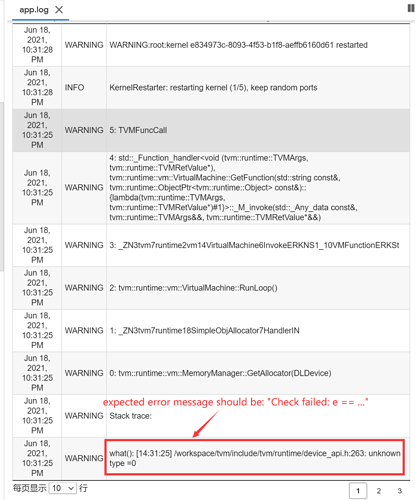

When trying to creating a Out-Of-Memory error in relay.vm, it seems TVM cannot locate the error correctly. i.e., it did not report the OOM error, but ignored it and continues to hit some upcoming errors.

To reproduce: Google Colab

This error happens in colab and my PC (CentOS, Clang13/gcc7/gcc9, etc.).

Troubleshooting

I did some investigation on this but failed to come up with a gentle solution.

Background

When an OOM occurs in CUDA_CALL(cudaMalloc), it is expected to throw InternalError (by GetEntry().Finalize() in LogFatal’s destructor), the python end should report some like: Check failed: (e == cudaSuccess || e == cudaErrorCudartUnloading) is false: CUDA: out of memory\nStack trace:...

GDB Debugging

Before catch (i.e., __cxa_begin_catch), there are 2 more exceptions got “ignored” (nobody catches them and std::terminate is not called).

Ideally I think it is expected to let the first exception (i.e., cuda OOM) result in std::terminate(). Since previous exceptions are “ignored”, the program later performs “undefined behaviors” which finally produce error message elsewhere.

...

(gdb) catch catch

Catchpoint 1 (catch)

(gdb) catch throw

Catchpoint 2 (throw)

(gdb) catch rethrow

Catchpoint 3 (rethrow)

(gdb) r

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/usr/lib64/libthread_db.so.1".

[New Thread 0x7f30b6c50700 (LWP 88390)]

[New Thread 0x7f30b644f700 (LWP 88391)]

[New Thread 0x7f30adc4e700 (LWP 88392)]

...

[New Thread 0x7f2fb7c32700 (LWP 88420)]

warning: File "/home/ljw/lib64/libstdc++.so.6.0.24-gdb.py" auto-loading has been declined by your `auto-load safe-path' set to "$debugdir:$datadir/auto-load".

To enable execution of this file add

add-auto-load-safe-path /home/ljw/lib64/libstdc++.so.6.0.24-gdb.py

line to your configuration file "/home/ljw/.gdbinit".

To completely disable this security protection add

set auto-load safe-path /

line to your configuration file "/home/ljw/.gdbinit".

For more information about this security protection see the

"Auto-loading safe path" section in the GDB manual. E.g., run from the shell:

info "(gdb)Auto-loading safe path"

[Thread 0x7f306a447700 (LWP 88399) exited]

[Thread 0x7f2fe2437700 (LWP 88415) exited]

[Thread 0x7f2feac38700 (LWP 88414) exited]

[Thread 0x7f2ff3439700 (LWP 88413) exited]

...

[Thread 0x7f30b6c50700 (LWP 88390) exited]

[Detaching after fork from child process 88438]

[New Thread 0x7f2fb7c32700 (LWP 88442)]

[New Thread 0x7f2fc8433700 (LWP 88443)]

...

[New Thread 0x7f2fa0474700 (LWP 88472)]

One or more operators have not been tuned. Please tune your model for better performance. Use DEBUG logging level to see more details.

Cannot find config for target=cuda -keys=cuda,gpu -max_num_threads=1024 -thread_warp_size=32, workload=('conv2d_nchw.cuda', ('TENSOR', (any_dim, 3, 224, 224), 'float32'), ('TENSOR', (32, 3, 3, 3), 'float32'), (1, 1), (1, 1, 1, 1), (1, 1), 'float32'). A fallback configuration is used, which may bring great performance regression.

[New Thread 0x7f2f91c73700 (LWP 88477)]

[Thread 0x7f2fe2437700 (LWP 88464) exited]

[Thread 0x7f2fa0474700 (LWP 88472) exited]

...

[Thread 0x7f2fb7c32700 (LWP 88442) exited]

[Detaching after fork from child process 88478]

Running batch size = 0

[New Thread 0x7f2fa0474700 (LWP 88484)]

[New Thread 0x7f2fa0c75700 (LWP 88485)]

Running batch size = 1

Running batch size = 2

...

Running batch size = 62

--Type <RET> for more, q to quit, c to continue without paging--

Thread 1 "python" hit Catchpoint 2 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7f30c27ead40, tinfo=0x7f2facc4ca90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7f2fa9d4ab40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 2 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7f30c2c3a5b0, tinfo=0x7f2facc4ca90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7f2fa9d4ab40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 2 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7f30c23944e0, tinfo=0x7f2facc4ca90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7f2fa9d4ab40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 1 (exception caught), __cxxabiv1::__cxa_begin_catch (exc_obj_in=0x7f30c23944c0) at ../../../../libstdc++-v3/libsupc++/eh_catch.cc:84

84 PROBE2 (catch, objectp, header->exceptionType);

(gdb) c

Continuing.

terminate called after throwing an instance of 'tvm::runtime::InternalError'

Thread 1 "python" hit Catchpoint 3 (exception rethrown), __cxxabiv1::__cxa_rethrow () at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:114

114 PROBE2 (rethrow, __get_object_from_ambiguous_exception(header),

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 1 (exception caught), __cxxabiv1::__cxa_begin_catch (exc_obj_in=0x7f30c23944c0) at ../../../../libstdc++-v3/libsupc++/eh_catch.cc:84

84 PROBE2 (catch, objectp, header->exceptionType);

(gdb) c

Continuing.

what(): [23:56:01] /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/device_api.h:263: unknown type =0

Stack trace:

0: tvm::runtime::vm::MemoryManager::GetAllocator(DLDevice)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/memory_manager.cc:154

1: tvm::runtime::vm::StorageObj::~StorageObj()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/vm/memory_manager.h:126

2: tvm::runtime::SimpleObjAllocator::Handler<tvm::runtime::vm::StorageObj>::Deleter_(tvm::runtime::Object*)

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/memory.h:138

3: tvm::runtime::Object::DecRef()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:796

4: tvm::runtime::ObjectPtr<tvm::runtime::vm::StorageObj>::reset()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:442

5: tvm::runtime::ObjectPtr<tvm::runtime::vm::StorageObj>::~ObjectPtr()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:396

6: tvm::runtime::vm::VirtualMachine::RunLoop()

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:592

7: tvm::runtime::vm::VirtualMachine::Invoke(tvm::runtime::vm::VMFunction const&, std::vector<tvm::runtime::ObjectRef, std::allocator<tvm::runtime::ObjectRef> > const&)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:262

8: tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:132

9: std::_Function_handler<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*), tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0>::_M_invoke(std::_Any_data const&, tvm::runtime::TVMArgs&&, tvm::runtime::TVMRetValue*&&)

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:316

10: std::function<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)>::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:706

11: tvm::runtime::PackedFunc::CallPacked(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/packed_func.h:1150

12: TVMFuncCall

at /data/ljw_data/PROJECTS/tvm/src/runtime/c_runtime_api.cc:474

Thread 1 "python" received signal SIGABRT, Aborted.

0x00007f30c01c8387 in raise () from /usr/lib64/libc.so.6

(gdb)

And if we make a breakpoint at the constructor of InternalError, we can see CUDA_CALL(cudaMalloc) did throw and its message is complete.

(gdb) list

242 std::ostringstream s;

243 // XXX: Do not change this format, otherwise all error handling in python will break (because it

244 // parses the message to reconstruct the error type).

245 // TODO(tkonolige): Convert errors to Objects, so we can avoid the mess of formatting/parsing

246 // error messages correctly.

247 s << "[" << std::put_time(std::localtime(&time), "%H:%M:%S") << "] " << file << ":" << lineno

248 << ": " << message << std::endl;

249 if (backtrace.size() > 0) {

250 s << backtrace << std::endl;

251 }

(gdb) n

247 s << "[" << std::put_time(std::localtime(&time), "%H:%M:%S") << "] " << file << ":" << lineno

(gdb) n

248 << ": " << message << std::endl;

(gdb) n

248 << ": " << message << std::endl;

(gdb) n

249 if (backtrace.size() > 0) {

(gdb) n

250 s << backtrace << std::endl;

(gdb) n

250 s << backtrace << std::endl;

(gdb) n

252 full_message_ = s.str();

(gdb) n

253 }

(gdb) printf "%s\n", full_message_.c_str()

[00:05:55] /data/ljw_data/PROJECTS/tvm/src/runtime/cuda/cuda_device_api.cc:118:

---------------------------------------------------------------

An error occurred during the execution of TVM.

For more information, please see: https://tvm.apache.org/docs/errors.html

---------------------------------------------------------------

Check failed: (e == cudaSuccess || e == cudaErrorCudartUnloading) is false: CUDA: out of memory

Stack trace:

0: tvm::runtime::CUDADeviceAPI::AllocDataSpace(DLDevice, unsigned long, unsigned long, DLDataType)

at /data/ljw_data/PROJECTS/tvm/src/runtime/cuda/cuda_device_api.cc

1: tvm::runtime::vm::PooledAllocator::Alloc(unsigned long, unsigned long, DLDataType)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/pooled_allocator.h:60

2: tvm::runtime::vm::VirtualMachine::RunLoop()

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:587

3: tvm::runtime::vm::VirtualMachine::Invoke(tvm::runtime::vm::VMFunction const&, std::vector<tvm::runtime::ObjectRef, std::allocator<tvm::runtime::ObjectRef> > const&)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:262

4: tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:132

5: std::_Function_handler<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*), tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0>::_M_invoke(std::_Any_data const&, tvm::runtime::TVMArgs&&, tvm::runtime::TVMRetValue*&&)

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:316

6: std::function<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)>::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:706

7: tvm::runtime::PackedFunc::CallPacked(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/packed_func.h:1150

8: TVMFuncCall

at /data/ljw_data/PROJECTS/tvm/src/runtime/c_runtime_api.cc:474

If we look at how they skipped the exceptions, I found not “__cxa_catch” or “abort” were called after the first 2 exceptions.

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 4 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7fb1a0865170, tinfo=0x7fb088d6fa90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7fb085e6db40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) bt

#0 __cxxabiv1::__cxa_throw (obj=0x7fb1a0865170, tinfo=0x7fb088d6fa90 <typeinfo for tvm::runtime::InternalError>, dest=0x7fb085e6db40 <tvm::runtime::InternalError::~InternalError()>)

at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

#1 0x00007fb085e6d494 in tvm::runtime::detail::LogFatal::Entry::Finalize (this=<optimized out>) at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/logging.h:356

#2 0x00007fb08701f7ea in tvm::runtime::CUDADeviceAPI::AllocDataSpace (this=<optimized out>, dev=..., nbytes=398196736, alignment=<optimized out>, type_hint=...)

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/basic_ios.h:158

#3 0x00007fb086fcbf35 in tvm::runtime::vm::PooledAllocator::Alloc (this=0x7fb1a0430410, nbytes=<optimized out>, alignment=64, type_hint=...)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/pooled_allocator.h:60

#4 0x00007fb086fd1110 in tvm::runtime::vm::VirtualMachine::RunLoop (this=<optimized out>, this@entry=0x7fb1a04a8660) at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:587

#5 0x00007fb086fd52a8 in tvm::runtime::vm::VirtualMachine::Invoke (this=0x7fb1a04a8660, func=..., args=...) at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:262

#6 tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const (this=<optimized out>, args=..., rv=0x7ffd10aaf908) at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:132

#7 std::_Function_handler<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*), tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0>::_M_invoke(std::_Any_data const&, tvm::runtime::TVMArgs&&, tvm::runtime::TVMRetValue*&&) (

__functor=..., __args=<optimized out>, __args=<optimized out>) at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:316

#8 0x00007fb086f78fc6 in std::function<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)>::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const (this=0x7fb1a0865170,

__args=0x7ffd10aaf908, __args=0x7ffd10aaf908) at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:706

#9 tvm::runtime::PackedFunc::CallPacked (this=0x7fb1a0865170, args=..., rv=0x7ffd10aaf908) at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/packed_func.h:1150

#10 TVMFuncCall (func=0x7fb1a0865170, args=<optimized out>, arg_type_codes=<optimized out>, num_args=-1601783936, ret_val=0x7fb168321790, ret_type_code=0x7fb168321a90)

at /data/ljw_data/PROJECTS/tvm/src/runtime/c_runtime_api.cc:474

#11 0x00007fb195b629dd in ffi_call_unix64 () from /home/ljw/anaconda3/envs/tvm-keras/lib/python3.8/lib-dynload/../../libffi.so.7

--Type <RET> for more, q to quit, c to continue without paging--Quit

(gdb) n

78 __cxa_eh_globals *globals = __cxa_get_globals ();

(gdb) list

73 __cxxabiv1::__cxa_throw (void *obj, std::type_info *tinfo,

74 void (_GLIBCXX_CDTOR_CALLABI *dest) (void *))

75 {

76 PROBE2 (throw, obj, tinfo);

77

78 __cxa_eh_globals *globals = __cxa_get_globals ();

79 globals->uncaughtExceptions += 1;

80 // Definitely a primary.

81 __cxa_refcounted_exception *header =

82 __cxa_init_primary_exception(obj, tinfo, dest);

(gdb) n

79 globals->uncaughtExceptions += 1;

(gdb) n

82 __cxa_init_primary_exception(obj, tinfo, dest);

(gdb) n

88 _Unwind_RaiseException (&header->exc.unwindHeader);

(gdb) n

83 header->referenceCount = 1;

(gdb) n

88 _Unwind_RaiseException (&header->exc.unwindHeader);

(gdb) n

0x00007fb085e6d494 in tvm::runtime::detail::LogFatal::Entry::Finalize (this=<optimized out>) at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/logging.h:356

356 throw InternalError(file_, lineno_, stream_.str());

(gdb) n

0x00007fb086fcbf7d in tvm::runtime::vm::PooledAllocator::Alloc (this=0x7fb1a0430410, nbytes=<optimized out>, alignment=64, type_hint=...)

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_mutex.h:107

107 __throw_system_error(__e);

(gdb) list

102 {

103 int __e = __gthread_mutex_lock(&_M_mutex);

104

105 // EINVAL, EAGAIN, EBUSY, EINVAL, EDEADLK(may)

106 if (__e)

107 __throw_system_error(__e);

108 }

109

110 bool

111 try_lock() noexcept

(gdb) n

64 }

(gdb) n

0x00007fb086fd1dca in tvm::runtime::vm::VirtualMachine::RunLoop (this=<optimized out>, this@entry=0x7fb1a04a8660) at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:420

420 throw std::runtime_error("VM encountered fatal error");

(gdb) list

415 WriteRegister(instr.dst, from_obj);

416 pc_++;

417 goto main_loop;

418 }

419 case Opcode::Fatal: {

420 throw std::runtime_error("VM encountered fatal error");

421 }

422 case Opcode::LoadConst: {

423 auto constant_obj = exec_->constants[instr.const_index];

424 // We cache the allocated object in the constant pool. To measure, the

(gdb) n

592 }

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 4 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7fb1a0414c20, tinfo=0x7fb088d6fa90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7fb085e6db40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) n

78 __cxa_eh_globals *globals = __cxa_get_globals ();

(gdb) c

Continuing.

Thread 1 "python" hit Catchpoint 4 (exception thrown), __cxxabiv1::__cxa_throw (obj=0x7fb1a02c9b80, tinfo=0x7fb088d6fa90 <typeinfo for tvm::runtime::InternalError>,

dest=0x7fb085e6db40 <tvm::runtime::InternalError::~InternalError()>) at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:76

76 PROBE2 (throw, obj, tinfo);

(gdb) n

78 __cxa_eh_globals *globals = __cxa_get_globals ();

(gdb) n

79 globals->uncaughtExceptions += 1;

(gdb) n

82 __cxa_init_primary_exception(obj, tinfo, dest);

(gdb) n

88 _Unwind_RaiseException (&header->exc.unwindHeader);

(gdb) n

83 header->referenceCount = 1;

(gdb) n

88 _Unwind_RaiseException (&header->exc.unwindHeader);

(gdb) n

0x00007fb085e6d494 in tvm::runtime::detail::LogFatal::Entry::Finalize (this=<optimized out>) at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/logging.h:356

356 throw InternalError(file_, lineno_, stream_.str());

(gdb) n

0x00007fb086fcb831 in tvm::runtime::vm::MemoryManager::GetAllocator (dev=...) at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/memory_manager.cc:154

154 LOG(FATAL) << "Allocator for " << DeviceName(dev.device_type) << "(" << dev.device_id

(gdb) n

Thread 1 "python" hit Catchpoint 2 (exception caught), __cxxabiv1::__cxa_begin_catch (exc_obj_in=0x7fb1a02c9b60) at ../../../../libstdc++-v3/libsupc++/eh_catch.cc:84

84 PROBE2 (catch, objectp, header->exceptionType);

(gdb) n

90 }

(gdb) n

terminate called after throwing an instance of 'tvm::runtime::InternalError'

Thread 1 "python" hit Catchpoint 5 (exception rethrown), __cxxabiv1::__cxa_rethrow () at ../../../../libstdc++-v3/libsupc++/eh_throw.cc:114

114 PROBE2 (rethrow, __get_object_from_ambiguous_exception(header),

(gdb) n

124 _Unwind_Resume_or_Rethrow (&header->unwindHeader);

(gdb) n

0x00007fb07ff28475 in __gnu_cxx::__verbose_terminate_handler () at ../../../../libstdc++-v3/libsupc++/vterminate.cc:95

95 abort();

(gdb) n

82 __catch(const exception& exc)

(gdb) n

Thread 1 "python" hit Catchpoint 2 (exception caught), __cxxabiv1::__cxa_begin_catch (exc_obj_in=0x7fb1a02c9b60) at ../../../../libstdc++-v3/libsupc++/eh_catch.cc:84

84 PROBE2 (catch, objectp, header->exceptionType);

(gdb) n

90 }

(gdb) n

__gnu_cxx::__verbose_terminate_handler () at ../../../../libstdc++-v3/libsupc++/vterminate.cc:84

(gdb) c

Continuing.

[00:29:57] /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/device_api.h:263: unknown type =0

Stack trace:

0: tvm::runtime::vm::MemoryManager::GetAllocator(DLDevice)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/memory_manager.cc:154

1: tvm::runtime::vm::StorageObj::~StorageObj()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/vm/memory_manager.h:126

2: tvm::runtime::SimpleObjAllocator::Handler<tvm::runtime::vm::StorageObj>::Deleter_(tvm::runtime::Object*)

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/memory.h:138

3: tvm::runtime::Object::DecRef()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:796

4: tvm::runtime::ObjectPtr<tvm::runtime::vm::StorageObj>::reset()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:442

5: tvm::runtime::ObjectPtr<tvm::runtime::vm::StorageObj>::~ObjectPtr()

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/object.h:396

6: tvm::runtime::vm::VirtualMachine::RunLoop()

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:592

7: tvm::runtime::vm::VirtualMachine::Invoke(tvm::runtime::vm::VMFunction const&, std::vector<tvm::runtime::ObjectRef, std::allocator<tvm::runtime::ObjectRef> > const&)

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:262

8: tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/src/runtime/vm/vm.cc:132

9: std::_Function_handler<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*), tvm::runtime::vm::VirtualMachine::GetFunction(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::$_0>::_M_invoke(std::_Any_data const&, tvm::runtime::TVMArgs&&, tvm::runtime::TVMRetValue*&&)

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:316

10: std::function<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)>::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /home/ljw/bin/../lib/gcc/x86_64-pc-linux-gnu/7.5.0/../../../../include/c++/7.5.0/bits/std_function.h:706

11: tvm::runtime::PackedFunc::CallPacked(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const

at /data/ljw_data/PROJECTS/tvm/include/tvm/runtime/packed_func.h:1150

12: TVMFuncCall

at /data/ljw_data/PROJECTS/tvm/src/runtime/c_runtime_api.cc:474

Discussion

Some dirty fixes

Marking the destructor noexcept(true) can help the first exception not being ignored. (but this is a bit ugly…

Throw in destructor?

According to ISO C++ WIKI and many others, it is suggested to avoid “throw” in destructor, since things becomes tricky when dealing with stack unwinding.

Other libraries, though supported “throw while logging” functionality, avoids throw anything in the destructor (BOOST_LOG_THROW) or at least made it optional (GLOG’s LogMessageFatal).

Disabling such pattern in TVM’s logger might make the codes more robust, but it costs simplicity (it is cool to write ICHECK(...) like C++ streams, while BOOST_LOG_THROW writes like BOOST_LOG_THROW(lg, fatal, boost::exception, "Error: " << dump);).