Hi all, I ran into a confusing problem: for the same ONNX model and the same inputs, the module compiled with TVM produces a different inference result than onnxruntime.

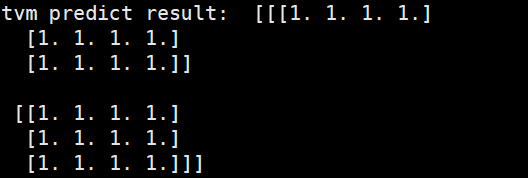

TVM predict result:

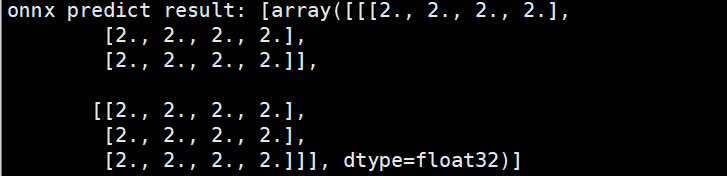

ONNX predict result:

Script to reproduce this bug:

    import numpy as np
    import onnx
    import onnxruntime as rt
    import tvm
    from tvm import relay
    from tvm.contrib import graph_runtime

    onnx_model_path = "model_seq.onnx"
    model = onnx.load(onnx_model_path)

    # Import the ONNX model into Relay with fixed input shapes and build it for CPU.
    irmod, params = relay.frontend.from_onnx(model, {'X': [2, 3, 4], 'Y': [2, 3, 4], 'Z': [2, 3, 4]}, freeze_params=True)
    graph, lib, params = relay.build(irmod, target='llvm', params=params)

    # Constant-valued inputs: X is all ones, Y is all zeros, Z is all twos.
    input_data_x = np.full([2, 3, 4], 1, dtype='float32')
    input_data_y = np.full([2, 3, 4], 0, dtype='float32')
    input_data_z = np.full([2, 3, 4], 2, dtype='float32')

    # Run the compiled module with the TVM graph runtime.
    module = graph_runtime.create(graph, lib, tvm.cpu(0))
    module.set_input('X', input_data_x)
    module.set_input('Y', input_data_y)
    module.set_input('Z', input_data_z)
    module.set_input(**params)
    module.run()

    # Output name and shape (not used below).
    outputs_dict = {'out': [2, 3, 4]}
    res_tvm = module.get_output(0, tvm.nd.empty([2, 3, 4])).asnumpy()
    print("tvm predict result: ", res_tvm)

    # ---------------------------------------------------------------------------
    # Run the same model and inputs with onnxruntime for comparison.
    sess = rt.InferenceSession(onnx_model_path)
    res_frame = sess.run(['out'], {'X': input_data_x, 'Y': input_data_y, 'Z': input_data_z})
    print("onnx predict result:", res_frame)
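
To make the mismatch easier to quantify than by comparing the two printouts by eye, the outputs could be compared numerically. This is only a minimal sketch: `compare_outputs` is a hypothetical helper (not part of TVM or onnxruntime), the tolerances are arbitrary assumptions, and the arrays in the demo are stand-ins rather than the real outputs of the model.

```python
import numpy as np


def compare_outputs(a, b, rtol=1e-5, atol=1e-5):
    """Hypothetical helper: summarize how two output arrays differ."""
    a = np.asarray(a)
    b = np.asarray(b)
    if a.shape != b.shape:
        # Different shapes cannot be compared element-wise.
        return {"match": False, "max_abs_diff": None, "num_mismatched": None}
    # Element-wise absolute difference, computed in float64 to avoid extra rounding.
    diff = np.abs(a.astype(np.float64) - b.astype(np.float64))
    # Element-wise closeness check with the assumed tolerances.
    close = np.isclose(a, b, rtol=rtol, atol=atol)
    return {
        "match": bool(close.all()),
        "max_abs_diff": float(diff.max()) if diff.size else 0.0,
        "num_mismatched": int((~close).sum()),
    }


if __name__ == "__main__":
    # Stand-in arrays only; these are NOT the actual TVM/onnxruntime outputs.
    fake_tvm = np.full([2, 3, 4], 1, dtype="float32")
    fake_onnx = np.full([2, 3, 4], 2, dtype="float32")
    print(compare_outputs(fake_tvm, fake_onnx))
```

In the script above it would be called as `compare_outputs(res_tvm, res_frame[0])`, since `sess.run` returns a list with one array per requested output.
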

Model used in the script:

You can download the minimal ONNX model that reproduces the problem from this link: model_seq.onnx