I am trying to deploy the FSRCNN model (https://github.com/yjn870/FSRCNN-pytorch) on CPU/GPU/VTA using TVM. This is the script I am running:

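#imports (preprocess comes from utils.py in the FSRCNN-pytorch repo)

import onnx

from tvm import relay

from tvm.contrib.download import download_testdata

from utils import preprocess

#load model

onnx_model = onnx.load('fsrcnn.onnx')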

#load input image

from PIL import Image

img_url = "https://github.com/dmlc/mxnet.js/blob/main/data/cat.png?raw=true"

img_path = download_testdata(img_url, "cat.png", module="data")

img = Image.open(img_path)

img_rgb = img.convert("RGB")

image_width = (img.width // 3) * 3

image_height = (img.height // 3) * 3

hr = img_rgb.resize((image_width, image_height), resample=Image.BICUBIC)

lr = hr.resize((hr.width // 3, hr.height // 3), resample=Image.BICUBIC)

bicubic = lr.resize((lr.width * 3, lr.height * 3), resample=Image.BICUBIC)

lr, _ = preprocess(lr, 'cpu')

hr, _ = preprocess(hr, 'cpu')

_, ycbcr = preprocess(bicubic, 'cpu')

#compile the model with relay

target = "llvm"

input_name = "0"

print(lr.shape)

shape_dict = {input_name: tuple(lr.shape)}

mod, params = relay.frontend.from_onnx(onnx_model, shape_dict)

Importing the model fails. The script prints the input shape and then raises the following error:

torch.Size([1, 1, 85, 85])

Incompatible broadcast type TensorType([56], float32) and TensorType([1, 56, 85, 85], float32)

Traceback (most recent call last):

File "deploy_fsrcnn_simple.py", line 41, in <module>

mod, params = relay.frontend.from_onnx(onnx_model, shape_dict)

File "/home/tvm/python/tvm/relay/frontend/onnx.py", line 5745, in from_onnx

mod, params = g.from_onnx(graph, opset)

File "/home/tvm/python/tvm/relay/frontend/onnx.py", line 5415, in from_onnx

self._construct_nodes(graph)

File "/home/tvm/python/tvm/relay/frontend/onnx.py", line 5527, in _construct_nodes

op = self._convert_operator(op_name, inputs, attr, self.opset)

File "/home/tvm/python/tvm/relay/frontend/onnx.py", line 5638, in _convert_operator

sym = convert_map[op_name](inputs, attrs, self._params)

File "/home/tvm/python/tvm/relay/frontend/onnx.py", line 599, in _impl_v1

input_shape = infer_shape(data)

File "/home/tvm/python/tvm/relay/frontend/common.py", line 526, in infer_shape

out_type = infer_type(inputs, mod=mod)

File "/home/tvm/python/tvm/relay/frontend/common.py", line 501, in infer_type

new_mod = _transform.InferType()(new_mod)

File "/home/tvm/python/tvm/ir/transform.py", line 161, in __call__

return _ffi_transform_api.RunPass(self, mod)

File "/home/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__

raise get_last_ffi_error()

tvm.error.DiagnosticError: Traceback (most recent call last):

6: TVMFuncCall

5: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::TypedPackedFunc<tvm::IRModule (tvm::transform::Pass, tvm::IRModule)>::AssignTypedLambda<tvm::transform::{lambda(tvm::transform::Pass, tvm::IRModule)#7}>(tvm::transform::{lambda(tvm::transform::Pass, tvm::IRModule)#7}, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >)::{lambda(tvm::runtime::TVMArgs const&, tvm::runtime::TVMRetValue*)#1}> >::Call(tvm::runtime::PackedFuncObj const*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, tvm::runtime::TVMRetValue)

4: tvm::transform::Pass::operator()(tvm::IRModule) const

3: tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const

2: tvm::transform::ModulePassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const

1: _ZN3tvm7runtime13PackedFuncObj9ExtractorINS0_16PackedFuncSubObjIZNS0_15TypedPackedFuncIFNS_8IRModuleES5_NS_9transform11PassContextEEE17AssignTypedLambdaIZNS_5relay9transform9InferTypeEvEUlS5_RKS7_E_EEvT_EUlRKNS0_7TVMArgsEPNS0_11TVMRetValueEE_EEE4CallEPKS1_SH_SL_

0: tvm::DiagnosticContext::Render()

File "/home/tvm/src/ir/diagnostic.cc", line 105

DiagnosticError: one or more error diagnostics were emitted, please check diagnostic render for output.

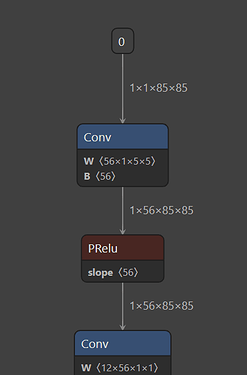

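The two types in the message, TensorType([56], float32) and TensorType([1, 56, 85, 85], float32), come from the first Conv layer and the PRelu that follows it (see the image above): [1, 56, 85, 85] matches the Conv output, and [56] appears to be the PRelu slope.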
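To illustrate why these two shapes clash, here is a minimal sketch using plain NumPy broadcasting. It assumes only the two shapes from the error message and that the type check follows NumPy-style (trailing-axis) broadcasting; it does not touch TVM or the model, and `try_broadcast` is just a helper name made up for this example.

```python
import numpy as np

conv_out_shape = (1, 56, 85, 85)  # NCHW output of the first Conv, from the error message
slope_shape = (56,)               # 1-D PRelu slope, from the error message


def try_broadcast(a, b):
    """Return the broadcast shape of a and b, or None if they are incompatible."""
    try:
        return np.broadcast_shapes(a, b)
    except ValueError:
        return None


# Trailing axes are aligned first, so 56 is compared against W=85 and fails.
flat_result = try_broadcast(slope_shape, conv_out_shape)

# A slope shaped (C, 1, 1) lines up with the channel axis instead.
reshaped_result = try_broadcast((56, 1, 1), conv_out_shape)

print(flat_result)
print(reshaped_result)
```

If this is indeed the cause, storing the slope as (56, 1, 1) in the ONNX file might avoid the error, but I have not verified that on this model.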

Does anybody have an idea how to fix this? Any help would be appreciated!