I want to import a scripted, rather than traced, PyTorch model into TVM. Why? I am working with a model that cannot be traced at all because its input data is dynamic; only scripting works.

According to the documentation (https://tvm.apache.org/docs/api/python/relay/frontend.html#tvm.relay.frontend.from_pytorch), scripting is not supported: "Note: We currently only support traces (ie: torch.jit.trace(model, input))"

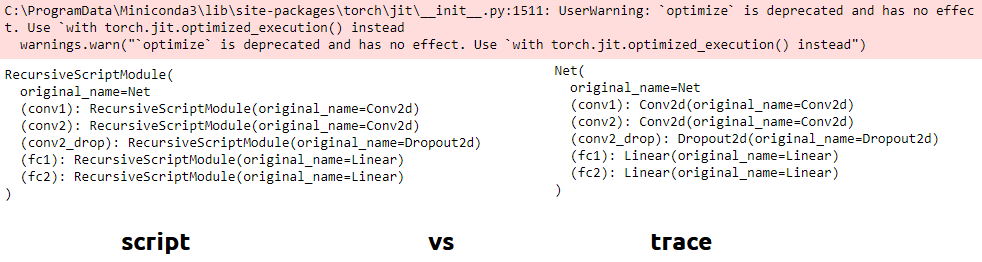

The difference between scripting and tracing for a very basic neural net can be seen below:
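As a rough illustration (this is not my actual model), the two export paths can be compared on a tiny network. `TinyNet`, its layer sizes, and the `op_kinds` helper are hypothetical names made up for this sketch. It exports the same module with `torch.jit.trace` and `torch.jit.script`, then collects the operator kinds in each inlined graph, which is roughly what a frontend has to map to its own operators.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyNet(nn.Module):
    """Hypothetical minimal network, used only for illustration."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3)
        self.drop = nn.Dropout2d()

    def forward(self, x):
        return F.relu(self.drop(self.conv(x)))


def op_kinds(graph):
    """Collect every node kind in a TorchScript graph, including nested blocks."""
    kinds = set()

    def visit(block):
        for node in block.nodes():
            kinds.add(node.kind())
            for sub in node.blocks():
                visit(sub)

    visit(graph)
    return kinds


model = TinyNet().eval()
example = torch.randn(1, 1, 8, 8)

# Tracing records the ops executed for this one example input.
traced = torch.jit.trace(model, example)
# Scripting compiles the Python source, including branches and checks.
scripted = torch.jit.script(model)

traced_ops = op_kinds(traced.inlined_graph)
scripted_ops = op_kinds(scripted.inlined_graph)

print("traced only:  ", sorted(traced_ops - scripted_ops))
print("scripted only:", sorted(scripted_ops - traced_ops))
```

The exact operator names depend on the PyTorch version, so no output is shown here. The point is only that the scripted graph can contain operator kinds that never appear in the traced one.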

When I use the traced version with TVM, it works fine. When I try the scripted version, the following error occurs: `NotImplementedError: The following operators are not implemented: ['aten::feature_dropout_', 'aten::__is__', 'aten::format', 'aten::conv2d', 'prim::unchecked_cast', 'aten::warn', 'aten::dim', 'aten::__isnot__']`

It could be that some operators are simply not implemented in TVM, but since a lot of work has been done on this (see e.g. apache/tvm#5133), that seems unlikely. Does anyone know of a proposed solution for this?