Then I tried another example, https://tvm.apache.org/docs/how_to/work_with_microtvm/micro_autotune.html, and ran into some problems there as well.

I didn't modify the example code except for the board config:

`boards[BOARD]` is:

`{'board': 'mimxrt1060_evk', 'model': 'imxrt10xx', 'is_qemu': False, 'fpu': True, 'vid_hex': '0d28', 'pid_hex': '0204'}`
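The `vid_hex`/`pid_hex` entries are the board's USB vendor and product IDs. Our understanding (not verified against the template project's source) is that the Zephyr template uses them to locate the board's serial port, so a quick host-side check is whether any serial device with those IDs is visible at all. The sketch below assumes pyserial is installed; `find_board_ports` and `list_board_ports` are hypothetical helpers, not TVM APIs.

```python
def find_board_ports(ports, vid_hex, pid_hex):
    """Filter (device, vid, pid) tuples down to devices matching the board's USB IDs."""
    vid, pid = int(vid_hex, 16), int(pid_hex, 16)
    # Ports without USB info report vid/pid as None and simply never match.
    return [dev for dev, p_vid, p_pid in ports if p_vid == vid and p_pid == pid]


def list_board_ports(board):
    """List serial devices on this machine matching board['vid_hex'] / board['pid_hex']."""
    # pyserial is imported lazily so find_board_ports() works without it installed.
    from serial.tools import list_ports

    ports = [(p.device, p.vid, p.pid) for p in list_ports.comports()]
    return find_board_ports(ports, board["vid_hex"], board["pid_hex"])


# Values copied from the board config above.
BOARD_CFG = {"board": "mimxrt1060_evk", "vid_hex": "0d28", "pid_hex": "0204"}
```

Calling `list_board_ports(BOARD_CFG)` should return at least one device path when the EVK is plugged in; an empty list would point at a USB/driver issue rather than at TVM.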

Running the auto-tuning code gives the following output in the terminal:

Current/Best: 0.00/ 0.00 MFLOPS | Progress: (0/10) | 0.00 s

model/codegen/host/src/lib1.c: In function ‘default_function’:

model/codegen/host/src/lib1.c:24:9: warning: unused variable ‘arg_data_shape’ [-Wunused-variable]

24 | void* arg_data_shape = (((DLTensor*)arg_data)[0].shape);

| ^~~~~~~~~~~~~~

model/codegen/host/src/lib1.c:21:9: warning: unused variable ‘arg_kernel_shape’ [-Wunused-variable]

21 | void* arg_kernel_shape = (((DLTensor*)arg_kernel)[0].shape);

| ^~~~~~~~~~~~~~~~

model/codegen/host/src/lib1.c:17:9: warning: unused variable ‘arg_conv2d_NCHWc_shape’ [-Wunused-variable]

17 | void* arg_conv2d_NCHWc_shape = (((DLTensor*)arg_conv2d_NCHWc)[0].shape);

| ^~~~~~~~~~~~~~~~~~~~~~

model/codegen/host/src/lib1.c:15:11: warning: unused variable ‘arg_data_code’ [-Wunused-variable]

15 | int32_t arg_data_code = arg_type_ids[2];

| ^~~~~~~~~~~~~

model/codegen/host/src/lib1.c:13:11: warning: unused variable ‘arg_kernel_code’ [-Wunused-variable]

13 | int32_t arg_kernel_code = arg_type_ids[1];

| ^~~~~~~~~~~~~~~

model/codegen/host/src/lib1.c:11:11: warning: unused variable ‘arg_conv2d_NCHWc_code’ [-Wunused-variable]

11 | int32_t arg_conv2d_NCHWc_code = arg_type_ids[0];

| ^~~~~~~~~~~~~~~~~~~~~

Current/Best: 359.22/ 359.22 MFLOPS | Progress: (1/10) | 4.82 s

microTVM runtime: 0-length read, exiting!

Current/Best: 276.81/ 359.22 MFLOPS | Progress: (2/10) | 7.82 s

microTVM runtime: 0-length read, exiting!

Current/Best: 369.08/ 369.08 MFLOPS | Progress: (3/10) | 10.76 s

microTVM runtime: 0-length read, exiting!

Current/Best: 607.20/ 607.20 MFLOPS | Progress: (4/10) | 13.56 s

microTVM runtime: 0-length read, exiting!

Current/Best: 537.81/ 607.20 MFLOPS | Progress: (5/10) | 16.40 s

microTVM runtime: 0-length read, exiting!

Current/Best: 280.94/ 607.20 MFLOPS | Progress: (6/10) | 19.44 s

microTVM runtime: 0-length read, exiting!

Current/Best: 274.39/ 607.20 MFLOPS | Progress: (7/10) | 22.23 s

microTVM runtime: 0-length read, exiting!

Current/Best: 319.04/ 607.20 MFLOPS | Progress: (8/10) | 25.18 s

microTVM runtime: 0-length read, exiting!

Current/Best: 231.81/ 607.20 MFLOPS | Progress: (9/10) | 28.03 s

microTVM runtime: 0-length read, exiting!

Current/Best: 272.80/ 607.20 MFLOPS | Progress: (10/10) | 30.78 s Done.

microTVM runtime: 0-length read, exiting!
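In the terminal output above, the first number on each `Current/Best` line is roughly the throughput of the configuration just measured, and the second is the best seen so far in the run, so `Best` should behave as a running maximum of `Current`. The sketch below pulls those lines out and checks that property; the regex assumes the exact line format shown above, and `parse_progress` / `best_is_running_max` are hypothetical helpers.

```python
import re

# Matches e.g. "Current/Best: 537.81/ 607.20 MFLOPS | Progress: (5/10)"
PROGRESS_RE = re.compile(
    r"Current/Best:\s*([\d.]+)/\s*([\d.]+) MFLOPS \| Progress: \((\d+)/\d+\)"
)


def parse_progress(text):
    """Return (trial, current_mflops, best_mflops) for every progress line in text."""
    return [
        (int(m.group(3)), float(m.group(1)), float(m.group(2)))
        for m in PROGRESS_RE.finditer(text)
    ]


def best_is_running_max(rows):
    """True if each 'Best' equals the highest 'Current' seen up to that trial."""
    running = 0.0
    for _, current, best in rows:
        running = max(running, current)
        if abs(running - best) > 1e-6:
            return False
    return True
```

For the ten trials shown, the check holds: the best value changes only at trials 1, 3 and 4, and stays at 607.20 MFLOPS afterwards.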

And in the log file:

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 86, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 1]], ["tile_oc", "sp", [-1, 6]], ["tile_ow", "sp", [-1, 8]], ["unroll_kw", "ot", false]]}, "result": [[0.000262], 0, 2.5539603233337402, 1655886068.604009], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 85, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 3]], ["tile_ow", "sp", [-1, 8]], ["unroll_kw", "ot", false]]}, "result": [[0.00034], 0, 2.8432226181030273, 1655886071.5829275], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 23, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 6]], ["tile_ow", "sp", [-1, 4]], ["unroll_kw", "ot", true]]}, "result": [[0.000255], 0, 2.7958567142486572, 1655886074.537234], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 25, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 1]], ["tile_ow", "sp", [-1, 5]], ["unroll_kw", "ot", true]]}, "result": [[0.000155], 0, 2.6651906967163086, 1655886077.3360674], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 16, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 1]], ["tile_oc", "sp", [-1, 1]], ["tile_ow", "sp", [-1, 4]], ["unroll_kw", "ot", true]]}, "result": [[0.000175], 0, 2.7136850357055664, 1655886080.1852794], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 75, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 2]], ["tile_ow", "sp", [-1, 5]], ["unroll_kw", "ot", false]]}, "result": [[0.000335], 0, 2.898169994354248, 1655886083.2180862], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 49, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 1]], ["tile_ow", "sp", [-1, 1]], ["unroll_kw", "ot", false]]}, "result": [[0.000343], 0, 2.653069257736206, 1655886086.0076249], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 38, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 1]], ["tile_oc", "sp", [-1, 6]], ["tile_ow", "sp", [-1, 8]], ["unroll_kw", "ot", true]]}, "result": [[0.000295], 0, 2.7871172428131104, 1655886088.933378], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 61, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 3]], ["tile_ow", "sp", [-1, 2]], ["unroll_kw", "ot", false]]}, "result": [[0.000406], 0, 2.718482255935669, 1655886091.8119724], "version": 0.2, "tvm_version": "0.9.dev0"}

{"input": ["c -keys=cpu -link-params=0 -mcpu=cortex-m7 -model=imxrt10xx", "conv2d_NCHWc.x86", [["TENSOR", [1, 3, 10, 10], "float32"], ["TENSOR", [6, 3, 5, 5], "float32"], [1, 1], [2, 2, 2, 2], [1, 1], "NCHW", "NCHW", "float32"], {}], "config": {"index": 59, "code_hash": null, "entity": [["tile_ic", "sp", [-1, 3]], ["tile_oc", "sp", [-1, 2]], ["tile_ow", "sp", [-1, 2]], ["unroll_kw", "ot", false]]}, "result": [[0.000345], 0, 2.614313840866089, 1655886094.5607457], "version": 0.2, "tvm_version": "0.9.dev0"}
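To sanity-check these records against the terminal, each `result` field is `[costs, error_no, all_cost, timestamp]`, so throughput is FLOPs / mean(costs) / 1e6. A naive count for this conv2d (2 FLOPs per multiply-add, dilation 1 assumed) gives 90,000 FLOPs, while back-computing from the terminal (607.20 MFLOPS × 0.000155 s) gives 94,116, so AutoTVM presumably counts a few extra operations for this task; the sketch uses the back-computed value as an assumption. `conv2d_flops` and `rank_records` are hypothetical helpers, not AutoTVM APIs.

```python
import json


def conv2d_flops(data_shape, kernel_shape, padding, strides):
    """Naive FLOP count for a direct NCHW conv2d (dilation 1): 2 FLOPs per multiply-add."""
    n, c_in, h, w = data_shape
    c_out, _, kh, kw = kernel_shape
    pad_top, pad_left, pad_bottom, pad_right = padding
    out_h = (h + pad_top + pad_bottom - kh) // strides[0] + 1
    out_w = (w + pad_left + pad_right - kw) // strides[1] + 1
    return 2 * n * c_out * out_h * out_w * c_in * kh * kw


def rank_records(log_lines, flops):
    """Return (config_index, mean_cost_s, mflops) for successful records, fastest first."""
    rows = []
    for line in log_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        rec = json.loads(line)
        costs, error_no = rec["result"][0], rec["result"][1]
        if error_no != 0:
            continue  # failed measurement; its costs are not meaningful
        mean_cost = sum(costs) / len(costs)
        rows.append((rec["config"]["index"], mean_cost, flops / mean_cost / 1e6))
    return sorted(rows, key=lambda row: row[1])


# Shapes, padding and strides taken from the records' "input" field.
NAIVE_FLOPS = conv2d_flops([1, 3, 10, 10], [6, 3, 5, 5], [2, 2, 2, 2], [1, 1])
# Assumption: back-computed from the terminal (607.20 MFLOPS * 0.000155 s).
TUNER_FLOPS = 94116
```

Applied to the ten records above, the fastest entry is config index 25 at 0.000155 s, which corresponds to the 607.20 MFLOPS "Best" in the terminal.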

I'm not sure whether this result is correct, and what does `microTVM runtime: 0-length read, exiting!` mean?

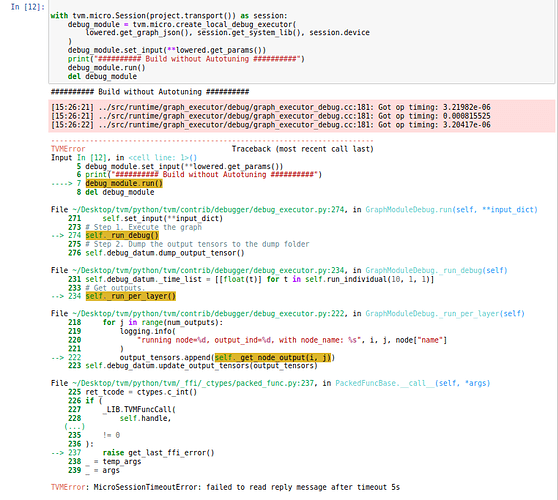

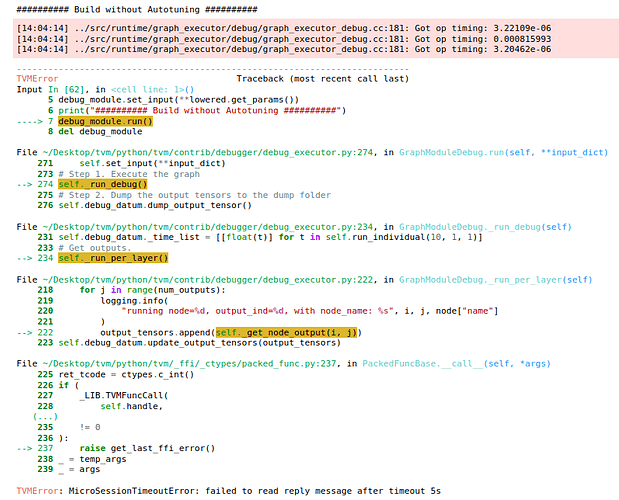

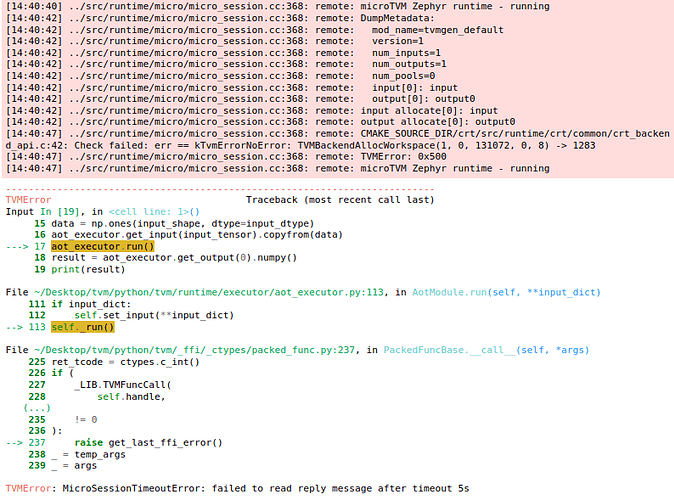

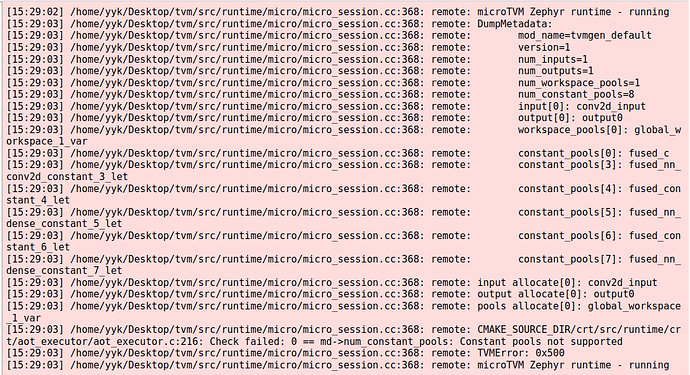

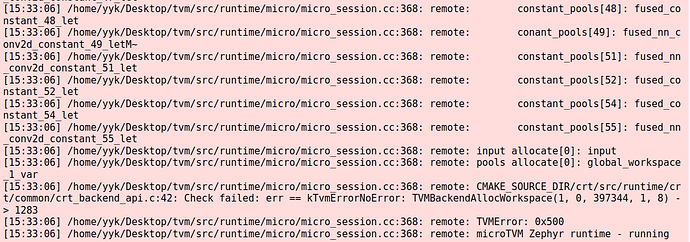

Then another error occurred when I tried to time the untuned program:

########## Build without Autotuning ##########

[15:26:21] ../src/runtime/graph_executor/debug/graph_executor_debug.cc:181: Got op timing: 3.21982e-06

[15:26:21] ../src/runtime/graph_executor/debug/graph_executor_debug.cc:181: Got op timing: 0.000815525

[15:26:22] ../src/runtime/graph_executor/debug/graph_executor_debug.cc:181: Got op timing: 3.20417e-06

---------------------------------------------------------------------------

TVMError Traceback (most recent call last)

Input In [12], in <cell line: 1>()

5 debug_module.set_input(**lowered.get_params())

6 print("########## Build without Autotuning ##########")

----> 7 debug_module.run()

8 del debug_module

File ~/Desktop/tvm/python/tvm/contrib/debugger/debug_executor.py:274, in GraphModuleDebug.run(self, **input_dict)

271 self.set_input(**input_dict)

273 # Step 1. Execute the graph

--> 274 self._run_debug()

275 # Step 2. Dump the output tensors to the dump folder

276 self.debug_datum.dump_output_tensor()

File ~/Desktop/tvm/python/tvm/contrib/debugger/debug_executor.py:234, in GraphModuleDebug._run_debug(self)

231 self.debug_datum._time_list = [[float(t)] for t in self.run_individual(10, 1, 1)]

233 # Get outputs.

--> 234 self._run_per_layer()

File ~/Desktop/tvm/python/tvm/contrib/debugger/debug_executor.py:222, in GraphModuleDebug._run_per_layer(self)

218 for j in range(num_outputs):

219 logging.info(

220 "running node=%d, output_ind=%d, with node_name: %s", i, j, node["name"]

221 )

--> 222 output_tensors.append(self._get_node_output(i, j))

223 self.debug_datum.update_output_tensors(output_tensors)

File ~/Desktop/tvm/python/tvm/_ffi/_ctypes/packed_func.py:237, in PackedFuncBase.__call__(self, *args)

225 ret_tcode = ctypes.c_int()

226 if (

227 _LIB.TVMFuncCall(

228 self.handle,

(...)

235 != 0

236 ):

--> 237 raise get_last_ffi_error()

238 _ = temp_args

239 _ = args

TVMError: MicroSessionTimeoutError: failed to read reply message after timeout 5s
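The traceback shows that the per-op timing pass (`self.run_individual(10, 1, 1)` in `_run_debug`) finished and printed three `Got op timing` lines; the timeout came afterwards, in `_run_per_layer`, while `_get_node_output` was fetching node outputs. The sketch below extracts those timings and how the total splits across ops. The regex assumes the exact log format shown, the helper names are hypothetical, and the log does not say which op each timing belongs to.

```python
import re

# Matches the value in e.g. "...graph_executor_debug.cc:181: Got op timing: 0.000815525"
OP_TIMING_RE = re.compile(r"Got op timing: ([0-9.eE+-]+)")


def op_timings(log_text):
    """Extract per-op times in seconds from 'Got op timing: <t>' debug-executor lines."""
    return [float(m.group(1)) for m in OP_TIMING_RE.finditer(log_text)]


def time_shares(timings):
    """Fraction of the summed per-op time spent in each op."""
    total = sum(timings)
    return [t / total for t in timings]
```

With the three values above, the second op takes about 0.82 ms, roughly 99% of the summed time, while the other two take about 3.2 µs each.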

Maybe I should start a new topic for this?