    mod, params = relay_from_onnx(
        onnx_model, opset=13, freeze_params=True, shape={"input.1": (1, 3, 1024, 1024)}
    )

    passes = tvm.transform.Sequential([
        relay.transform.InferType(),
        relay.transform.FakeQuantizationToInteger(),
    ])

    mod = passes(mod)

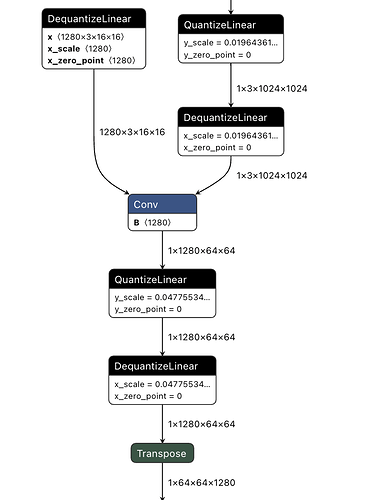

I tried the code above, but it seems that TVM doesn't handle delayed dequantize operations. For example, I want Q-DQ-conv-Q-DQ-conv to become Q-conv-requant-conv-requant-DQ, i.e. full int8 inference where the only dequantize is at the very end. Is there any way to do this?
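To make the rewrite I'm asking about concrete, here is a minimal plain-Python sketch of the arithmetic (not TVM code). It assumes per-tensor affine quantization with int8 outputs, and the helper names `quantize`, `dequantize`, and `requantize` are hypothetical, made up for illustration. It only shows that a DQ followed by a Q between two layers can be collapsed into a single integer-to-integer requantize step with the same result.

```python
# Illustrative only: scalar model of per-tensor affine quantization.
# These helpers are hypothetical and are NOT TVM APIs.

def clamp_int8(v):
    """Saturate an integer to the int8 range [-128, 127]."""
    return max(-128, min(127, v))

def quantize(x, scale, zero_point):
    """float -> int8: q = clamp(round(x / scale) + zero_point)."""
    return clamp_int8(round(x / scale) + zero_point)

def dequantize(q, scale, zero_point):
    """int -> float: x = (q - zero_point) * scale."""
    return (q - zero_point) * scale

def requantize(q, in_scale, in_zp, out_scale, out_zp):
    """int -> int8 in one step, with no intermediate float tensor."""
    return clamp_int8(round((q - in_zp) * in_scale / out_scale) + out_zp)

# Assumed example values: an integer conv accumulator whose scale is
# input_scale * weight_scale, and the quantization params of the next layer.
acc_scale, acc_zp = 0.02, 0
out_scale, out_zp = 0.1, 3

accumulators = range(-1000, 1001)

# Path 1: the Q-DQ style graph (dequantize, then quantize again).
dq_then_q = [
    quantize(dequantize(a, acc_scale, acc_zp), out_scale, out_zp)
    for a in accumulators
]

# Path 2: the fused form (a single requantize between the two convs).
fused = [
    requantize(a, acc_scale, acc_zp, out_scale, out_zp)
    for a in accumulators
]

# Both paths give the same int8 values for every accumulator tested.
assert dq_then_q == fused
```

In this toy model both paths produce identical values, which is why I would expect the inner DQ/Q pairs to be removable so that only the final DQ remains.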