Hi,

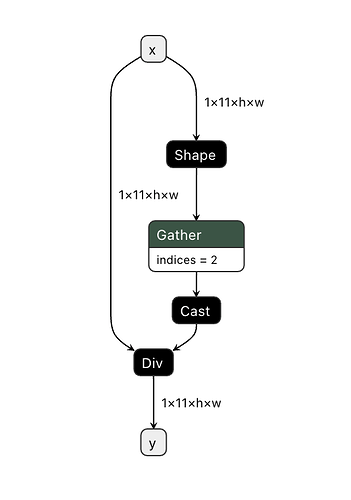

Here is a PyTorch snippet that divides a tensor by the size of one of its own dimensions:

    import torch.nn as nn

    class IFBlock(nn.Module):
        def __init__(self):
            super(IFBlock, self).__init__()

        def forward(self, x):
            # Divide the tensor by the size of its dimension 2.
            return x / x.shape[2]

When I try this with Relax and a dynamic input shape, the Relax ONNX frontend (`onnx_frontend`) fails with the following error:

      File "/data/aigc/workset/tvm_upstream/python/tvm/relax/frontend/onnx/onnx_frontend.py", line 2397, in from_onnx
        return g.from_onnx(graph, opset)
      File "/data/aigc/workset/tvm_upstream/python/tvm/relax/frontend/onnx/onnx_frontend.py", line 2040, in from_onnx
        self._construct_nodes(graph)
      File "/data/aigc/workset/tvm_upstream/python/tvm/relax/frontend/onnx/onnx_frontend.py", line 2203, in _construct_nodes
        op = self._convert_operator(op_name, inputs, attr, self.opset)
      File "/data/aigc/workset/tvm_upstream/python/tvm/relax/frontend/onnx/onnx_frontend.py", line 2301, in _convert_operator
        sym = op_function(self.bb, inputs, attrs, [self._nodes, self._params])
      File "/data/aigc/workset/tvm_upstream/python/tvm/relax/frontend/onnx/onnx_frontend.py", line 244, in _impl_v14
        else inputs[0].data.numpy()
      File "/data/aigc/workset/tvm_upstream/python/tvm/runtime/object.py", line 75, in __getattr__
        raise AttributeError(f"{type(self)} has no attribute {name}") from None
    AttributeError: <class 'tvm.relax.expr.Var'> has no attribute data

So I wonder whether this kind of operation is supported in Relax. In other words, how could the function above be implemented in pure Relax script?

Thx~