Hello everyone,

I have just installed TVM and was going through the tutorial Automatically Tuning the ResNet Model.

I ran

tvmc tune --target "llvm" --output resnet50-v2-7-autotuner_records.json resnet50-v2-7.onnx,

but it failed with the message:


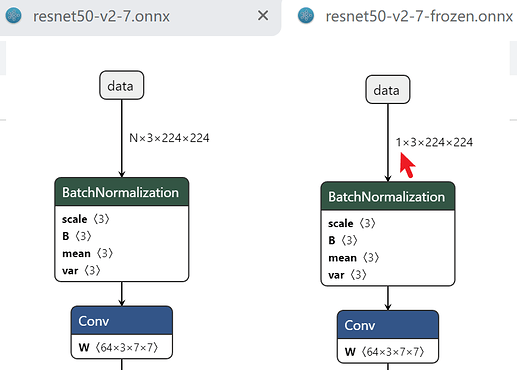

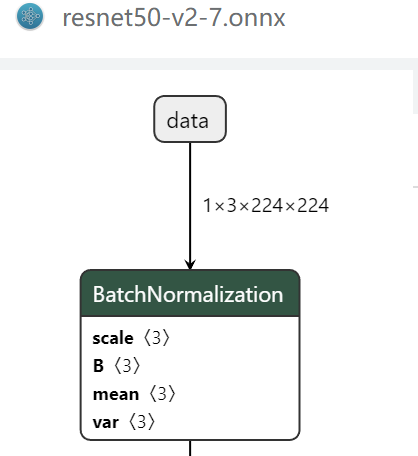

/root/tvm/python/tvm/relay/frontend/onnx.py:5538: UserWarning: Input data has unknown dimension shapes: ['N', 3, 224, 224]. Specifying static values may improve performance
  warnings.warn(warning_msg)
/root/tvm/python/tvm/driver/build_module.py:267: UserWarning: target_host parameter is going to be deprecated. Please pass in tvm.target.Target(target, host=target_host) instead.
  warnings.warn(
/root/tvm/python/tvm/target/target.py:272: UserWarning: target_host parameter is going to be deprecated. Please pass in tvm.target.Target(target, host=target_host) instead.
  warnings.warn(
URLError(gaierror(-3, 'Temporary failure in name resolution'))
Download attempt 0/3 failed, retrying.
URLError(gaierror(-3, 'Temporary failure in name resolution'))
Download attempt 1/3 failed, retrying.
WARNING:root:Failed to download tophub package for llvm: <urlopen error [Errno -3] Temporary failure in name resolution>
Traceback (most recent call last):
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 523, in _prod_length
    num_iter = int(np.prod([get_const_int(axis.dom.extent) for axis in axes]))
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 523, in <listcomp>
    num_iter = int(np.prod([get_const_int(axis.dom.extent) for axis in axes]))
  File "/root/tvm/python/tvm/autotvm/utils.py", line 164, in get_const_int
    raise ValueError("Expect value to be constant int")
ValueError: Expect value to be constant int

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 613, in compute_flop
    ret = traverse(sch.outputs)
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 592, in traverse
    num_element = _prod_length(op.axis)
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 525, in _prod_length
    raise FlopCalculationError("The length of axis is not constant. ")
tvm.autotvm.task.task.FlopCalculationError: The length of axis is not constant.

During handling of the above exception, another exception occurred:

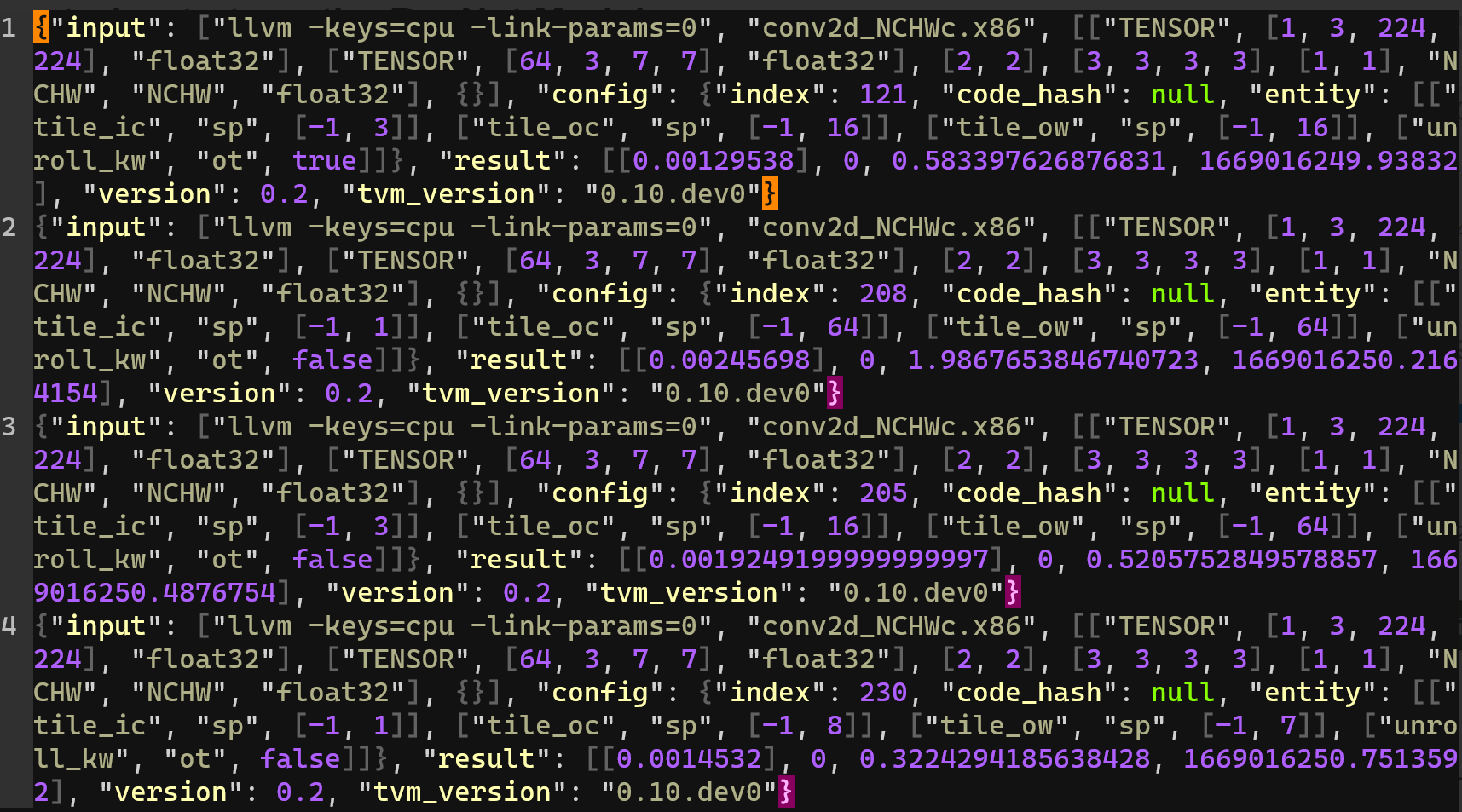

Traceback (most recent call last):
  File "/root/anaconda3/envs/tvm/lib/python3.8/runpy.py", line 192, in _run_module_as_main
    return _run_code(code, main_globals, None,
  File "/root/anaconda3/envs/tvm/lib/python3.8/runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "/root/tvm/python/tvm/driver/tvmc/main.py", line 24, in <module>
    tvmc.main.main()
  File "/root/tvm/python/tvm/driver/tvmc/main.py", line 115, in main
    sys.exit(_main(sys.argv[1:]))
  File "/root/tvm/python/tvm/driver/tvmc/main.py", line 103, in _main
    return args.func(args)
  File "/root/tvm/python/tvm/driver/tvmc/autotuner.py", line 273, in drive_tune
    tune_model(
  File "/root/tvm/python/tvm/driver/tvmc/autotuner.py", line 470, in tune_model
    tasks = autotvm_get_tuning_tasks(
  File "/root/tvm/python/tvm/driver/tvmc/autotuner.py", line 532, in autotvm_get_tuning_tasks
    tasks = autotvm.task.extract_from_program(
  File "/root/tvm/python/tvm/autotvm/task/relay_integration.py", line 84, in extract_from_program
    return extract_from_multiple_program([mod], [params], target, ops=ops)
  File "/root/tvm/python/tvm/autotvm/task/relay_integration.py", line 150, in extract_from_multiple_program
    tsk = create(task_name, args, target=target)
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 483, in create
    ret.flop = ret.config_space.flop or compute_flop(sch)
  File "/root/tvm/python/tvm/autotvm/task/task.py", line 615, in compute_flop
    raise RuntimeError(
RuntimeError: FLOP estimator fails for this operator. Error msg: The length of axis is not constant. . Please use `cfg.add_flop` to manually set FLOP for this operator
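Reading the first warning together with the final error, my guess is that the symbolic batch dimension `'N'` in `['N', 3, 224, 224]` is what the FLOP estimator cannot handle. I have not confirmed this. The sketch below only builds a static shape string from the shape in the warning and prints a candidate command. It assumes the model input is named `data`, that a batch size of 1 is acceptable, and that my `tvmc tune` accepts an `--input-shapes` option (I still need to check `tvmc tune --help`). The helper `static_shape_arg` is a made-up name for illustration, not part of TVM.

```python
# Sketch: turn a shape containing symbolic dims (e.g. 'N') into a static
# "name:[d1,d2,...]" string. The helper name is hypothetical, not a TVM API.
def static_shape_arg(input_name, shape, fill=1):
    """Replace every non-integer dimension with `fill` and format the result."""
    dims = [d if isinstance(d, int) else fill for d in shape]
    return f"{input_name}:[{','.join(str(d) for d in dims)}]"


# Shape copied from the UserWarning above; "data" is an assumed input name.
arg = static_shape_arg("data", ["N", 3, 224, 224])

# Candidate command; --input-shapes is assumed to be supported by tvmc tune.
print(
    'tvmc tune --target "llvm" '
    f'--input-shapes "{arg}" '
    "--output resnet50-v2-7-autotuner_records.json resnet50-v2-7.onnx"
)
```

If the input name or the flag turns out to be different on my install, only the two assumed values above would need to change.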

My system is Ubuntu 18.04 (WSL2), and I believe I have installed TVM and its dependencies correctly.

If anyone can give me some advice to solve the problem, I would appreciate it.