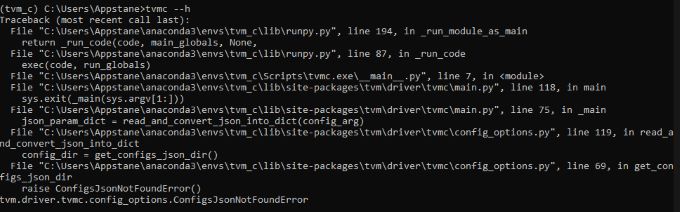

I have recently started learning about TVM. Following the official mlc-llm documentation, I installed TVM in a conda environment on Windows using `python3 -m pip install --pre -U -f https://mlc.ai/wheels mlc-ai-nightly` and validated the installation. However, when I run `tvmc --h`, I get the attached error:

I have also tried a Windows virtual environment (venv) and followed the instructions on tlcpack.ai, but the error persists.

Is there a problem with my installation, or am I missing a command? I'm not sure what's causing this and would appreciate some help from the community.