I’m currently reading some assembly code from ACL (Arm Compute Library) and trying to reproduce some of its well-designed kernels in TVM using schedule primitives, but I haven't been able to get the codegen result I expected.

For example, the ARMv8 Neon instruction set has 32 vector registers, each 128 bits wide. By adding `cache_read`/`cache_write` and a few other schedule primitives, I managed to get the following schedule for a 510 × 512 × 512 matmul workload:

```
primfn(A_1: handle, B_1: handle, C_1: handle) -> ()
  attr = {"global_symbol": "main", "tir.noalias": True}
  buffers = {C: Buffer(C_2: Pointer(float32), float32, [510, 512], [], align=64),
             B: Buffer(B_2: Pointer(float32), float32, [512, 512], [], align=64),
             A: Buffer(A_2: Pointer(float32), float32, [510, 512], [], align=64)}
  buffer_map = {A_1: A, B_1: B, C_1: C} {
  attr [C.local: Pointer(float32)] "storage_scope" = "local";
  allocate(C.local, float32, [96]);
  attr [A.local: Pointer(float32)] "storage_scope" = "local";
  allocate(A.local, float32, [24]);
  attr [B.local: Pointer(float32)] "storage_scope" = "local";
  allocate(B.local, float32, [8]);
  for (i.outer: int32, 0, 85) {
    for (j.outer: int32, 0, 32) {
      for (j.c.outer.outer.inner.init: int32, 0, 2) {
        for (i.c.inner.init: int32, 0, 6) {
          for (j.c.outer.inner.init: int32, 0, 2) {
            C.local[ramp((((i.c.inner.init*16) + (j.c.outer.outer.inner.init*8)) + (j.c.outer.inner.init*4)), 1, 4)] = broadcast(0f32, 4)
          }
        }
      }
      for (k.outer: int32, 0, 128) {
        for (ax0: int32, 0, 6) {
          A.local[ramp((ax0*4), 1, 4)] = (float32x4*)A_2[ramp((((i.outer*3072) + (ax0*512)) + (k.outer*4)), 1, 4)]
        }
        for (j.c.outer.outer.inner: int32, 0, 2) {
          for (k.inner: int32, 0, 4) {
            B.local[ramp(0, 1, 8)] = (float32x8*)B_2[ramp(((((k.outer*2048) + (k.inner*512)) + (j.outer*16)) + (j.c.outer.outer.inner*8)), 1, 8)]
            for (i.c.inner: int32, 0, 6) {
              for (j.c.outer.inner: int32, 0, 2) {
                C.local[ramp((((i.c.inner*16) + (j.c.outer.outer.inner*8)) + (j.c.outer.inner*4)), 1, 4)] = ((float32x4*)C.local[ramp((((i.c.inner*16) + (j.c.outer.outer.inner*8)) + (j.c.outer.inner*4)), 1, 4)] + (broadcast((float32*)A.local[((i.c.inner*4) + k.inner)], 4)*(float32x4*)B.local[ramp((j.c.outer.inner*4), 1, 4)]))
              }
            }
          }
        }
      }
      for (i.inner: int32, 0, 6) {
        C_2[ramp((((i.outer*3072) + (i.inner*512)) + (j.outer*16)), 1, 16)] = (float32x16*)C.local[ramp((i.inner*16), 1, 16)]
      }
    }
  }
}
```
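To double-check what the loop nest above actually computes, it can be transcribed into plain Python. This is a sketch under a few assumptions: all buffers are flat row-major lists, `ramp(base, 1, n)` becomes the slice `[base:base + n]`, and the hard-coded strides 512/2048/3072 are rewritten in terms of K and N (they coincide because K = N = 512 in the original workload). The helper names `run_schedule` and `naive_matmul` are hypothetical, and small problem sizes are used because pure Python would be very slow at 510 × 512 × 512.

```python
import random


def run_schedule(A, B, M, N, K):
    """Transcription of the TIR loop nest. A is M*K, B is K*N, both flat row-major."""
    assert M % 6 == 0 and N % 16 == 0 and K % 4 == 0
    C = [0.0] * (M * N)
    for i_outer in range(M // 6):
        for j_outer in range(N // 16):
            # C.local: 6 x 16 accumulator tile, 96 floats (24 x 128-bit registers)
            C_local = [0.0] * 96
            for k_outer in range(K // 4):
                # A.local: 6 rows x 4 consecutive k values, 24 floats
                A_local = [0.0] * 24
                for ax0 in range(6):
                    base = i_outer * 6 * K + ax0 * K + k_outer * 4
                    A_local[ax0 * 4:ax0 * 4 + 4] = A[base:base + 4]
                for j_ooi in range(2):  # j.c.outer.outer.inner
                    for k_inner in range(4):
                        # B.local: 8 consecutive columns from one row of B
                        base = (k_outer * 4 + k_inner) * N + j_outer * 16 + j_ooi * 8
                        B_local = B[base:base + 8]
                        for i_inner in range(6):
                            a = A_local[i_inner * 4 + k_inner]  # broadcast scalar
                            for j_oi in range(2):  # j.c.outer.inner
                                c0 = i_inner * 16 + j_ooi * 8 + j_oi * 4
                                for lane in range(4):  # one float32x4 multiply-add
                                    C_local[c0 + lane] += a * B_local[j_oi * 4 + lane]
            # write the 6 x 16 tile back, one 16-float row at a time
            for i_inner in range(6):
                base = i_outer * 6 * N + i_inner * N + j_outer * 16
                C[base:base + 16] = C_local[i_inner * 16:i_inner * 16 + 16]
    return C


def naive_matmul(A, B, M, N, K):
    """Reference C = A @ B with the same flat row-major layout."""
    return [sum(A[i * K + k] * B[k * N + j] for k in range(K))
            for i in range(M) for j in range(N)]


random.seed(0)
M, N, K = 12, 32, 8  # 2 x 2 x 2 copies of the 6 x 16 x 4 micro-kernel
A = [float(random.randint(-3, 3)) for _ in range(M * K)]
B = [float(random.randint(-3, 3)) for _ in range(K * N)]
# small integer values keep float arithmetic exact, so == is safe here
assert run_schedule(A, B, M, N, K) == naive_matmul(A, B, M, N, K)
```

The transcription makes the intended register blocking explicit: per `k.inner` step, one broadcast element of `A.local` is multiplied against two 4-wide halves of `B.local` and accumulated into the 6 × 16 `C.local` tile.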

I was hoping the final codegen would assign 24 registers to `C.local`, 6 registers to `A.local`, and 2 registers to `B.local`. However, the assembly LLVM generated is completely different from what I expected.
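The expected assignment follows directly from the `allocate(...)` sizes in the dump: with 128-bit registers holding 4 float32 lanes each, the three local buffers need exactly 32 registers, i.e. the entire Neon vector register file. A small sketch of that arithmetic (the names `registers_needed` and `buffers` are illustrative only). Note it only checks capacity; fitting the budget does not by itself make LLVM's register allocator produce this assignment.

```python
NEON_VREGS = 32                     # AArch64 has 32 SIMD/FP vector registers
VREG_BITS = 128
FLOAT32_BITS = 32
LANES = VREG_BITS // FLOAT32_BITS   # 4 float32 lanes per register

# element counts taken from the allocate(...) statements in the TIR dump
buffers = {"C.local": 96, "A.local": 24, "B.local": 8}


def registers_needed(num_floats, lanes=LANES):
    # ceiling division: a partially filled register still occupies a whole register
    return -(-num_floats // lanes)


need = {name: registers_needed(n) for name, n in buffers.items()}
total = sum(need.values())
print(need, total)  # {'C.local': 24, 'A.local': 6, 'B.local': 2} 32
assert total <= NEON_VREGS
```

Since the total equals the register count exactly, any additional vector temporary (for example, a separately held broadcast of the `A.local` element) would already force a spill.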

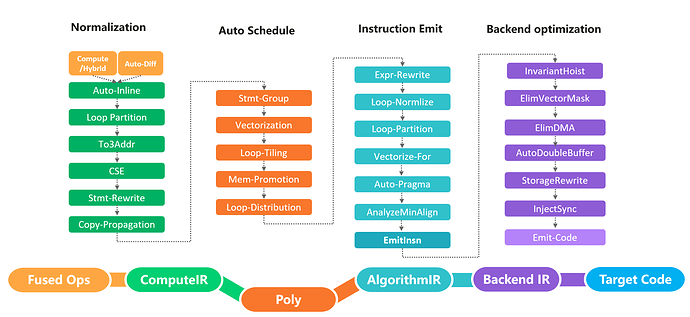

Emm… I know it is difficult to reproduce a hand-written assembly design after several levels of lowering (TE schedule primitives → IR AST → LLVM IR → assembly), but I'd still like to know whether there is any way to control the result more precisely.

Is the new TIR more likely to be “what you see is what you get” compared to the original TE?

(P.S. Is the “local” memory scope guaranteed to place a buffer in registers?)

(Maybe I'll give the TensorIR private branch a try…)