I have deployed some models on an RK3288, and an inference completes in, for example, 5 ms. However, the TVM threads seem to keep consuming CPU after inference has finished. I tested this with the following code:

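```cpp
#include <tvm/runtime/module.h>
#include <tvm/runtime/packed_func.h>
#include <tvm/runtime/registry.h>
#include <unistd.h>  // usleep

// json_data, mod_syslib, device_type, device_id, data and params are prepared earlier.
tvm::runtime::Module mod = (*tvm::runtime::Registry::Get("tvm.graph_runtime.create"))(
    json_data, mod_syslib, device_type, device_id);

tvm::runtime::PackedFunc set_input = mod.GetFunction("set_input");
set_input("data", data);

tvm::runtime::PackedFunc load_params = mod.GetFunction("load_params");
load_params(params);

tvm::runtime::PackedFunc run = mod.GetFunction("run");

while (true) {
  run();           // one inference, about 5 ms
  usleep(100000);  // sleep 100 ms (argument is in microseconds)
}
```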

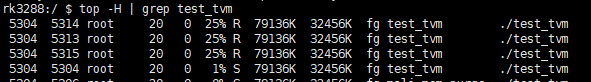

I used 4 TVM threads. Each `run()` call takes about 5 ms and is followed by a 100 ms sleep. However, `top` shows CPU usage of about 75%, and `top -H` shows 3 threads that each keep a CPU core busy continuously (the RK3288 has 4 ARM cores in total).
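To put a number on this from inside the program rather than from `top`, one option is to compare the process's CPU time with wall-clock time over the same run/sleep loop. The sketch below assumes Linux, where `getrusage(RUSAGE_SELF, ...)` sums CPU time over all threads of the process, so spinning pool threads are included. The helper names `ProcessCpuSeconds` and `MeasureBusyCores` are hypothetical, and `std::this_thread::sleep_for` stands in for `usleep`.

```cpp
#include <cassert>
#include <chrono>
#include <sys/resource.h>
#include <thread>

// User + system CPU time consumed so far by all threads of this process, in seconds.
double ProcessCpuSeconds() {
  rusage usage{};
  getrusage(RUSAGE_SELF, &usage);
  auto to_seconds = [](const timeval& tv) {
    return static_cast<double>(tv.tv_sec) + static_cast<double>(tv.tv_usec) / 1e6;
  };
  return to_seconds(usage.ru_utime) + to_seconds(usage.ru_stime);
}

// Calls work() `cycles` times, sleeping `sleep_us` microseconds after each call
// (the same pattern as the run()/usleep loop), and returns the average number of
// busy cores: CPU seconds divided by wall-clock seconds (1.0 = one core fully busy).
template <typename Work>
double MeasureBusyCores(Work work, int cycles, long sleep_us) {
  assert(cycles > 0);
  const double cpu_start = ProcessCpuSeconds();
  const auto wall_start = std::chrono::steady_clock::now();
  for (int i = 0; i < cycles; ++i) {
    work();
    std::this_thread::sleep_for(std::chrono::microseconds(sleep_us));
  }
  const std::chrono::duration<double> wall = std::chrono::steady_clock::now() - wall_start;
  return (ProcessCpuSeconds() - cpu_start) / wall.count();
}
```

With the objects from the loop above, a call such as `MeasureBusyCores([&] { run(); }, 50, 100000)` should come out well below 1.0 if the workers sleep between inferences. A value near 3 would be consistent with the three busy threads in `top -H`, and 3 busy cores out of 4 matches the roughly 75% overall CPU usage.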

My question: how can I make these threads go to sleep promptly once inference finishes, without releasing `tvm::runtime::Module mod`?
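For illustration only: a generic worker pool keeps a thread busy after its task finishes if the worker spins while waiting for the next task and only sleeps once a spin budget is used up. The sketch below (a hypothetical class `SpinThenBlockSignal`, not part of TVM) shows that spin-then-block pattern. With a large `spin_limit` an idle worker burns a core for a while, and with a small one it parks on a condition variable almost immediately. Whether TVM's thread pool waits this way, and how its spin budget can be tuned in a given TVM version, is an assumption to check against the runtime source.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical "task available" signal illustrating a spin-then-block wait.
class SpinThenBlockSignal {
 public:
  explicit SpinThenBlockSignal(int spin_limit) : spin_limit_(spin_limit) {
    assert(spin_limit >= 0);
  }

  // Producer side: publish one pending task and wake a sleeping waiter.
  void Notify() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      pending_.fetch_add(1, std::memory_order_release);
    }
    cv_.notify_one();
  }

  // Consumer side: returns true if a task was taken while spinning (the core
  // stayed busy), false if the thread had to block on the condition variable.
  bool Wait() {
    for (int i = 0; i < spin_limit_; ++i) {
      if (TryTake()) return true;
      std::this_thread::yield();
    }
    std::unique_lock<std::mutex> lock(mutex_);
    // The predicate takes the task itself, so a spinning consumer cannot steal it in between.
    cv_.wait(lock, [this] { return TryTake(); });
    return false;
  }

 private:
  // Atomically claims one pending task if any is available.
  bool TryTake() {
    int n = pending_.load(std::memory_order_acquire);
    while (n > 0) {
      if (pending_.compare_exchange_weak(n, n - 1, std::memory_order_acq_rel)) return true;
    }
    return false;
  }

  const int spin_limit_;
  std::atomic<int> pending_{0};
  std::mutex mutex_;
  std::condition_variable cv_;
};
```

In this design the spin phase trades CPU for latency: it helps when tasks arrive back to back, but with a 100 ms gap between inferences nearly all of that spinning is wasted, which is the kind of behavior described above.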