Currently, we have to use separate threads for spatial and reduction axes to do cross-thread reduction. Having one thread that both binds to spatial and reduction will cause incorrect codegen, even if they are in effect the same thread mapping.

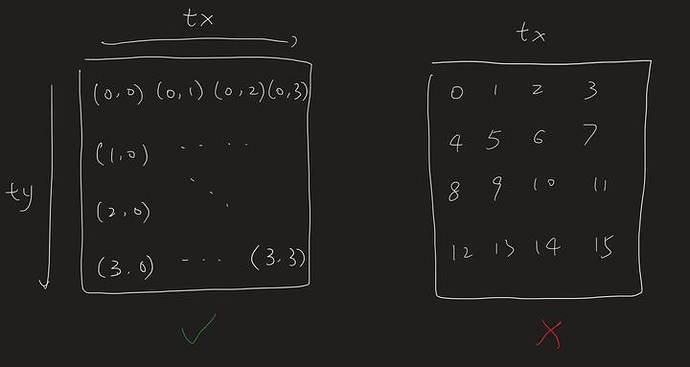

For example, if we use 16 threads to reduce 4x4 tensor A into 4x1 (torch.sum(A, dim=-1))

A repro: allreduce.py · GitHub

Why do we probably want this?

Suppose A is in a 4x4 packed layout tensor, and we’d like to reduce 8 rows in a thread block with 64 threads per CTA, then to obtain maximum coalesced read (contiguous threads access contiguous positions), we want the thread mapping to be also in 4x4 packed layout, but there’s no way to arrange tx and ty to achieve this while tx and ty are either only spatial or reduction at the same time.