There are quite a few mentions of new global memory planning, but with this I would like to start a more comprehensive discussion and would be interested in your current plans. I’m working on model transformations that aim to reduce memory usage on memory constrained devices. For this work to show full potential, I would need to implement a few changes in the memory planning of TVM. So this is also meant to align our goals.

Current status as far as I understand:

- GraphPlanMemory: Goes through the final Relay IR data-flow and allocates new buffers whenever required. Every buffer is a distinct storage and buffers can only be placed at the beginning.

- Intra-TIR function memory allocation: Temporary buffers for operations.

Proposed goals:

- Unify these two methods of allocation into a global memory plan. The first important step towards that seems to be approached through this: [RFC][uTVM] Query intermediary workspace requirement. I do not have anything to add in this regard.

- Improve method of planning. In my opinion, there are two good approaches:

- Use a heuristic like in TFLM to find an often optimal solution with low computational complexity

- In short: Sort buffers descending by size; loop through buffers, trying to place them at the lowest address of a linear buffer; if there is a lifetime-conflict, still place them at the lowest possible address, therefore finding “gaps”. (see https://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/micro/memory_planner/greedy_memory_planner.h)

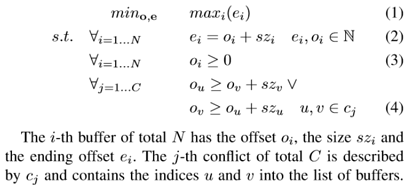

- Find the optimum with a mixed integer programming optimizer. This is pretty simple and optimal, but of course has higher computational complexity. It could look something like this:

- (1) sets the objective to minimize the largest ending offset

- (2) sets up constraints for ending and starting offsets

- (3) ensures positive offsets

- (4) sets up constraints against overlaps for conflicting buffers. conflicting buffers would simply be buffers that have overlapping lifetimes

- Use a heuristic like in TFLM to find an often optimal solution with low computational complexity

Both approaches requires an input in the form of a list of buffers with the attributes “size” and “lifetime”. Or more generally than “lifetime” could be just “conflict”.

My current plan to approach this is to change GraphPlanMemory into a function that analyzes the given Relay IR, but instead of returning the storage_ids, it would just return the buffers with sizes and lifetimes. Then the memory planning can work as an independent unit which transforms the given buffers (which can also include the intra-TIR function ones) to a static memory plan that would just consist of one “storage” for all storages that can be combined. Additional conflict constraints would come from the buffers being on different devices.

Open questions:

- Was there a reason why the buffers are only placed at the start of storages?

- Maybe related to above: How to handle exotic alignment requirements?

- How to align this with non-static memory plans?