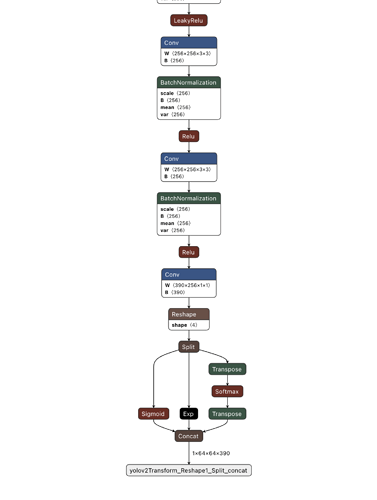

I have a relatively large ONNX model (~80MB) created using YOLO that I am attempting to use for object recognition in 512x512 images. Using python to compile and run the model works perfectly, using both Relay and tvmc. However, when attempting to run the same compiled model in C++, the output I get behaves as though no input were given, or as though a blank image were fed in. Here is the abbreviated code that is working in python to compile and run the model.

model = onnx.load(model_file_path);

shape_dict = {"data": (1, 3, 512, 512)}

mod, params = relay.frontend.from_onnx(model, shape_dict)

compiled = relay.build(mod, tvm.target.Target("llvm"), params=params)

compiled.export_library(compiled_model)

module = tvm.runtime.load_module(compiled_model, 'so')

dev = tvm.device(str(target), 0)

mod = graph_executor.GraphModule(module["default"](dev))

mod.set_input('data', img)

mod.run()

out = mod.get_output(0).numpy()

And here is the abbreviated code that successfully runs the model without error, but has an incorrect output:

tvm::runtime::Module mod_syslib = tvm::runtime::Module::LoadFromFile("<path goes here>/compiled_model_2.dylib");

DLDevice dev{kDLCPU, 0};

tvm::runtime::Module gmod = mod_syslib.GetFunction("default")(dev);

tvm::runtime::PackedFunc set_input = gmod.GetFunction("set_input");

tvm::runtime::PackedFunc get_output = gmod.GetFunction("get_output");

tvm::runtime::PackedFunc run = gmod.GetFunction("run");

tvm::runtime::NDArray x = tvm::runtime::NDArray::Empty({1, 3, 512, 512}, DLDataType{kDLFloat, 32, 1}, dev);

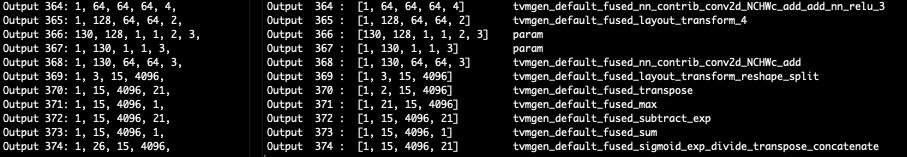

tvm::runtime::NDArray y = tvm::runtime::NDArray::Empty({1, 26, 15, 4096}, DLDataType{kDLFloat, 32, 1}, dev);

cv::Mat image_mat = cv::imread("<Path goes here>/pic.jpg");

cv::Mat image_form = cv::dnn::blobFromImage(image_mat, 1.0/255.0, cv::Size(512, 512), cv::Scalar(0,0,0), false);

x.CopyFromBytes(image_form.data, image_form.total()*image_form.channels()*image_form.elemSize());

set_input("data", x);

run();

get_output(0, y);

const int *inter_dim = new int[4] {26, 15, 64, 64};

cv::Mat out_data(y->ndim, inter_dim, CV_32FC1);

y.CopyToBytes(out_data.data, inter_dim[0] * inter_dim[1] * inter_dim[2] * inter_dim[3] * sizeof(float));

- I have also tried running the model in C++ by compiling the model using tvmc and creating the graph_executor using the following method. I was able to get it to run without error, but it had the same incorrect output as the previous method:

tvm::runtime::Module gmod = (*tvm::runtime::Registry::Get("tvm.graph_executor.create"))(json_data, mod_syslib, device_type, device_id);

- I’ve verified that the parameters are present in the C++ model, and appear to match what is present in the compilation of the model in the Python script. The only other input is the ‘data’ input.

- I have also verified that the input is indeed being set, as scaling the image color from 0-255 instead of what it is supposed to be, 0-1, heavily affects the appearance of the output, though it is just as incorrect.

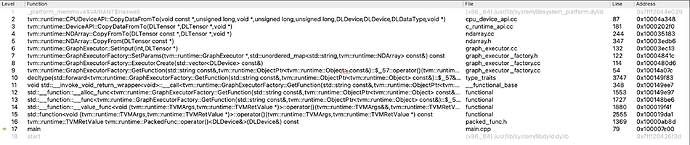

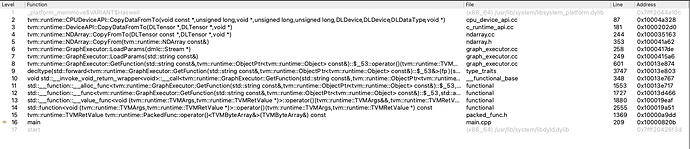

- I have attempted to compile the model by changing the target from “llvm” to “llvm -link-params” as suggested here, but while this compiles successfully, it causes both the python and C++ programs to crash once they attempt to create the graph executor using the newly compiled model. Here is the stack trace when this happens:

This is being compiled on an x86 MacBook Pro with macOS Big Sur Version 11.6.

The big mystery in my mind is why the Python interface is able to run perfectly, whereas I cannot seem to get the C++ implementation to give the correct output despite using the same compiled model and inputs, no matter what method I use.

Please let me know if any more information should be added. I’ve spent about 3 weeks or so troubleshooting this, so any help or suggestions are massively appreciated. Thank you very much for your time and attention.