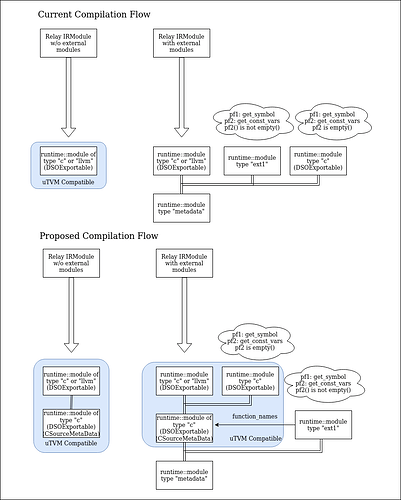

There has been a need for a common C-source module to hold metadata for uTVM, which mostly deals with “c” or “llvm” modules. One of the main needs is a model-wide function registry for the C runtime, given the absence of dlsym in bare-metal environments (discussed here: https://github.com/apache/tvm/pull/6950 ). There is a “metadata” module in the codebase, but it currently gets compiled and then re-created at runtime via packing and unpacking of imports.

Moreover, when using the BYOC compilation flow, all the external modules are wrapped in a metadata module unconditionally. This approach serves to separate metadata (currently only constants are separated out) from code, which eases the compilation of external modules. However, because the metadata module is a non-DSOExportable module, it uses the SaveToBinary() interface to pack itself as an import and uses the init() process to reconstruct itself at runtime on the stack/heap. Thus, in the world of uTVM, this process ends up creating the constants (params or otherwise) in volatile memory, which may not be practical for memory-constrained devices.
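To make the memory concern concrete, here is a minimal sketch contrasting the two placements. It is not TVM code: `demo_const_weights` and `demo_unpack_to_heap` are hypothetical names used only for illustration, and where a toolchain actually places `const` data depends on the linker script.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical constant emitted as C source at global scope. Being const,
 * a typical embedded toolchain can place it in read-only memory (flash). */
const float demo_const_weights[4] = {0.5f, 1.0f, 1.5f, 2.0f};

/* Hypothetical stand-in for unpacking a serialized blob at runtime:
 * the data is copied into freshly allocated RAM, so it costs volatile
 * memory in addition to wherever the blob itself is stored. */
float* demo_unpack_to_heap(const void* blob, size_t nbytes) {
  float* dst = malloc(nbytes);
  if (dst != NULL) {
    memcpy(dst, blob, nbytes);
  }
  return dst;
}
```

With the first form no copy is made at startup; with the second, every constant occupies RAM for as long as the module is loaded.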

While that works reasonably well for devices that are not memory constrained, we think it would be beneficial to have another layer of metadata module in the form of a CSourceModule, for bare-metal environments or for compilation flows that prefer not to unpack metadata/constants onto the stack/heap.

Thus, this RFC adds a C-source layer to the multi-module runtime-module hierarchy to hold the metadata that needs to be compiled in a global scope. It could be useful to the TVM stack in general, though most of the initial use is for bare-metal uTVM compilation, to hold the function registry.

We would very much like to hear thoughts on the proposal.

Function Registry

The main requirement for a C-source metadata module comes from the need for a function registry in bare-metal environments. This was one of the features introduced in this PR: [µTVM] Add --runtime=c, remove micro_dev target, enable LLVM backend by areusch · Pull Request #6145 · apache/tvm · GitHub. However, the function registry needs to include not just the functions present in the TIR-generated runtime module, but also those in all the modules that get generated from the relay IRModule in the presence of external modules. Thus, this RFC proposes that every runtime module implement a PackedFunc, “get_func_names”, that returns the names of the functions it contains. With that in place, we would no longer need to create the function registry as part of the TIR-based codegen of “c” and “llvm” modules, as is done today. Moreover, this enables the function names of all external modules to be included in the function registry that gets generated as C source in the CSourceMetaData module.
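As a rough illustration of what a model-wide registry emitted as C source could look like, here is a minimal sketch. It is not the layout used by the TVM C runtime: the `demo_*` names, the `int (*)(int)` signature, and the linear lookup are all assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical function signature; the real C runtime uses its own
 * packed-function calling convention. */
typedef int (*demo_func_t)(int);

typedef struct {
  const char* name;
  demo_func_t func;
} demo_registry_entry_t;

/* Stand-in for a function from the TIR-generated module. */
static int demo_fused_add(int x) { return x + 1; }
/* Stand-in for a function from an external (BYOC) module. */
static int demo_ext_accel_0(int x) { return x * 2; }

/* One table covering every module, built from the names each module
 * reports. Declared const so it can live in read-only memory. */
static const demo_registry_entry_t demo_registry[] = {
    {"demo_fused_add", demo_fused_add},
    {"demo_ext_accel_0", demo_ext_accel_0},
};

/* Name-based lookup that replaces dlsym on bare metal.
 * Returns NULL when the name is not registered. */
demo_func_t demo_lookup(const char* name) {
  assert(name != NULL);
  const size_t count = sizeof(demo_registry) / sizeof(demo_registry[0]);
  for (size_t i = 0; i < count; ++i) {
    if (strcmp(demo_registry[i].name, name) == 0) {
      return demo_registry[i].func;
    }
  }
  return NULL;
}
```

A caller would resolve a function once by name, for example `demo_func_t f = demo_lookup("demo_ext_accel_0");`, and check for NULL before invoking it. Because the table is generated from the names each module reports, external functions appear in it alongside TIR-generated ones.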
