I am new to TVM, and I want to know whether the parameter `disabled_pass` can be set to any pass. If not, what restrictions apply to this parameter?
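For reference, here is my current understanding of how the TVM source around this version treats `disabled_pass`. It appears to be a plain list of pass names compared as strings, so any name is accepted and an unknown name is silently ignored. The check happens where a pipeline such as `tvm.transform.Sequential` consults the current `PassContext` before running each pass: a disabled name wins, then a required name, then the `opt_level` comparison. The block below is a simplified pure-Python model of that gating logic, not TVM code. `PassInfo`, `PassContextModel` and `passes_that_run` are hypothetical names, and the `opt_level` values in the example pipeline are only illustrative.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class PassInfo:
    """Hypothetical stand-in for a pass's metadata: its name and minimum opt_level."""
    name: str
    opt_level: int


@dataclass
class PassContextModel:
    """Simplified model of a pass context (NOT TVM's implementation)."""
    opt_level: int = 2
    disabled_pass: List[str] = field(default_factory=list)
    required_pass: List[str] = field(default_factory=list)

    def pass_enabled(self, info: PassInfo) -> bool:
        # Names are compared as plain strings; a misspelled or unknown
        # name never matches anything, so it has no effect.
        if info.name in self.disabled_pass:
            return False
        if info.name in self.required_pass:
            return True
        return self.opt_level >= info.opt_level


def passes_that_run(ctx: PassContextModel, pipeline: Sequence[PassInfo]) -> List[str]:
    """Return, in order, the names of the passes the context lets run."""
    return [p.name for p in pipeline if ctx.pass_enabled(p)]


# Illustrative pipeline; the opt_level numbers are assumptions for the demo.
pipeline = [
    PassInfo("SimplifyInference", 0),
    PassInfo("FoldConstant", 2),
    PassInfo("FuseOps", 0),
]

print(passes_that_run(PassContextModel(opt_level=1), pipeline))
print(passes_that_run(PassContextModel(opt_level=1, disabled_pass=["SimplifyInference"]), pipeline))
print(passes_that_run(PassContextModel(opt_level=1, disabled_pass=["NoSuchPass"]), pipeline))
```

In this model, disabling a pass only removes it from the pipeline. Nothing checks whether later stages still depend on the work that pass would have done.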

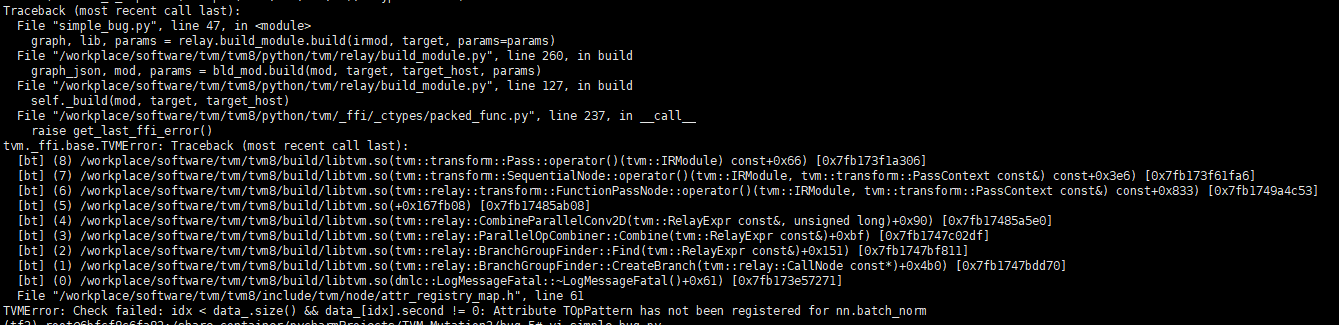

The crash messages are as follows:

In addition, to confirm that `disabled_pass=['SimplifyInference']` is what triggers the bug, I ran the following experiments:

- Changing `opt_level` (from 0 to 4) → `opt_level` does not appear to be related to this crash.

- Disabling other passes instead → disabling the other passes did not cause a crash.

- Using other models → the model does not seem to be related to this crash.

- Adding an extra statement before the build, `irmod, params = relay.optimize(irmod, target=target, params=params)` → the bug disappears.
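The experiments in the list above can be scripted as one sweep over `opt_level` values and candidate pass names. This is a minimal sketch that assumes a hypothetical `build_fn(opt_level, disabled)` callable. In the real experiment it would wrap `relay.build_module.build(...)` inside `tvm.transform.PassContext(opt_level=..., disabled_pass=...)`. Here it is replaced by a fake that fails whenever `SimplifyInference` is disabled, purely to show how the harness records outcomes.

```python
import itertools
from typing import Callable, Dict, Iterable, Optional, Tuple


def run_matrix(
    build_fn: Callable[[int, Tuple[str, ...]], None],
    opt_levels: Iterable[int],
    disabled_candidates: Iterable[Optional[str]],
) -> Dict[Tuple[int, Optional[str]], str]:
    """Call build_fn for every (opt_level, disabled pass) pair and record the outcome.

    A candidate of None means "disable nothing" (the baseline build).
    """
    results = {}
    for level, name in itertools.product(opt_levels, disabled_candidates):
        disabled = (name,) if name is not None else ()
        try:
            build_fn(level, disabled)
            results[(level, name)] = "ok"
        except Exception as err:  # record the failure instead of stopping the sweep
            results[(level, name)] = f"crash: {type(err).__name__}"
    return results


def fake_build(opt_level, disabled):
    # Hypothetical stand-in for the real TVM build: it "crashes" whenever
    # SimplifyInference is disabled, mimicking the behaviour reported above.
    if "SimplifyInference" in disabled:
        raise RuntimeError("simulated build failure")


table = run_matrix(fake_build, range(5), [None, "SimplifyInference", "FoldConstant"])
for key, outcome in sorted(table.items(), key=str):
    print(key, outcome)
```

Catching the exception per combination keeps one failing configuration from hiding the results of all the others.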

Even though the bug disappears in experiment 4, I still don't understand why `disabled_pass = ['SimplifyInference']` triggers the crash, or why adding an extra optimization statement (`relay.optimize`) avoids it.

Here is a script that reproduces the crash:

```python
import keras
import os
import tvm
from tvm import te
import tvm.relay as relay
import numpy as np
from PIL import Image
import tvm.runtime as runtime
from tvm.contrib import graph_runtime

input_tensor = 'conv2d_1_input'


def image_resize(x, shape):
    # Resize every image in the batch to `shape` (width, height) with PIL.
    x_return = []
    for x_test in x:
        tmp = np.copy(x_test)
        img = Image.fromarray(tmp.astype('uint8')).convert('RGB')
        img = img.resize(shape, Image.ANTIALIAS)
        x_return.append(np.array(img))
    return np.array(x_return)


input_precessor = keras.applications.vgg16.preprocess_input
input_shape = (32, 32)
dataset_dir = "/share_container/data/dataset/"
data_path = os.path.join(dataset_dir, "imagenet-val-1500.npz")
data = np.load(data_path)
x, y = data['x_test'], data['y_test']
x_resize = image_resize(np.copy(x), input_shape)
x_test = input_precessor(x_resize)
y_test = keras.utils.to_categorical(y, num_classes=1000)

model_path = '/share_container/data/keras_model/vgg16-cifar10_origin.h5'
print('hi')
predict_model = keras.models.load_model(model_path)
print(predict_model.input)

# The Keras frontend defaults to NCHW layout, hence (1, 3, 32, 32).
shape_dict = {input_tensor: (1, 3, 32, 32)}
irmod, params = relay.frontend.from_keras(predict_model, shape_dict)

target = 'llvm'
ctx = tvm.cpu(0)

# Uncommenting the next line makes the crash disappear (the fourth experiment).
# irmod, params = relay.optimize(irmod, target=target, params=params)

disabled_pass = ['SimplifyInference']
with tvm.transform.PassContext(opt_level=1, disabled_pass=disabled_pass):
    graph, lib, params = relay.build_module.build(irmod, target, params=params)
```

Environment:

- TVM: 0.8.dev0
- ONNX: 1.8.1
- OS: Ubuntu 16.04