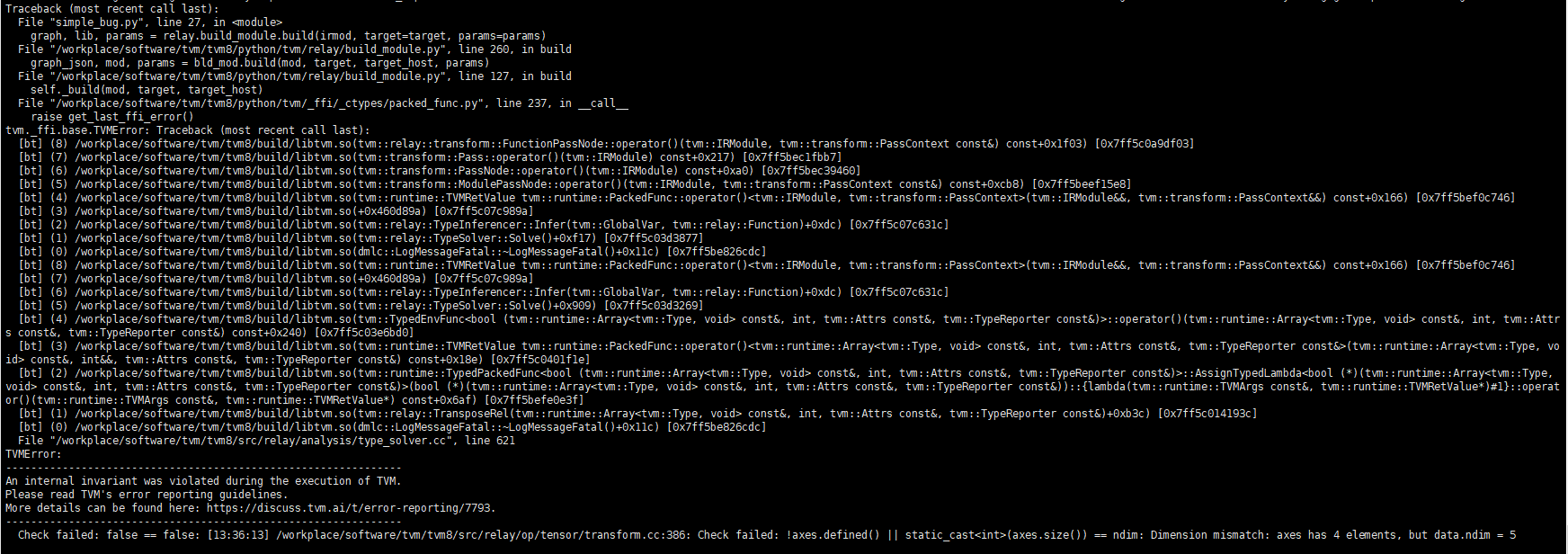

When `relay.vm.compile()` and `relay.build_module.build()` are executed one after the other, the script crashes.

However, the script runs fine if I remove either one of these two calls.

Script to reproduce the crash:

```python
import keras
import tvm
import tvm.relay as relay

if __name__ == '__main__':
    model_path = './vgg16-cifar10_origin.h5'
    target = 'llvm'

    predict_model = keras.models.load_model(model_path)
    shape_dict = {'conv2d_1_input': (1, 3, 32, 32)}
    irmod, params = relay.frontend.from_keras(predict_model, shape_dict)

    with tvm.transform.PassContext(opt_level=3):
        # Each call below succeeds on its own; running both crashes.
        vm_exec = relay.vm.compile(irmod, target, params=params)
        graph, lib, params = relay.build_module.build(irmod, target=target, params=params)
```

You can access the vgg16-cifar10_origin.h5 model from the link: