@popojames

First of all, I wanna double-check if I wanna re-build a new TVM environment, should I go with this GitHub branch: https://github.com/huajsj/tvm/tree/threadaffinity?

Yes, a rebuild is necessary to use the CPU affinity feature.

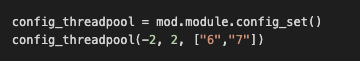

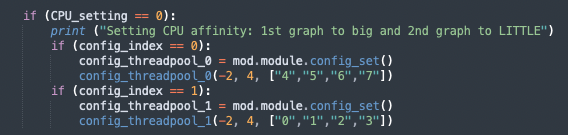

In this setting, I wanna double-check if I wanna use the “kSpecify” mode, is it similar to the original setting as shown below (i.e., calling config_threadpool) but with CPU affinity mode = -2 and adding parameters “cpus” and “exclude_worker0”?

You need to call the “Configure” function to apply the CPU affinity settings, like "tvm::runtime::threading::Configure(tvm::runtime::threading::ThreadGroup::kSpecify, 0, cpus, concurrency_config);".

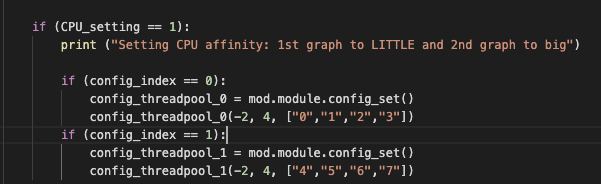

For the example that I mentioned above, if I wanna split the network into two sub-graphs, then set the first graph → 4 small cores, second graph → 4 big cores. (1) Should I set “cpus” = [4,4]? (2) How exactly to set the small & big CPU affinity order?

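“cpus” is a list of CPU core IDs, not core counts. Assuming the small cores are numbered 0-3 and the big cores 4-7 on your device, the 4 small CPUs should be {0, 1, 2, 3} and the 4 big CPUs should be {4, 5, 6, 7}.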

How exactly to launch multi-threads if I call tvm backend in python to run the benchmark?

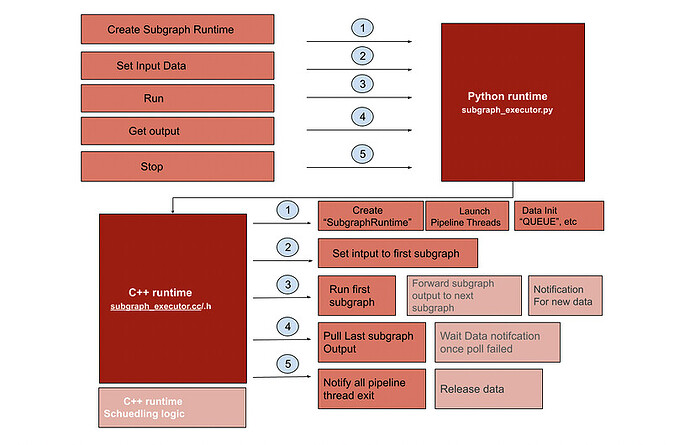

tvm::runtime::threading::Configure is a C++ function, so you can only call it from C++ code. After splitting the compute graph into 2 sub-graphs, you should run each sub-graph with its own runtime in a separate thread, and call Configure from that thread.
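To make the threading pattern concrete, here is a minimal sketch of "one thread per sub-graph, each with its own CPU list". It is not TVM code: `CoreRange`, `RunSubgraphs`, and the `configure` / `run_subgraph` callbacks are hypothetical names used only for illustration, so the sketch compiles without TVM. In a real build, the `configure` callback would presumably wrap the `tvm::runtime::threading::Configure(...)` call quoted above, and `run_subgraph` would run the runtime module of the corresponding sub-graph.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical callback types. In a TVM build, `configure` would wrap:
//   tvm::runtime::threading::Configure(
//       tvm::runtime::threading::ThreadGroup::kSpecify, 0, cpus, concurrency_config);
using ConfigureFn = std::function<void(const std::vector<unsigned int>&)>;
using RunFn = std::function<void(std::size_t)>;

// Build a list of consecutive core IDs: first, first + 1, ..., first + count - 1.
std::vector<unsigned int> CoreRange(unsigned int first, unsigned int count) {
  assert(count > 0);
  std::vector<unsigned int> cpus;
  for (unsigned int i = 0; i < count; ++i) cpus.push_back(first + i);
  return cpus;
}

// Start one thread per sub-graph. Each thread applies its own affinity
// configuration first, then runs its sub-graph. Blocks until all threads finish.
void RunSubgraphs(const std::vector<std::vector<unsigned int>>& cpu_lists,
                  const ConfigureFn& configure, const RunFn& run_subgraph) {
  std::vector<std::thread> workers;
  for (std::size_t i = 0; i < cpu_lists.size(); ++i) {
    workers.emplace_back([&, i] {
      configure(cpu_lists[i]);  // affinity is set from inside the worker thread
      run_subgraph(i);          // then the sub-graph runs on that thread
    });
  }
  for (auto& t : workers) t.join();
}
```

For the two sub-graph case discussed here, the call would look like `RunSubgraphs({CoreRange(0, 4), CoreRange(4, 4)}, configure, run_subgraph)`, again assuming cores 0-3 are the small cores and 4-7 the big ones on the target device.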

Is it possible to share any example code for this CPU affinity setting? I think one simple example like a hand-made multilayer perceptron with CPU splitting would be really helpful for me and other users to understand the whole process.

See tests/cpp/threading_backend_test.cc::TVMBackendAffinityConfigure for an example.

Happy new year