Hello TVM developers and community,

I have been running inference with TVM on CPU only, specifically on an ARM big.LITTLE CPU.

Is it possible for TVM to capture the communication cost between the big cores and the LITTLE cores of an ARM big.LITTLE CPU?

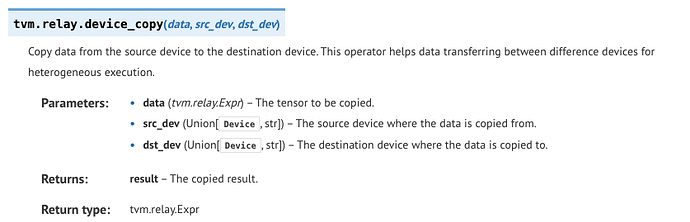

I know that in the GPU-CPU cooperation case, we can use `device_copy` to model the communication cost between the CPU and the GPU, and thereby obtain the communication cost between the CPU (llvm) and the GPU (OpenCL).

However, since both the big and the LITTLE CPU clusters are treated as a single "llvm" device, I cannot capture the communication cost between them the way I do for GPU-CPU. Is there any way to obtain this information?

Any thoughts are welcome.